Command Palette

Search for a command to run...

VQA (v2.0) Open Question Answering Dataset

Date

Size

Publish URL

Paper URL

License

Other

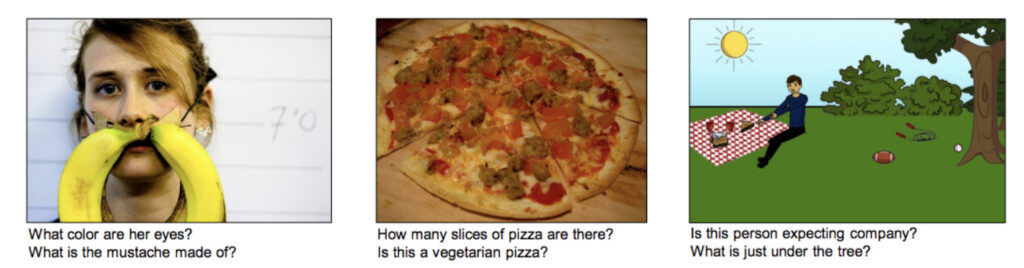

VQA (v2.0) stands for Visual Question Answering (v2.0), which is a manually annotated, open-ended question-answering dataset about images. To answer these questions, you need to have a certain understanding of images, language, and common sense.

Example image:

The dataset includes:

- 265,016 images (from COCO and abstract scenes datasets)

- The number of questions per image is greater than or equal to 3 (average 5.4 questions)

- Each question contains 10 ground truths

- Each question has 3 plausible (but not necessarily correct) answers

- Automatic evaluation metrics

The VQA dataset was first released in October 2015, and VQA v2.0 was released in April 2017.This dataset is version v2.0.Compared to VQA v1.0, v2.0 supplements each question with images to minimize language bias.

Build AI with AI

From idea to launch — accelerate your AI development with free AI co-coding, out-of-the-box environment and best price of GPUs.