Firefly Chinese Llama2 Incremental Pre-training Dataset

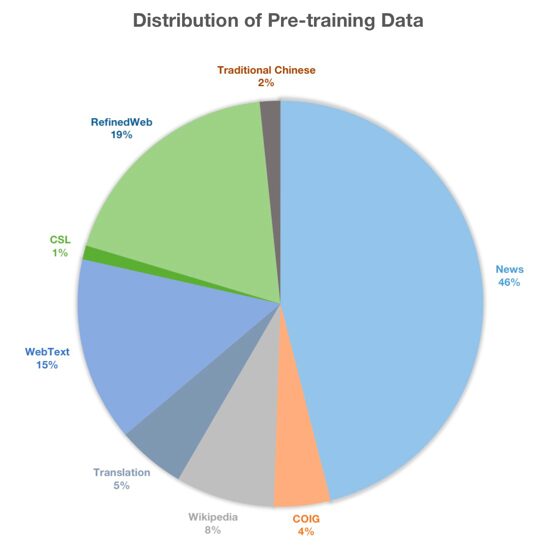

This dataset is the incremental pre-training data of the Firefly-LLaMA2-Chinese project. It totals about 22GB of text and consists mainly of open-source datasets such as CLUE, ThucNews, CNews, COIG, and Wikipedia, together with ancient poems, prose, classical Chinese texts, and similar material collected by the research team. The data distribution is shown in the accompanying figure.
