HumanSense Benchmark Dataset

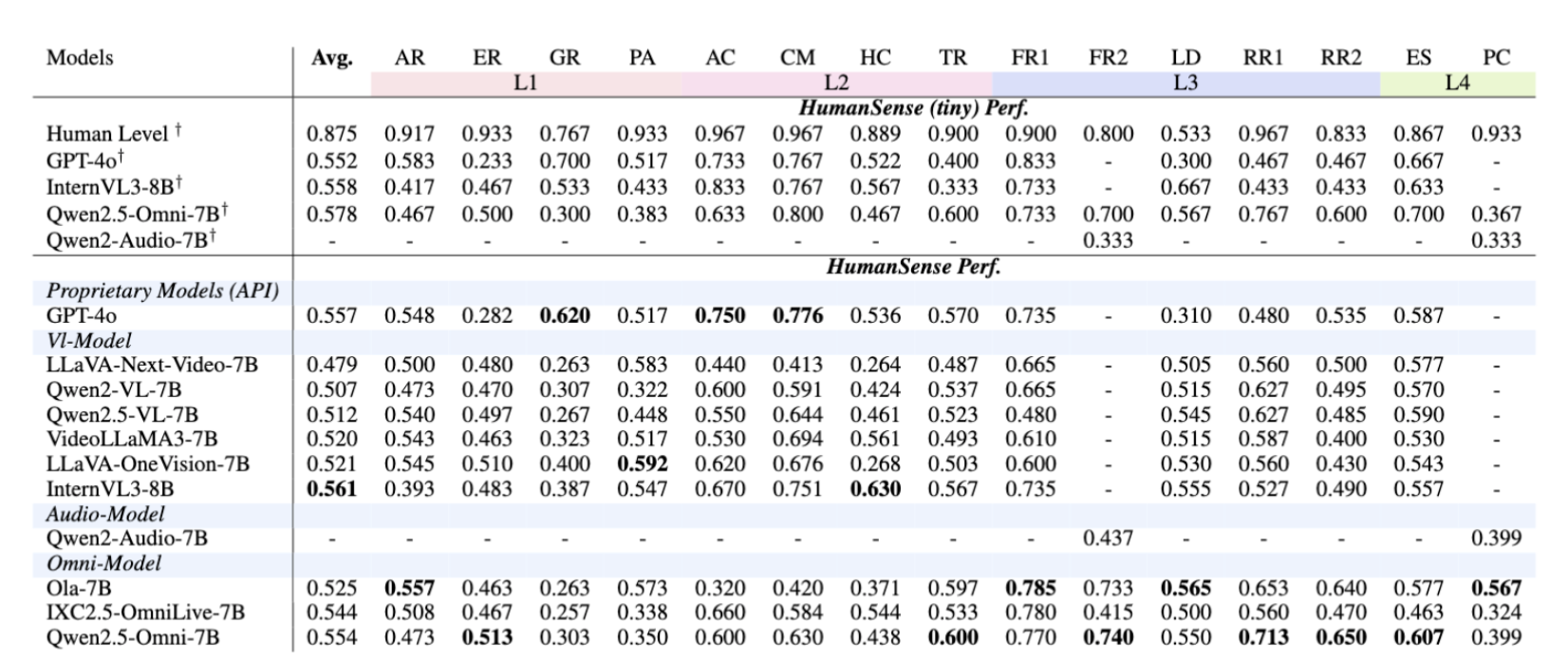

HumanSense Benchmark is a human perception evaluation benchmark dataset released in 2025 by Xi'an Jiaotong University in collaboration with Ant Group. The related research paper is titled "HumanSense: From Multimodal Perception to Empathetic Context-Aware Responses through Reasoning MLLMs". The benchmark aims to comprehensively measure a model's real-world interactive capabilities when it must fuse multimodal information such as vision, audio, and text.

The dataset contains 3,291 video-based questions and 591 audio-based questions, covering 15 tasks of increasing difficulty. The tasks are organized into a four-layer pyramid:

- L1–L2 Perception Layers: Fundamental and complex perceptual capabilities for vision, audio, and cross-modal perception;

- L3 Understanding Layer: The ability to understand implicit relationships, emotions, and states based on interactive situations;

- L4 Response Layer: Strategic and contextualized response capabilities in interactive scenarios.
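
As an illustration of how results could be grouped along this pyramid, here is a minimal Python sketch. It is not the benchmark's official evaluation code: the `Result` record, its field names, and the `accuracy_by_layer` helper are hypothetical, and the demo records are invented. Only the layer names (Perception for L1 and L2, Understanding for L3, Response for L4) come from the description above. The source does not say how the 15 tasks are split across the levels, so the sketch assumes nothing about that.

```python
from collections import defaultdict
from dataclasses import dataclass

# Layer names follow the description above; everything else is hypothetical.
LAYERS = {
    "L1": "Perception",
    "L2": "Perception",
    "L3": "Understanding",
    "L4": "Response",
}


@dataclass
class Result:
    level: str      # one of "L1", "L2", "L3", "L4"
    modality: str   # "video" or "audio"
    correct: bool   # whether the model answered the question correctly


def accuracy_by_layer(results):
    """Return {layer_name: accuracy} for the layers present in `results`."""
    hits = defaultdict(int)
    totals = defaultdict(int)
    for r in results:
        layer = LAYERS[r.level]
        totals[layer] += 1
        hits[layer] += int(r.correct)
    return {layer: hits[layer] / totals[layer] for layer in totals}


# Toy records, invented for illustration only (not real benchmark data).
demo = [
    Result("L1", "video", True),
    Result("L2", "audio", False),
    Result("L3", "video", True),
    Result("L4", "video", False),
]
print(accuracy_by_layer(demo))
```

Reporting accuracy per layer rather than as a single number makes it visible when a model does well on perception but degrades on the understanding and response layers.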

The questions are built from real videos, audio, and multimodal dialogues drawn from various open-source datasets and real-world scene recordings. They cover a wide range of human-centered interaction tasks, from appearance recognition and emotion recognition to relationship understanding and psychological dialogue. Among current multimodal evaluation benchmarks, it is one of those closest to real human communication scenarios.
