Command Palette

Search for a command to run...

VAP-Data Visual Action Performance Dataset

VAP-Data, released in 2025 by ByteDance in collaboration with the Chinese University of Hong Kong, is currently the largest semantically controlled video generation dataset. The related research paper is titled "Video-As-Prompt: Unified Semantic Control for Video GenerationThe goal is to provide high-quality training and evaluation benchmarks for controlled video generation, controlled motion synthesis, and multimodal video models.

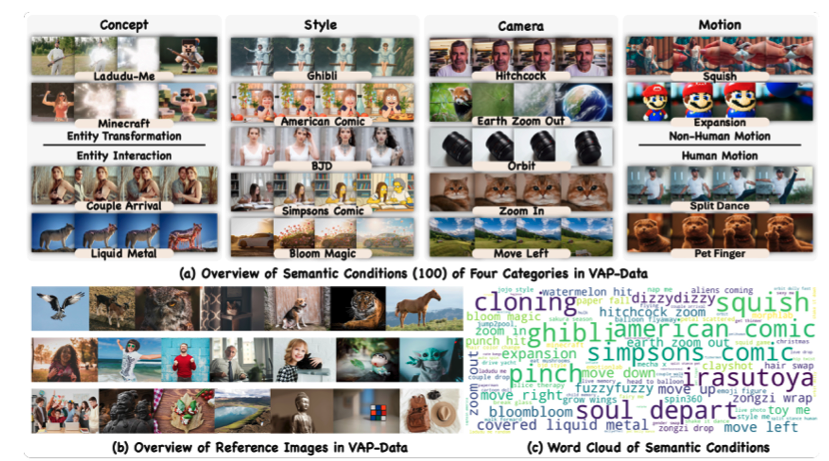

This dataset contains over 90,000 carefully curated paired samples, covering 100 fine-grained semantic conditions across four semantic categories: concept, style, action, and shot. Each semantic category includes multiple sets of mutually aligned video instances. The video content exhibits great diversity in lighting, perspective, scene, and dynamics, enabling the construction of cross-semantic, finely controlled video generation systems and providing a comprehensive evaluation environment for the model's controllability and generalization ability.

Build AI with AI

From idea to launch — accelerate your AI development with free AI co-coding, out-of-the-box environment and best price of GPUs.