3EED Language-Driven 3D Understanding Dataset

License: Apache 2.0

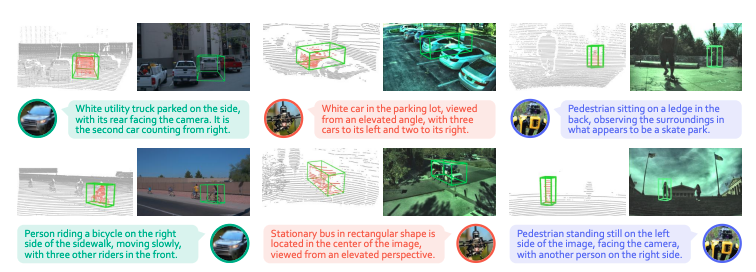

3EED is a multi-platform, multimodal 3D visual grounding dataset released in 2025 by the Hong Kong University of Science and Technology (Guangzhou) in collaboration with Nanyang Technological University and other institutions. The accompanying paper, "3EED: Ground Everything Everywhere in 3D", has been accepted at NeurIPS 2025. The dataset is designed to support language-driven 3D target localization in real-world outdoor scenes and to comprehensively evaluate models' cross-platform robustness and spatial understanding.

The dataset contains 20,367 time-aligned multimodal frames captured from three platforms: vehicles, drones, and quadruped robots. It provides 128,735 3D bounding boxes and 22,439 manually verified referring expressions, making it nearly 10 times larger than existing datasets of the same type. Its scenes span up to 280 m × 240 m × 80+ m, an order of magnitude larger than existing outdoor 3D referring datasets, which makes it well suited to studying 3D understanding at long range, across multiple scales, and from multiple viewpoints.
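The counts above imply roughly 6.3 annotated boxes and about 1.1 referring expressions per frame. The sketch below derives those averages from the published figures and pairs them with a minimal record for a grounding sample (one expression, one target box). The record layout is hypothetical: the 7-DoF box (center, size, yaw), the field names `platform`, `frame_id`, and `expression`, and the `GroundingSample` and `Box3D` classes are illustrative assumptions, not the official 3EED format.

```python
from dataclasses import dataclass

# Figures quoted in the dataset description.
NUM_FRAMES = 20_367
NUM_BOXES = 128_735
NUM_EXPRESSIONS = 22_439
# Scene extent upper bounds in metres (x, y, z); the height is given as "80+ m",
# so 80.0 is a lower estimate of the vertical extent.
SCENE_EXTENT_M = (280.0, 240.0, 80.0)


@dataclass
class Box3D:
    # Hypothetical 7-DoF box layout; NOT confirmed as the 3EED annotation format.
    cx: float
    cy: float
    cz: float
    length: float
    width: float
    height: float
    yaw: float  # rotation about the vertical axis, in radians


@dataclass
class GroundingSample:
    # Hypothetical record: one referring expression paired with its target box.
    platform: str  # assumed values: "vehicle", "drone", or "quadruped"
    frame_id: str
    expression: str
    target: Box3D


def summary() -> dict:
    """Average annotation density and bounding scene volume from the quoted counts."""
    x, y, z = SCENE_EXTENT_M
    return {
        "boxes_per_frame": NUM_BOXES / NUM_FRAMES,
        "expressions_per_frame": NUM_EXPRESSIONS / NUM_FRAMES,
        "max_scene_volume_m3": x * y * z,
    }


if __name__ == "__main__":
    stats = summary()
    print(f"{stats['boxes_per_frame']:.2f} boxes/frame, "
          f"{stats['expressions_per_frame']:.2f} expressions/frame")
    sample = GroundingSample(
        platform="drone",
        frame_id="frame_0001",  # hypothetical identifier
        expression="the red car parked next to the tall lamp post",
        target=Box3D(12.0, -3.5, 0.8, 4.5, 1.8, 1.5, 0.0),
    )
    print(sample.platform, sample.expression)
```

Keeping the expression and its target box in a single record mirrors the grounding task itself: a model reads `expression` and must predict `target`.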