The First Multimodal Astronomical Model, AION-1, Has Been Developed! Researchers From the University of California, Berkeley, and Other Institutions Have Built a Generalizable Multimodal Astronomical AI Framework Pre-Trained on 200 Million Astronomical Objects

Foundation models based on the Transformer architecture have profoundly changed fields such as natural language processing and computer vision, moving the technology from a customized "one model per task" paradigm to a new stage of generalization. However, these models face significant challenges when applied to scientific research. Scientific observations come from diverse sources, arrive in varying formats, and often contain observational noise, which makes the data highly heterogeneous. This puts scientific data analysis in a dilemma: processing only a single data type makes it difficult to fully exploit the data's value, while relying on hand-designed cross-modal fusion schemes makes it difficult to adapt flexibly to diverse observation scenarios.

Among scientific fields, astronomy provides an ideal testing ground for such models. Its massive volume of publicly available observational data supplies ample "nutrients" for model training, and its observational methods are extremely diverse, covering approaches such as galaxy imaging, stellar spectroscopy, and photometry. This multidimensional data naturally suits the development of multimodal techniques.

Some studies have already attempted to build multimodal astronomical models, but they still have obvious limitations: most focus on single phenomena such as supernovae and rely on contrastive objectives as their core technique, which makes it difficult for the models to handle arbitrary combinations of modalities or to capture scientific information across modalities beyond shallow correlations.

To overcome this bottleneck, teams from more than ten research institutions worldwide, including the University of California, Berkeley, the University of Cambridge, and the University of Oxford, jointly launched AION-1 (Astronomical Omni-modal Network), the first large-scale family of multimodal foundation models for astronomy. By integrating heterogeneous observations such as images, spectra, and catalog data through a unified early-fusion backbone, AION-1 performs well in zero-shot settings, and its linear probing accuracy is comparable to that of models trained specifically for individual tasks.

The research findings, titled "AION-1: Omnimodal Foundation Model for Astronomical Sciences," have been accepted at NeurIPS 2025.

Research highlights:

* The study proposes the AION-1 model family, a series of token-based multimodal scientific foundation models with 300 million to 3.1 billion parameters, designed specifically to process highly heterogeneous astronomical observations and to support arbitrary combinations of modalities.

* A customized tokenization method was developed that can transform astronomical data from diverse sources and in different formats into a unified representation, constructing a single, coherent data corpus, effectively overcoming common problems in scientific data such as heterogeneity, instrument noise, and source differences.

* AION-1 performs exceptionally well across a wide range of scientific tasks. Even with simple forward probing, its performance reaches state-of-the-art (SOTA) levels, significantly outperforming supervised baselines in low-data scenarios. This characteristic allows downstream researchers to use it directly and efficiently without complex fine-tuning.

* AION-1 provides a feasible multimodal modeling paradigm for astronomy and other scientific fields by systematically addressing core challenges such as data heterogeneity, noise, and instrument diversity.

Paper address:

https://openreview.net/forum?id=6gJ2ZykQ5W

AION-1 Pre-training Cornerstone: MMU Dataset and Tokenization Scheme for Multi-type Astronomical Data

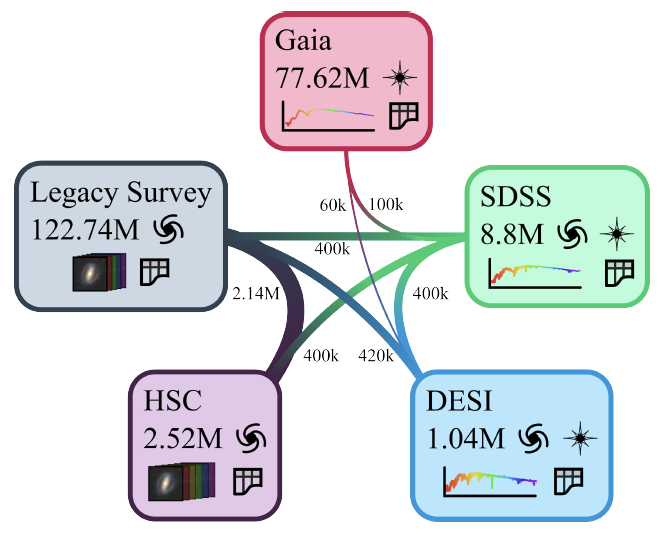

AION-1's pre-training is based on the Multimodal Universe (MMU) dataset. As shown in the figure above, MMU is a collection of publicly available astronomical data built specifically for machine learning, integrating diverse observations from five major sky surveys.

Specifically, it includes galaxy images from the Hyper Suprime-Cam (HSC) survey and the Legacy Imaging Surveys; high-resolution spectra and the corresponding redshifts (distance information) of celestial objects from the Dark Energy Spectroscopic Instrument (DESI) and the Sloan Digital Sky Survey (SDSS); and low-resolution spectra from the Gaia satellite, together with high-precision photometric and astrometric (position) data for stars in the Milky Way.

To process these multi-source, multi-format data in a unified way, AION-1 proposes a universal tokenization scheme that converts images, spectra, numerical values, and other forms of astronomical data into a unified token representation that the model can process, addressing the core challenge of diverse sources and formats. The scheme uses a dedicated tokenizer for each data type that adapts to the outputs of different instruments, keeps data of the same type semantically aligned, and avoids training a separate model for each source of the same data type.

For multiband imaging data, the image tokenizer uses a flexible channel embedding to handle differences in resolution, channel count, wavelength coverage, and noise level among galaxy images. This design accepts inputs with varying numbers of channels and encodes information such as the originating telescope into the representation. Its backbone is a modified ResNet combined with finite scalar quantization (FSQ), so that a single model can process image data from multiple observation pipelines. During training, a noise-weighted loss exploits the known noise of the imaging process to improve reconstruction quality.
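The quantization step of the image tokenizer can be illustrated with finite scalar quantization (FSQ), which we assume is the technique meant here. The sketch below is a simplified, hypothetical version that works on a single latent vector in pure Python: each dimension is squashed with tanh into a bounded range and rounded to one of a fixed number of levels, and the per-dimension codes are then packed into one integer token. The level counts are illustrative assumptions; in the real tokenizer this would be applied to ResNet encoder outputs at every spatial position.

```python
import math


def fsq_quantize(z, levels):
    """Finite scalar quantization (FSQ) of one latent vector.

    Each dimension is squashed with tanh into (-(L-1)/2, (L-1)/2) and rounded,
    so dimension i can take exactly levels[i] integer values. Only odd level
    counts are supported in this simplified sketch.
    """
    if len(z) != len(levels):
        raise ValueError("z and levels must have the same length")
    codes = []
    for value, n_levels in zip(z, levels):
        if n_levels % 2 == 0:
            raise ValueError("this sketch only supports odd level counts")
        half = (n_levels - 1) / 2
        # floor(x + 0.5) rounds half up, avoiding Python's banker's rounding
        codes.append(math.floor(math.tanh(value) * half + 0.5))
    return codes


def fsq_token_id(codes, levels):
    """Pack per-dimension codes into a single integer token (mixed radix)."""
    token, base = 0, 1
    for code, n_levels in zip(codes, levels):
        digit = code + (n_levels - 1) // 2  # shift from [-h, h] to [0, L-1]
        token += digit * base
        base *= n_levels
    return token


# Hypothetical setting: 3 latent dimensions with 5 levels each -> 125 tokens.
levels = [5, 5, 5]
codes = fsq_quantize([0.0, 10.0, -10.0], levels)
print(codes, fsq_token_id(codes, levels))  # prints [0, 2, -2] 22
```

Because the codebook is implicit (the product of the level counts), FSQ needs no learned codebook and cannot suffer from unused codebook entries, which is one reason it is attractive for tokenizers.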

For spectral data, differences in signal strength, wavelength range, and resolution between instruments are handled by normalizing each spectrum and mapping it onto a shared wavelength grid, which enables joint processing of spectra from multiple instruments and targets. This tokenizer is based on the ConvNeXt V2 architecture, uses a quantization technique that requires no predefined codebook, and likewise uses a noise-weighted loss to account for the noise characteristics of different surveys.
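The two preprocessing ideas in the spectral pipeline, resampling onto a shared wavelength grid and weighting the reconstruction loss by noise, can be sketched as follows. This is a minimal, hypothetical illustration: linear interpolation, the zero fill value outside an instrument's coverage, and mean-absolute-flux normalization are assumptions, and the loss uses inverse variance (1/sigma²) as the noise weight, a common convention in astronomy rather than a confirmed detail of AION-1.

```python
import bisect


def resample_to_grid(wavelengths, flux, grid, fill=0.0):
    """Linearly interpolate a spectrum onto a shared wavelength grid.

    `wavelengths` must be sorted in ascending order. Grid points outside the
    observed range get `fill` (a hypothetical "no coverage" value).
    """
    out = []
    for w in grid:
        if w < wavelengths[0] or w > wavelengths[-1]:
            out.append(fill)
            continue
        i = bisect.bisect_left(wavelengths, w)
        if wavelengths[i] == w:  # grid point coincides with a sample
            out.append(flux[i])
            continue
        x0, x1 = wavelengths[i - 1], wavelengths[i]
        t = (w - x0) / (x1 - x0)
        out.append(flux[i - 1] + t * (flux[i] - flux[i - 1]))
    return out


def normalize(flux):
    """Divide by the mean absolute flux (assumed normalization scheme)."""
    scale = sum(abs(f) for f in flux) / len(flux)
    return [f / scale for f in flux] if scale > 0 else list(flux)


def noise_weighted_mse(target, recon, ivar):
    """Inverse-variance weighted squared error.

    ivar[i] = 1 / sigma_i**2 from the survey's noise model; pixels with
    ivar = 0 (masked or missing) do not contribute.
    """
    total = sum(iv * (t - r) ** 2 for t, r, iv in zip(target, recon, ivar))
    return total / len(target)


grid = [3500.0, 4500.0, 5000.0, 6000.0, 6500.0]
print(resample_to_grid([4000.0, 5000.0, 6000.0], [1.0, 3.0, 5.0], grid))
```

With a shared grid, spectra from SDSS, DESI, or other instruments become vectors of the same length, and the inverse-variance weights let noisy or unobserved pixels contribute less (or nothing) to the loss.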

For tabular/scalar data, AION-1 abandons traditional continuous representations, which struggle with large numerical ranges, and instead uses a piecewise discretization based on statistics of the data distribution. This makes the distribution of token values more uniform and minimizes quantization error in the regions where the data are concentrated.
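One plausible reading of "piecewise discretization based on data distribution statistics" is quantile (equal-frequency) binning: bin edges are placed at empirical quantiles so that each token covers roughly the same number of training values, and dense regions get narrow bins. The sketch below is an assumption along those lines, not the paper's exact scheme; the number of bins and decoding each token to the median value in its bin are illustrative choices.

```python
import bisect
import statistics


def quantile_edges(values, n_bins):
    """Place bin edges at empirical quantiles so every bin holds ~equal counts."""
    s = sorted(values)
    n = len(s)
    return [s[min(n - 1, (k * n) // n_bins)] for k in range(1, n_bins)]


def encode(value, edges):
    """Map a scalar to a token index in 0..len(edges)."""
    return bisect.bisect_right(edges, value)


def bin_centers(values, edges):
    """Decode table: the median training value that falls in each bin."""
    buckets = [[] for _ in range(len(edges) + 1)]
    for v in values:
        buckets[encode(v, edges)].append(v)
    return [statistics.median(b) if b else float("nan") for b in buckets]


# Heavy-tailed toy data spanning about ten orders of magnitude.
values = [10 ** (i / 10) for i in range(100)]
edges = quantile_edges(values, 4)
counts = [sum(1 for v in values if encode(v, edges) == k) for k in range(4)]
print(counts)  # [25, 25, 25, 25]
```

Equal-width bins over the same range would put almost all of these values into the first bin, which is the failure mode of naive uniform discretization for quantities with large dynamic range.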

In addition to standard photometric images, the model also features a dedicated tokenizer for spatially distributed numerical field data such as segmentation maps and property maps. It is suitable for normalized images with values ranging from 0 to 1, is built upon a convolutional network, employs a quantization technique similar to that used in image tokenizers, and is trained using grayscale galaxy images and their corresponding segmentation maps.

For the elliptical bounding boxes used to locate celestial objects, each target is described by five parameters covering its position coordinates, ellipse shape, and size. Tokenization maps the coordinates to the nearest pixel and quantizes the ellipse attributes. Because the number of targets per image varies, all detected targets are converted into a sequence sorted by distance from the image center, which yields a unified, standardized representation.
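The bounding-ellipse tokenization described above can be sketched as follows. The specific five parameters used here (pixel x and y, two shape components e1 and e2 in [-1, 1], and a radius), the number of quantization levels, and the maximum radius are hypothetical choices for illustration; the text only states that positions are snapped to the nearest pixel, ellipse attributes are quantized, and targets are ordered by distance from the image center.

```python
import math
from dataclasses import dataclass


@dataclass
class Ellipse:
    # Hypothetical 5-parameter description: pixel position, two shape
    # components and a size. The exact parametrization is an assumption.
    x: float
    y: float
    e1: float
    e2: float
    radius: float


def quantize(v, lo, hi, n_levels):
    """Clip v to [lo, hi] and map it uniformly to an integer in 0..n_levels-1."""
    v = min(max(v, lo), hi)
    return math.floor((v - lo) / (hi - lo) * (n_levels - 1) + 0.5)


def nearest_pixel(v, size):
    """Snap a coordinate to the nearest pixel index inside the image."""
    return min(max(math.floor(v + 0.5), 0), size - 1)


def tokenize_ellipses(ellipses, image_size, n_levels=64, max_radius=20.0):
    """Sort targets by distance to the image center, then quantize each one."""
    c = (image_size - 1) / 2  # pixel-center convention (assumed)
    ordered = sorted(ellipses, key=lambda e: math.hypot(e.x - c, e.y - c))
    return [
        (
            nearest_pixel(e.x, image_size),
            nearest_pixel(e.y, image_size),
            quantize(e.e1, -1.0, 1.0, n_levels),
            quantize(e.e2, -1.0, 1.0, n_levels),
            quantize(e.radius, 0.0, max_radius, n_levels),
        )
        for e in ordered
    ]


objs = [Ellipse(10.2, 10.7, 1.0, -1.0, 25.0), Ellipse(32.4, 30.6, 0.0, 0.0, 10.0)]
print(tokenize_ellipses(objs, image_size=64))
```

Sorting by distance to the center gives a deterministic order, so the same set of detections always yields the same token sequence, and the central (usually primary) object comes first.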

AION-1: A Multimodal Foundation Model for Astronomical Sciences

AION-1's architecture follows current mainstream early-fusion multimodal models and specifically adopts the scalable multimodal masked modeling scheme proposed by 4M (a multimodal training framework developed by Apple and EPFL). The core idea is as follows: after all data types are converted into a unified token representation, part of the content is randomly masked, and the model learns to recover the masked portion. In this way, the model automatically discovers the inherent relationships between different forms of data, such as images, spectra, and numerical values.

Specifically, each training sample contains M token sequences of different data types. During training, the model randomly selects two subsets of tokens: one as the input and the other as the target to be reconstructed. Because both subsets are drawn at random from all of the sample's data types, the model learns both the characteristics of each data type and the correspondences between data types.
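The random input/target split at the heart of masked multimodal modeling can be sketched in a few lines. This is a simplified, hypothetical illustration: tokens from all modalities are pooled, tagged with their modality and position, shuffled, and divided into disjoint input and target sets. The token ids and modality names are made up, and AION-1's actual sampling rule is more structured (see the budget-based strategy described later in this section).

```python
import random


def split_input_target(sample, n_input, n_target, rng):
    """Randomly split a multimodal sample into disjoint input and target tokens.

    `sample` maps a modality name to its token list. Tokens are tagged with
    (modality, position, token) so the split can mix modalities freely.
    """
    pool = [(m, pos, tok) for m, toks in sample.items() for pos, tok in enumerate(toks)]
    if n_input + n_target > len(pool):
        raise ValueError("not enough tokens for the requested split")
    rng.shuffle(pool)
    return pool[:n_input], pool[n_input:n_input + n_target]


# Toy sample with hypothetical token ids for three modalities.
sample = {"image": [5, 9, 2, 7], "spectrum": [11, 3, 8], "redshift": [42]}
inputs, targets = split_input_target(sample, n_input=3, n_target=2, rng=random.Random(0))
# The model would see `inputs` and be trained to predict the tokens in `targets`.
```

Because the split ignores modality boundaries, some training steps ask the model to predict a spectrum token from image tokens, others a redshift token from spectrum tokens, and so on, which is how cross-modal relationships are learned.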

In terms of implementation, as shown in the figure below, AION-1 uses a Transformer encoder-decoder architecture designed for multitask learning. Beyond the standard encoder and decoder, its key innovation is a dedicated embedding mechanism for each data type: each type has its own embedding function, a learnable type identifier, and learnable positional parameters.

Notably, the model assigns a unique type identifier to each combination of data type and data source. Even within the same data type, such as images, data from different observing instruments receive different identifiers. This lets the model recognize where the data came from, which often implicitly carries important attributes such as data quality and resolution.
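The embedding scheme above, in which the type identifier is keyed by the combination of data type and source, can be sketched as a sum of token, type, and position embeddings. This is a deliberately simplified, hypothetical illustration: a real model would use a separate learned projection per modality and train every table by backpropagation, whereas here a single token table is shared and all tables are just randomly initialized lists of floats. The names `TypedEmbedding`, "HSC", and "LegacySurvey" are illustrative.

```python
import random


class TypedEmbedding:
    """Token + type + position embedding, with type keyed by (modality, source).

    Simplified, hypothetical sketch: one shared token table instead of
    per-modality projections, and random (untrained) values.
    """

    def __init__(self, dim, vocab_size, max_len, seed=0):
        self._rng = random.Random(seed)
        self.dim = dim
        self.token_table = [self._new_vec() for _ in range(vocab_size)]
        self.pos_table = [self._new_vec() for _ in range(max_len)]
        self.type_table = {}  # (modality, source) -> learnable vector

    def _new_vec(self):
        return [self._rng.gauss(0.0, 0.02) for _ in range(self.dim)]

    def type_vector(self, modality, source):
        key = (modality, source)
        if key not in self.type_table:  # first time this pair is seen
            self.type_table[key] = self._new_vec()
        return self.type_table[key]

    def embed(self, tokens, modality, source):
        t = self.type_vector(modality, source)
        return [
            [a + b + c for a, b, c in zip(self.token_table[tok], t, self.pos_table[i])]
            for i, tok in enumerate(tokens)
        ]


emb = TypedEmbedding(dim=8, vocab_size=100, max_len=16)
hsc = emb.embed([1, 2, 3], "image", "HSC")
legacy = emb.embed([1, 2, 3], "image", "LegacySurvey")
# Same tokens, different sources -> different embeddings.
```

Keying the type vector on the (modality, source) pair is what lets identical image tokens from two surveys remain distinguishable to the Transformer.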

Training efficiency depends heavily on how the masked content is selected. The researchers found that the sampling method of the original 4M model performed poorly on sequences of varying lengths and easily generated many ineffective training samples. AION-1 therefore adopts a simpler, more efficient strategy. To choose the input tokens, a total budget is set first; one data type is then selected at random and a portion of its tokens is drawn, and any remaining budget is filled with tokens from the other data types. To choose the targets, the number of data types to reconstruct is drawn from a distribution biased toward small values. This lowers the computational cost per training sample while keeping training consistent with real-world usage.
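The budget-based input selection and the small-biased choice of target count described above can be sketched as follows. This is a hypothetical reading of the text: how many tokens are drawn from the first modality (uniform here) and the truncated geometric distribution for the number of target modalities are assumptions, since the exact distributions are not given.

```python
import random


def sample_inputs(sample, budget, rng):
    """Pick input token positions under a total budget.

    Hypothetical reading of the strategy: choose one modality at random,
    take a random number of its tokens, then fill the remaining budget with
    tokens drawn from the other modalities.
    """
    modalities = [m for m, toks in sample.items() if toks]
    first = rng.choice(modalities)
    first_pos = list(range(len(sample[first])))
    rng.shuffle(first_pos)
    k = rng.randint(1, min(budget, len(first_pos)))
    chosen = [(first, p) for p in first_pos[:k]]
    others = [(m, p) for m in modalities if m != first for p in range(len(sample[m]))]
    rng.shuffle(others)
    return chosen + others[: budget - k]


def sample_num_targets(n_modalities, rng, p_more=0.5):
    """Number of modalities to reconstruct, biased toward small values.

    A truncated geometric distribution is assumed here.
    """
    n = 1
    while n < n_modalities and rng.random() < p_more:
        n += 1
    return n


rng = random.Random(0)
sample = {"image": list(range(20)), "spectrum": list(range(10)), "redshift": [0]}
chosen = sample_inputs(sample, budget=12, rng=rng)
n_targets = sample_num_targets(len(sample), rng)
```

With `p_more=0.5` and three modalities, one target modality is drawn half the time, so most training samples ask the model to reconstruct only a little, which keeps the per-sample decoding cost low.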

To evaluate performance comprehensively, the team trained three versions of AION-1: a Base version (300 million parameters), a Large version (800 million parameters), and an XLarge version (3.1 billion parameters). Training used the AdamW optimizer for a total of 205,000 steps, with a learning-rate schedule that first increases (warmup) and then decays. As shown in the figure below, the study compares the effects of model size and of including Gaia data on performance, which provides a reference for model selection.
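A learning-rate schedule that "first increases, then decreases" is commonly implemented as linear warmup followed by cosine decay. The function below sketches that shape over the 205,000 training steps reported in the text; the peak learning rate, warmup length, final learning rate, and the cosine form itself are hypothetical placeholders, not values from the paper.

```python
import math

TOTAL_STEPS = 205_000  # total training steps reported in the text


def learning_rate(step, peak_lr=2e-4, warmup_steps=2_000, min_lr=0.0,
                  total_steps=TOTAL_STEPS):
    """Linear warmup followed by cosine decay.

    peak_lr, warmup_steps, min_lr and the cosine shape are hypothetical
    placeholders; the text only says the rate first increases, then decreases.
    """
    if step < warmup_steps:
        return peak_lr * (step + 1) / warmup_steps
    progress = min(1.0, (step - warmup_steps) / max(1, total_steps - warmup_steps))
    return min_lr + 0.5 * (peak_lr - min_lr) * (1.0 + math.cos(math.pi * progress))


for s in (0, 1_000, 2_000, 100_000, TOTAL_STEPS):
    print(s, learning_rate(s))
```

Warmup avoids large, unstable updates while AdamW's moment estimates are still noisy, and the slow decay lets the model settle into a good minimum toward the end of training.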

Once trained, AION-1 supports a variety of practical generation tasks, from data completion to cross-instrument data translation. Its core advantage is that it models the joint relationships among all data types, so it can generate samples of other data types with consistent physical properties even from partial observations.

Experimental results: 16-fold improvement in redshift accuracy, a significant breakthrough for multimodal astronomical AI

AION-1's breakthrough lies in its ability to produce general-purpose, physically meaningful representations that are not tied to any particular data type, without relying on complex supervised pipelines designed for specific tasks. Building on this, the model performs well in two key scenarios: cross-modal generation and scalar posterior estimation.

For cross-modal generation, AION-1 supports conditional generation of high-dimensional data, enabling cross-instrument data conversion and observation-quality enhancement. The most representative application is generating high-resolution DESI spectra from low-resolution Gaia data: as shown in the figure below, although the Gaia spectra are 50 to 100 times sparser, the model still accurately reconstructs the centers, widths, and amplitudes of spectral lines. This makes detailed astronomical analysis possible using widely available low-resolution data, which matters for reducing research costs and improving data utilization.

For parameter estimation, AION-1 can directly infer the posterior distribution of quantized scalar values. Taking redshift estimation as an example, as shown in the figure below, the results for a typical galaxy under three conditions of increasing information content are as follows: the distribution is relatively broad when only basic photometric data are used, narrows significantly after multiband imaging is added, and becomes markedly more accurate once high-resolution spectra are also included. This indicates that the model effectively integrates multi-source information to refine its estimates.
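Because scalars such as redshift are tokenized into discrete bins, a prediction can be read as a categorical distribution over those bins, and a point estimate and uncertainty follow directly. The sketch below assumes the decoder outputs one logit per bin of the scalar tokenizer and that each bin is represented by a center value; the bin centers and logits are made-up numbers chosen to mimic a broad versus a sharp posterior.

```python
import math


def softmax(logits):
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in logits]
    s = sum(exps)
    return [e / s for e in exps]


def posterior_summary(bin_centers, logits):
    """Posterior mean and standard deviation of a scalar from per-bin logits."""
    probs = softmax(logits)
    mean = sum(c * p for c, p in zip(bin_centers, probs))
    var = sum(p * (c - mean) ** 2 for c, p in zip(bin_centers, probs))
    return mean, math.sqrt(var)


# Hypothetical redshift bins and logits.
centers = [0.1, 0.2, 0.3]
broad = posterior_summary(centers, [0.0, 0.0, 0.0])  # little information
sharp = posterior_summary(centers, [0.0, 5.0, 0.0])  # more information
print(broad, sharp)
```

Adding observations (imaging, then spectra) corresponds to logits that concentrate on fewer bins, which shows up as a smaller posterior standard deviation around the same mean.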

To verify the model's capabilities, the research team also conducted experiments in four directions:

* Physical Property Estimation

For parameters such as stellar mass and surface temperature, which typically require high-resolution observations to derive, this study explores the use of AION-1 to estimate them directly from low-resolution data. Tests on 120,000 galaxy samples show that it outperforms or matches dedicated supervised models; in tests on 240,000 stellar samples, it even surpasses specially optimized baseline models in the task of "predicting high-resolution parameters based on Gaia low-resolution data".

* Semantic learning based on expert annotations (Learning from Semantic Human Labels)

In the galaxy morphology classification task (8,000 labeled samples), AION-1's accuracy not only surpasses that of dedicated models trained from scratch, but also rivals that of state-of-the-art models trained with tens of times more labeled data. In the galaxy structure semantic segmentation task (2,800 samples), its generated results are highly consistent with human-labeled data, and its performance exceeds that of simple fully convolutional baselines.

* Performance in Low-Data Regime

Addressing the common problem of scarce annotations in astronomical research, experiments show that AION-1 has a more significant advantage when data is limited, and its performance can match or even surpass supervised models that require an order of magnitude more training data.

* Similarity-Based Retrieval

For rare objects such as strong gravitational lenses (roughly 0.1%), which also lack sufficient annotations, AION-1 performs similarity-based retrieval in its embedding space and outperforms other advanced self-supervised models on three categories of targets: spiral galaxies, merging galaxies, and strong-lens candidates.
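Similarity-based retrieval of this kind is typically done by embedding every object once and ranking the catalog by cosine similarity to a query object's embedding. The sketch below shows that ranking step with toy two-dimensional vectors; in practice the vectors would be model embeddings (for example, pooled encoder outputs, an assumption here) and a large catalog would use an approximate nearest-neighbor index rather than a full sort.

```python
import math


def cosine_similarity(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(y * y for y in b))
    return dot / (na * nb) if na and nb else 0.0


def top_k_similar(query, bank, k):
    """Indices of the k embeddings in `bank` most similar to `query`."""
    scored = sorted(((cosine_similarity(query, v), i) for i, v in enumerate(bank)),
                    reverse=True)
    return [i for _, i in scored[:k]]


# Toy 2-D "embeddings"; in practice these would be model outputs per object.
bank = [[1.0, 0.0], [0.0, 1.0], [0.9, 0.1], [-1.0, 0.0]]
print(top_k_similar([1.0, 0.0], bank, k=2))  # [0, 2]
```

Starting from one confirmed strong lens as the query, the top-ranked neighbors form a short candidate list for visual inspection, which is far cheaper than labeling the whole catalog when the target class is this rare.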

These experimental results collectively demonstrate that AION-1 provides a unified and efficient solution for multimodal astronomical data analysis, especially showing significant advantages in scenarios with scarce data and cross-modal inference.

Multimodal AI empowers astronomical research: a collaborative breakthrough between academia and industry.

In recent years, "multimodal AI-driven astronomical research" has become a focus of attention for the global academic and industrial communities, and a series of groundbreaking achievements are reshaping the processing and application of astronomical data.

In academia, researchers are working to tie multimodal fusion capabilities closely to specific astronomical problems. For example, under the space exploration plan announced by the MIT Media Lab in 2025, multimodal AI has been combined with extended reality technology to develop an intelligent analysis system for lunar habitation missions. The system integrates multi-source information such as satellite remote sensing images, environmental sensor readings, and equipment operating status to provide real-time decision support for resource management and risk warning in a simulated lunar base.

Meanwhile, a research team from the University of Oxford and other institutions has developed a deep learning-based screening tool that accurately identifies genuine supernova signals among thousands of alerts, reducing the amount of data astronomers must inspect by approximately 85%. This virtual research assistant needs only 15,000 training samples and the computing power of an ordinary laptop to train. It turns a routine manual screening process into an automated workflow, and the final model maintains high accuracy while keeping the false alarm rate at around 1%, significantly improving research efficiency.

Paper Title: The ATLAS Virtual Research Assistant

Paper link: https://iopscience.iop.org/article/10.3847/1538-4357/adf2a1

Industry is driving the practical deployment of multimodal AI in astronomy through productization. In 2024, NVIDIA partnered with the European Southern Observatory (ESO) to integrate its AI inference optimization technology into the spectral data processing workflow of the Very Large Telescope. By using TensorRT to accelerate multimodal fusion models, the efficiency of spectral classification of distant galaxies improved by up to three times.

In 2025, IBM further collaborated with ESO to optimize the scheduling system of the VLT (Very Large Telescope) using multimodal AI. By integrating diverse information such as weather forecasts, changes in the brightness of celestial objects, and equipment load, the system can dynamically adjust observation plans, increasing the success rate of capturing time-domain targets such as variable stars by 30%.

Furthermore, Google DeepMind collaborated with LIGO and GSSI to propose a control method called "Deep Loop Shaping" to improve the control accuracy of gravitational wave detectors. The controller was validated on the real LIGO system, and its measured performance closely matched simulation results. Compared with the original system, the new technique reduces control noise by a factor of 30 to 100 and, for the first time, completely eliminates the most unstable and hardest-to-suppress feedback-loop noise source in the system.

Paper Title: Improving cosmological reach of a gravitational wave observatory using Deep Loop Shaping

Paper address: https://www.science.org/doi/10.1126/science.adw1291

It is evident that constructing multimodal general representations has become a clear trend in the intersection of astronomy and artificial intelligence. Academia is continuously refining the core technology by delving into scientific questions, while industry is leveraging its engineering capabilities to drive the implementation and large-scale application of the technology. This collaborative advancement is gradually breaking down the traditional constraints of high-cost observations and complex data processing on astronomical research, enabling more researchers to explore the deeper mysteries of the universe with the help of artificial intelligence.

Reference Links:

1. https://www.media.mit.edu/groups/space-exploration/updates/

2. https://www.eso.org/public/news/eso2408/

3. https://www.eso.org/public/news/eso2502/