Stanford, Peking University, UCL, and UC Berkeley Collaborate to Use a CNN to Identify Seven Rare Quasar Lens Candidates Among 810,000 Quasars

Einstein's groundbreaking theory of general relativity, proposed in 1915, revealed that mass not only generates gravity but also warps the spacetime around it, causing the motion of light and matter to follow curved spacetime paths. Therefore, massive celestial bodies act like natural lenses, deflecting light rays passing nearby.

In modern astronomy, strong gravitational lensing is a crucial tool for studying the large-scale structure of the universe and the co-evolution of black holes and galaxies. Quasars acting as strong gravitational lenses provide extremely rare opportunities to study how the scaling relations between supermassive black holes and their host galaxies (especially the MBH–Mhost relation) evolve with redshift. With this powerful probe, the mass of the host galaxy can be accurately inferred from the Einstein radius θE. However, quasar lenses are extremely rare, and identifying them has always been a huge challenge for astronomers: among the nearly 300,000 quasars cataloged in the Sloan Digital Sky Survey (SDSS), only 12 candidates were found, and only 3 were ultimately confirmed.
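
For reference, the link between the Einstein radius and the lens mass can be written in its standard textbook form for a circularly symmetric lens. This is the general relation, not a formula quoted from the paper; the symbols $D_l$, $D_s$, and $D_{ls}$ (angular diameter distances to the lens, to the source, and between the two) are notation introduced here.

```latex
\theta_E = \sqrt{\frac{4 G\, M(<\theta_E)}{c^2}\,\frac{D_{ls}}{D_l D_s}}
\quad\Longleftrightarrow\quad
M(<\theta_E) = \frac{c^2}{4G}\,\frac{D_l D_s}{D_{ls}}\,\theta_E^2
```

Once the lens and source redshifts fix the distances, a measured θE therefore gives the mass enclosed within the Einstein radius directly.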

Against this backdrop, a team from numerous research institutions, including Stanford University, SLAC National Accelerator Laboratory, Peking University, Brera Observatory of the Italian National Institute for Astrophysics, University College London, and the University of California, Berkeley, has significantly expanded this originally tiny sample by using innovative machine learning methods and data from the Dark Energy Spectroscopic Instrument (DESI).

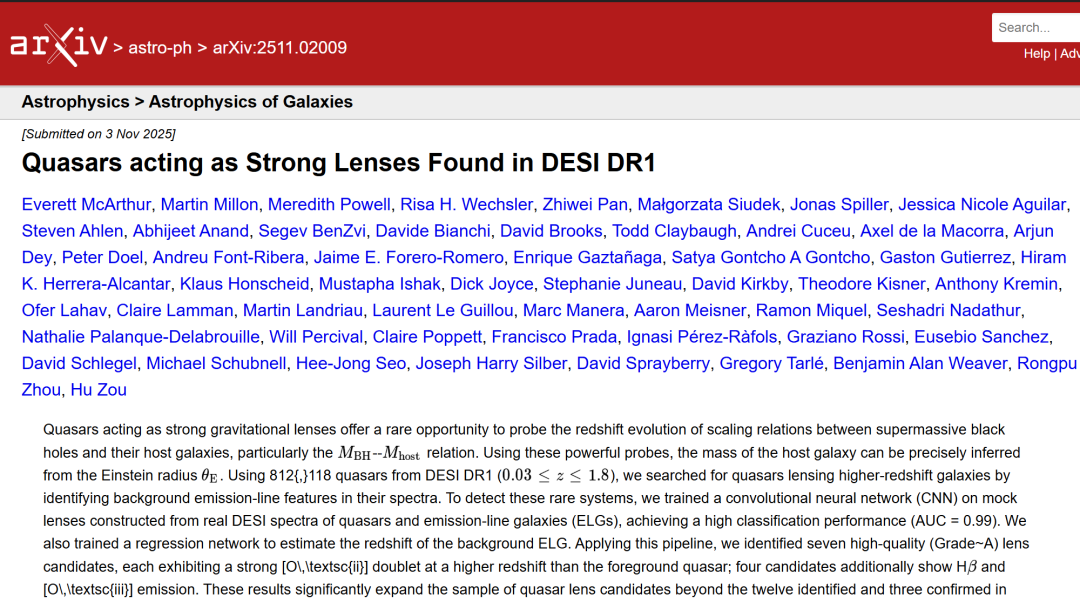

The research team developed a data-driven workflow for identifying quasars that act as strong gravitational lenses in the spectroscopic data of DESI DR1, covering the redshift range 0.03 ≤ z ≤ 1.8. The method uses a convolutional neural network (CNN) trained on realistic simulated lenses constructed from real DESI QSO and ELG spectra. Applying this workflow, the researchers identified seven high-quality (Grade A) quasar lens candidates, all exhibiting strong [OII] doublet emission at a redshift higher than that of the foreground quasar; four of these candidates also exhibit Hβ, [OIII] λ4959 Å, and [OIII] λ5007 Å emission lines.

Note: DESI DR1 is the first spectroscopic data release of the Dark Energy Spectroscopic Instrument (DESI).

The findings, titled "Quasars acting as Strong Lenses Found in DESI DR1", have been published as a preprint on arXiv.

Research highlights:

* Expanded the sample of quasar lenses identified in previous studies (only 12 candidates, 3 of which were confirmed).

* This opens the door to establishing the first statistical sample of QSO strong lensing and demonstrates the potential of data-driven methods for large-scale identification of such rare systems.

The resulting sample will provide a powerful new avenue for studying the co-evolution of black holes and galaxies, anchoring scaling relations through direct mass measurements across cosmic time.

Paper address:

https://arxiv.org/abs/2511.02009

Dataset: 812,118 quasars selected from DESI DR1

The first DESI data release provides approximately 1.8 million quasar spectra covering a wide range of redshifts. This study selected 812,118 quasars from the HEALPix redshift catalog of the DESI DR1 main survey, using only the "dark time" program. The "bright time" program was excluded because moonlight increases the noise in the blue camera and degrades the spectra.

Researchers used the output of Redrock, which includes each quasar's TARGETID, redshift (z), and redshift error (Guy et al. 2023). Only objects with OBJTYPE = TGT and ZCAT_PRIMARY = 1 were selected, which excludes sky targets and spectra that are not the primary observation of an object or for which the redshift fit failed. The sample was then further restricted to objects with ZWARN = 0 and SPECTYPE = QSO, which excludes objects that might be labeled as quasars but received a different spectral classification. This filtering ensures reliable redshifts and guarantees that the training samples come only from quasar spectra with no anomalies in the redshift calculation.
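
The selection cuts described above can be sketched as a simple row filter. This is a minimal illustration, not the authors' code: the rows are made-up dictionaries rather than a real DESI catalog table, the column spelling `ZCAT_PRIMARY` and the column name `Z` are assumptions, and the redshift limits are the 0.03 ≤ z ≤ 1.8 range quoted earlier in the article.

```python
# Redshift range quoted for the search (0.03 <= z <= 1.8).
Z_MIN, Z_MAX = 0.03, 1.8

# Hypothetical catalog rows; a real catalog would be read from a FITS table.
rows = [
    {"TARGETID": 1, "OBJTYPE": "TGT", "ZCAT_PRIMARY": True, "ZWARN": 0, "SPECTYPE": "QSO", "Z": 0.85},
    {"TARGETID": 2, "OBJTYPE": "SKY", "ZCAT_PRIMARY": True, "ZWARN": 0, "SPECTYPE": "QSO", "Z": 0.90},
    {"TARGETID": 3, "OBJTYPE": "TGT", "ZCAT_PRIMARY": True, "ZWARN": 4, "SPECTYPE": "QSO", "Z": 1.10},
    {"TARGETID": 4, "OBJTYPE": "TGT", "ZCAT_PRIMARY": True, "ZWARN": 0, "SPECTYPE": "GALAXY", "Z": 0.40},
    {"TARGETID": 5, "OBJTYPE": "TGT", "ZCAT_PRIMARY": False, "ZWARN": 0, "SPECTYPE": "QSO", "Z": 1.20},
]


def is_clean_quasar(row):
    """Return True if a row passes every cut listed in the text."""
    return (
        row["OBJTYPE"] == "TGT"          # science target, not a sky fiber
        and bool(row["ZCAT_PRIMARY"])    # primary spectrum of the object
        and row["ZWARN"] == 0            # no redshift-fit warning
        and row["SPECTYPE"] == "QSO"     # spectrally classified as a quasar
        and Z_MIN <= row["Z"] <= Z_MAX   # inside the search redshift range
    )


selected_ids = [row["TARGETID"] for row in rows if is_clean_quasar(row)]
```

Swapping the `SPECTYPE` test to `"GALAXY"` gives the analogous starting cut for the ELG sample described next.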

After selecting the quasars, the researchers used the FastSpec catalog to construct a sample of emission line galaxies (ELGs), a step that is crucial for constructing the simulated lenses. The catalog is produced by FastSpecFit, a lightweight pipeline that provides spectral information for DESI objects, including emission line fluxes, redshifts, and classifications. FastSpecFit fits templates and spectral models to each object to construct noise-free spectra. The ELGs were first selected with the same method and redshift range as the quasars, but with SPECTYPE = GALAXY. This screening yielded 16,500 emission line galaxies, of which only those from the main survey with an [OII] λ3726 flux > 2 × 10^-17 erg cm^-2 s^-1 were retained, ensuring that the selected emission lines lie above the noise level of the data.

Real observational data were used for training so that the training set would encompass all possible astrophysical and instrumental noise. However, the same data cannot be used for both training and the actual lens search. The dataset was therefore divided and used in two phases: Phase 1 trained on 47% of the 812,118 objects and searched the remainder, and Phase 2 swapped the two roles.

Phase 1:

* Training sample: The classification network and the redshift prediction network were trained on 70% of the 384,873 quasars in the Phase 1 training pool.

* Validation sample: The remaining 30% of the training pool was used to validate model performance during training.

* Blind sample: The blind sample consists of the 427,245 quasars that were not used for training, validation, or testing. Real lenses are searched for in this sample once training is complete.

* Test sample: Testing used 3,170 quasars from the Phase 1 blind sample, with a simulated lens system constructed for 10% of them. This test sample was used to evaluate network performance after hyperparameter optimization.

Phase 2:

In Phase 2, the training samples and blind samples are swapped, and the same process is repeated.

* Training sample: The CNN was trained on 70% of the 427,245 quasars in the Phase 1 blind sample.

* Validation sample: The remaining 30% of the 427,245 quasars was used for validation.

* Blind sample: The blind sample consists of the 384,873 quasars that were not used for training, validation, or testing.

* Test sample: Phase 2 testing used 3,547 quasars from the Phase 2 blind sample, independent of the training and validation subsets. A simulated lens system was constructed for 10% of them.
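
The two-phase scheme above amounts to splitting the catalog once and then swapping the roles of the two halves. The sketch below illustrates that bookkeeping under stated assumptions: the function name `two_phase_split`, the random shuffle, and the seed are illustrative choices, since the article does not say how objects were assigned to each half. Only the sample sizes and the 70/30 ratio come from the text.

```python
import random

N_TOTAL = 812_118              # quasars selected from DESI DR1
N_PHASE1 = 384_873             # Phase 1 training pool (about 47% of the total)
N_PHASE2 = N_TOTAL - N_PHASE1  # Phase 1 blind sample, reused for Phase 2 training


def two_phase_split(ids, n_first, train_frac=0.7, seed=0):
    """Split ids into two pools; each phase trains on one pool and searches the other."""
    rng = random.Random(seed)
    ids = list(ids)
    rng.shuffle(ids)  # assumption: random assignment to the two pools
    pool_a, pool_b = ids[:n_first], ids[n_first:]
    phases = []
    for pool, blind in ((pool_a, pool_b), (pool_b, pool_a)):
        n_train = int(train_frac * len(pool))
        phases.append({
            "train": pool[:n_train],   # 70% of the pool
            "val": pool[n_train:],     # remaining 30%
            "blind": blind,            # never seen during training
        })
    return phases


# Small demonstration with 100 fake object IDs and a 47/53 split.
demo = two_phase_split(range(100), n_first=47)
```

Because the blind sample of one phase is the training pool of the other, every quasar is searched exactly once by a network that never saw it during training.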

Training a CNN on simulated lens systems and unlensed quasar spectra

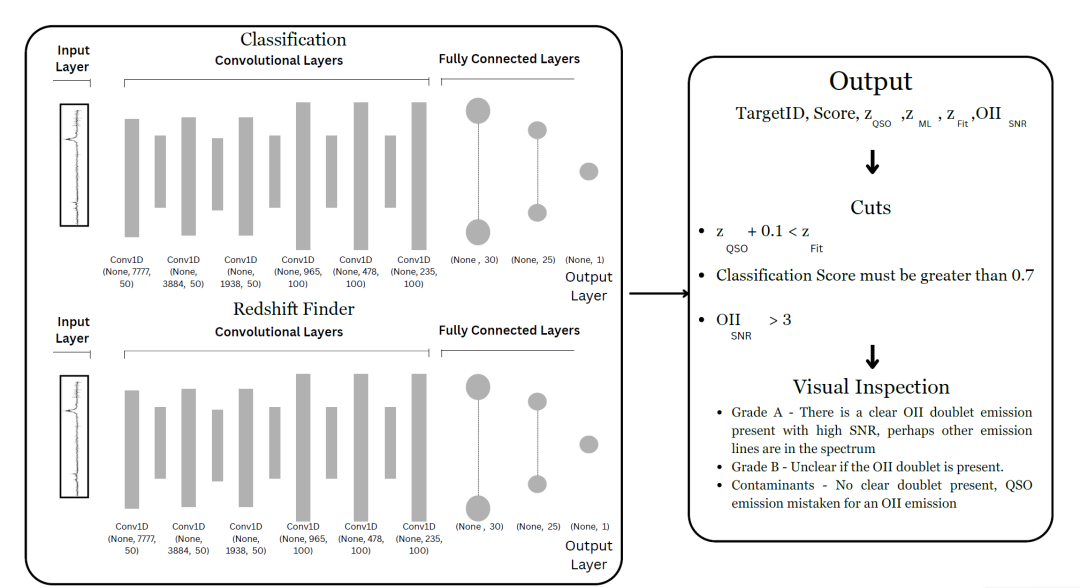

The key to building a model that can successfully identify quasars acting as strong gravitational lenses is to train it on labeled datasets, so that it learns to distinguish the spectral features of quasars that act as lenses from those of non-lens quasars. The accompanying diagram illustrates the complete training process.

Researchers used the spectra of quasars (QSOs) and emission line galaxies (ELGs) observed by DESI to train a convolutional neural network (CNN) on simulated lensing systems (positive samples) and unlensed quasar spectra (negative samples). The ratio of positive to negative samples in the training set was 10% to 90%.

① Training set construction and simulated lens system

Although simulated spectra could be used to generate training sets for QSOs and ELGs, this study aims to preserve the inherent characteristics of DESI spectra, which arise from the instrument, the observing conditions, or the celestial bodies themselves. For various reasons (such as spectral line broadening and luminosity differences), the [OII] lines of QSOs and ELGs are highly diverse. The researchers therefore trained the neural networks directly on observational data from DR1, so that the training set matches the overall survey properties of QSOs and ELGs.

However, QSOs acting as strong lenses are extremely rare. In the SDSS dataset, only 12 out of 297,301 quasars are candidates, and within the redshift range considered in this study, the DESI DR1 dataset contains only one. Real QSO lenses are therefore far too scarce to serve as positive samples, so a training set containing a sufficient number of positive and negative samples has to be constructed by superimposing the spectrum of a high-redshift ELG on the spectrum of a real QSO.
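
The superposition step can be illustrated with a short sketch. It assumes that the QSO and ELG spectra are already sampled on the same observed-frame wavelength grid and stored as plain lists of fluxes; the function names, the `scale` factor, and the random pairing are illustrative assumptions rather than the paper's procedure. The 10% positive fraction matches the ratio quoted above.

```python
import random


def make_mock_lens(qso_flux, elg_flux, z_qso, z_elg, scale=1.0):
    """Add a background ELG spectrum to a foreground QSO spectrum (same grid)."""
    if len(qso_flux) != len(elg_flux):
        raise ValueError("spectra must share the same wavelength grid")
    if z_elg <= z_qso:
        raise ValueError("the lensed ELG must lie behind the foreground QSO")
    return [q + scale * e for q, e in zip(qso_flux, elg_flux)]


def build_training_set(qsos, elgs, positive_frac=0.1, seed=0):
    """Label unlensed QSOs 0 and QSO+ELG composites 1.

    qsos and elgs are lists of (flux_list, redshift) tuples.
    """
    rng = random.Random(seed)
    samples = []
    for qso_flux, z_qso in qsos:
        # Only ELGs at higher redshift than the QSO can play the lensed source.
        background = [elg for elg in elgs if elg[1] > z_qso]
        if background and rng.random() < positive_frac:
            elg_flux, z_elg = rng.choice(background)
            samples.append((make_mock_lens(qso_flux, elg_flux, z_qso, z_elg), 1))
        else:
            samples.append((list(qso_flux), 0))
    return samples
```

Because both ingredients are real DESI observations, a composite built this way inherits the instrumental and observational noise of the survey, which is the property the text says the authors wanted to preserve.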

② Training and Architecture of CNN Classifiers

The classifier network consists of six convolutional layers (50 filters in each of the first three layers and 100 filters in each of the last three) followed by two fully connected layers (30 and 25 nodes, respectively), as shown on the left side of the diagram above. The convolutional layers extract local features in the spectrum, such as the emission lines in quasar and ELG spectra, and the network outputs a score between 0 and 1. During training, the threshold is set to 0.5, so any sample with a predicted score ≥ 0.5 is identified as a lens candidate. When the network is applied to the blind samples, the threshold is raised to 0.7, the value that maximizes the F1 score.

During training, the Adam optimizer was used with exponential learning rate decay, decreasing the learning rate by a factor of 0.95 every 500 steps. Training was performed using TensorFlow, and scikit-learn was used for training set partitioning, confusion matrix calculation, and metric computation.
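
The decay rule described above can be written out as a small function. This is a sketch under assumptions: the initial learning rate of 1e-3 is a placeholder (the article does not give one), and the article does not say whether the decay is applied in discrete steps ("staircase") or continuously, so both variants are shown. The formula is the same one that TensorFlow's `ExponentialDecay` schedule uses.

```python
def decayed_learning_rate(step, initial_lr=1e-3, decay_rate=0.95,
                          decay_steps=500, staircase=True):
    """Exponential decay: multiply the rate by decay_rate every decay_steps steps.

    initial_lr is a hypothetical value; decay_rate and decay_steps come from the text.
    """
    if staircase:
        exponent = step // decay_steps   # drops once every 500 steps
    else:
        exponent = step / decay_steps    # smooth decay between steps
    return initial_lr * decay_rate ** exponent


# Learning rate at a few training steps (staircase variant).
schedule = {step: decayed_learning_rate(step) for step in (0, 499, 500, 1000, 5000)}
```

After 5,000 steps the rate has been multiplied by 0.95 ten times, which is roughly a 40% reduction from its starting value.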

Subsequently, the researchers trained two CNNs, one on the Phase 1 training sample and one on the Phase 2 training sample. The CNN trained in Phase 1 is applied to the Phase 1 blind sample, and the CNN trained in Phase 2 is applied to the Phase 2 blind sample. After training, each model's classification performance is evaluated on the test sample, and the threshold is tuned using the F1 score, which combines true positives (TP), false positives (FP), and false negatives (FN). In both phases, the highest F1 score corresponds to a threshold of 0.7.
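
Threshold tuning of this kind can be sketched in a few lines, using the standard definition F1 = 2TP / (2TP + FP + FN). The labels, scores, and candidate thresholds below are invented toy values for illustration only; they are not the paper's test sample and do not reproduce its result.

```python
def f1_at_threshold(y_true, scores, threshold):
    """F1 score when samples with score >= threshold are called lenses."""
    tp = sum(1 for y, s in zip(y_true, scores) if y == 1 and s >= threshold)
    fp = sum(1 for y, s in zip(y_true, scores) if y == 0 and s >= threshold)
    fn = sum(1 for y, s in zip(y_true, scores) if y == 1 and s < threshold)
    denom = 2 * tp + fp + fn
    return 2 * tp / denom if denom else 0.0


def best_threshold(y_true, scores, thresholds):
    """Return the candidate threshold with the highest F1 score."""
    return max(thresholds, key=lambda t: f1_at_threshold(y_true, scores, t))


# Toy data: 1 = simulated lens, 0 = unlensed quasar.
toy_labels = [1, 1, 0, 0, 0]
toy_scores = [0.90, 0.75, 0.65, 0.20, 0.10]
toy_best = best_threshold(toy_labels, toy_scores, [0.5, 0.6, 0.7, 0.8])
```

Raising the threshold trades false positives for false negatives, and the F1 score picks the point where that trade is best balanced on the test sample.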

Finally, each network is applied to blind samples (observed quasars not seen by the model) to generate the first list of lens candidates.

③ Redshift prediction

In the spectrum of a quasar lens, the redshift of the foreground QSO is easy to measure, but the redshift of the background ELG is difficult to obtain directly. The research team compared the performance of two methods:

* Redrock: The spectrum is fitted with PCA templates, and a grid search is performed to minimize the χ².

* Redshift CNN regression model: It adopts a CNN structure similar to that of the classifier, but outputs a continuous redshift value and is trained with a mean squared error (MSE) loss.

Building on the CNN's redshift prediction, the research team further refined the estimate by fitting a local double Gaussian to the [OII] doublet within a range of Δz = 0.1, while also computing the signal-to-noise ratio (SNR) used to screen high-quality candidates.
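
The refinement idea can be illustrated with a deliberately simplified sketch: a two-Gaussian [OII] template is slid over a redshift window around the initial guess, and the redshift with the smallest squared residual is kept. Several things here are assumptions rather than the paper's method: the approximate vacuum rest wavelengths, the equal amplitudes and fixed width of the two components, the brute-force grid in place of a full nonlinear fit, and the reading of Δz = 0.1 as a window around the CNN prediction.

```python
import math

# Approximate vacuum rest wavelengths of the [OII] doublet in Angstrom (assumed).
OII_REST = (3727.09, 3729.88)


def oii_doublet(wave, z, amp1, amp2, sigma):
    """Sum of two Gaussians centred on the redshifted [OII] lines."""
    c1, c2 = (rest * (1.0 + z) for rest in OII_REST)
    return [
        amp1 * math.exp(-0.5 * ((x - c1) / sigma) ** 2)
        + amp2 * math.exp(-0.5 * ((x - c2) / sigma) ** 2)
        for x in wave
    ]


def refine_redshift(wave, flux, z_guess, dz=0.1, n_grid=2001, sigma=1.5):
    """Grid search for the redshift in [z_guess - dz, z_guess + dz]."""
    best_z, best_chi2 = z_guess, float("inf")
    for i in range(n_grid):
        z = z_guess - dz + 2.0 * dz * i / (n_grid - 1)
        template = oii_doublet(wave, z, 1.0, 1.0, sigma)
        norm = sum(t * t for t in template)
        if norm == 0.0:
            continue  # both lines fall outside the wavelength window
        # Best-fitting non-negative amplitude for this redshift (linear least squares).
        amp = max(sum(t * f for t, f in zip(template, flux)) / norm, 0.0)
        chi2 = sum((f - amp * t) ** 2 for t, f in zip(template, flux))
        if chi2 < best_chi2:
            best_z, best_chi2 = z, chi2
    return best_z


# Noiseless synthetic example: a doublet at z = 1.0 and a slightly offset guess.
wave = [7400.0 + 0.5 * i for i in range(241)]
flux = oii_doublet(wave, 1.0, 1.0, 1.0, 1.5)
z_fit = refine_redshift(wave, flux, z_guess=1.003, dz=0.01, n_grid=201)
```

A production fit would normally let both amplitudes and the line width vary and would weight the residuals by the per-pixel noise, which also yields the SNR of the detected doublet.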

Results: Seven strong lens candidates discovered

① CNN classifier performance: Excellent performance on both training and validation sets.

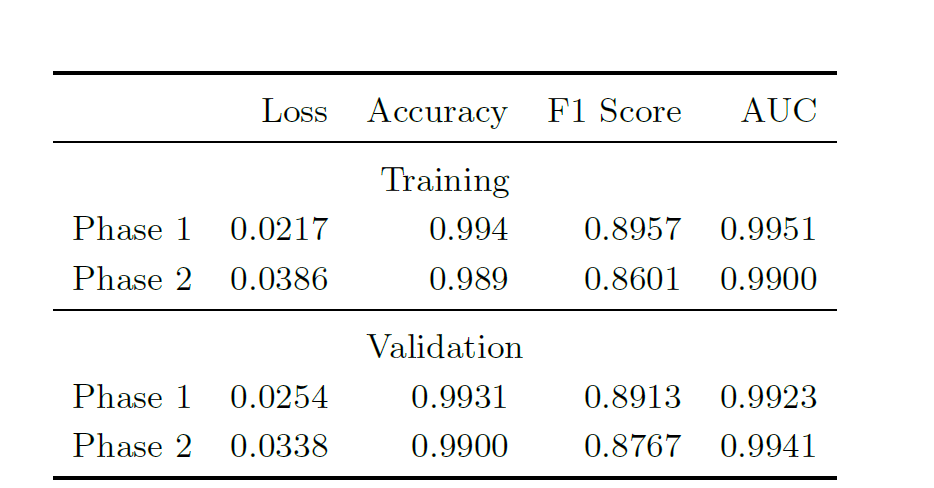

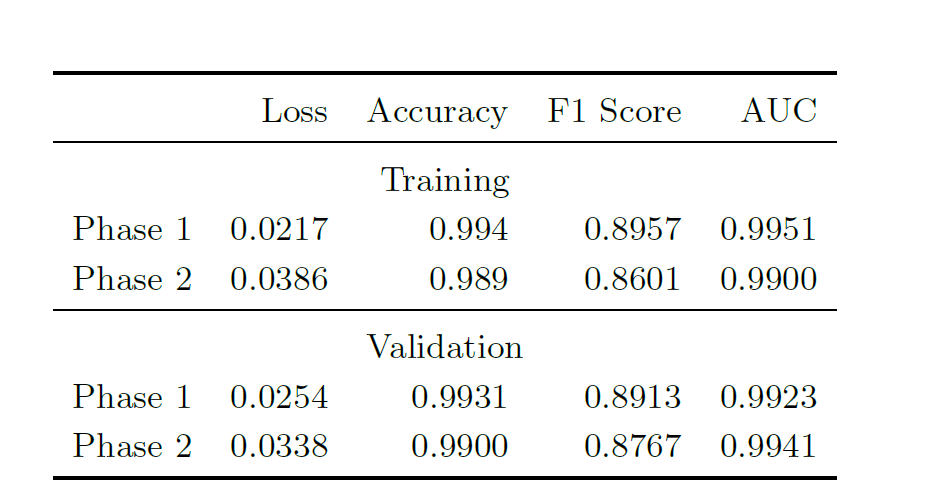

The performance metrics of the CNN classifier applied to the training and validation sets of Phase 1 and Phase 2 are shown in the table below:

The results show that the CNN classifier performs well on both the training and validation sets, and both the F1 score and AUC metric indicate that the model can effectively balance precision and recall.

② Redshift measurement performance: Across all SNR ranges, the CNN redshift estimator significantly outperforms Redrock.

In the test samples, the researchers sorted the objects by the signal-to-noise ratio (SNR) of the high-redshift ELG to see how the CNN and Redrock perform across different SNR ranges of the [OII] emission feature. They divided the samples into three groups based on percentiles: low SNR (3 ≤ SNR < 7.52), medium SNR (7.52 ≤ SNR < 16.63), and high SNR (SNR ≥ 16.63).

The results show:

* High SNR: The CNN recovered 100% of the source redshifts to within Δz = 0.1, compared with 99.48% for Gaussian fitting and 51.04% for Redrock.

* Medium SNR: The CNN recovered 99.48%, Gaussian fitting 100%, and Redrock 37.70%.

* Low SNR: The CNN recovered 100.00%, Gaussian fitting 96.88%, and Redrock 29.17%.
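
The per-bin recovery statistic reported above can be computed as sketched below. The bin edges (3, 7.52, 16.63) and the Δz = 0.1 tolerance come from the text; the function names, the choice of `<=` for the tolerance test, and the sample values are illustrative assumptions.

```python
def snr_bin(snr):
    """Assign an SNR value to the low / medium / high group used in the text."""
    if snr < 3:
        return None        # below the lowest quoted bin edge
    if snr < 7.52:
        return "low"
    if snr < 16.63:
        return "medium"
    return "high"


def recovery_fraction(z_true, z_pred, snrs, tol=0.1):
    """Fraction of objects per SNR bin whose predicted redshift is within tol."""
    counts = {}
    for zt, zp, snr in zip(z_true, z_pred, snrs):
        label = snr_bin(snr)
        if label is None:
            continue
        hits, total = counts.get(label, (0, 0))
        counts[label] = (hits + (abs(zp - zt) <= tol), total + 1)
    return {label: hits / total for label, (hits, total) in counts.items()}


# Made-up example: four simulated lenses with known source redshifts.
fractions = recovery_fraction(
    z_true=[1.0, 1.0, 1.2, 1.5],
    z_pred=[1.05, 1.30, 1.21, 1.50],
    snrs=[4.0, 5.0, 10.0, 20.0],
)
```

Running the same function on the CNN, Gaussian-fit, and Redrock predictions for one test sample gives directly comparable percentages for each bin.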

In summary, across all SNR ranges, the CNN redshift estimator combined with Gaussian fitting significantly outperformed Redrock in recovering the background ELG redshift. Even with significant sky lines and residual noise in the infrared channels (even after masking), the CNN still outperforms the standard Redrock approach. Gaussian fitting achieves near-perfect accuracy in the medium SNR range but performs worse at very low SNR, where the pure CNN method is superior.

③ Application to blind samples: Identification of 7 Grade A high-priority lens candidates

After the trained CNNs were applied to the 812,118 quasar spectra, a total of 494 candidates were selected. Manual visual inspection, combined with SNR and redshift information, then narrowed these down to 7 high-priority (Grade A) lens candidates, as shown in the table below:

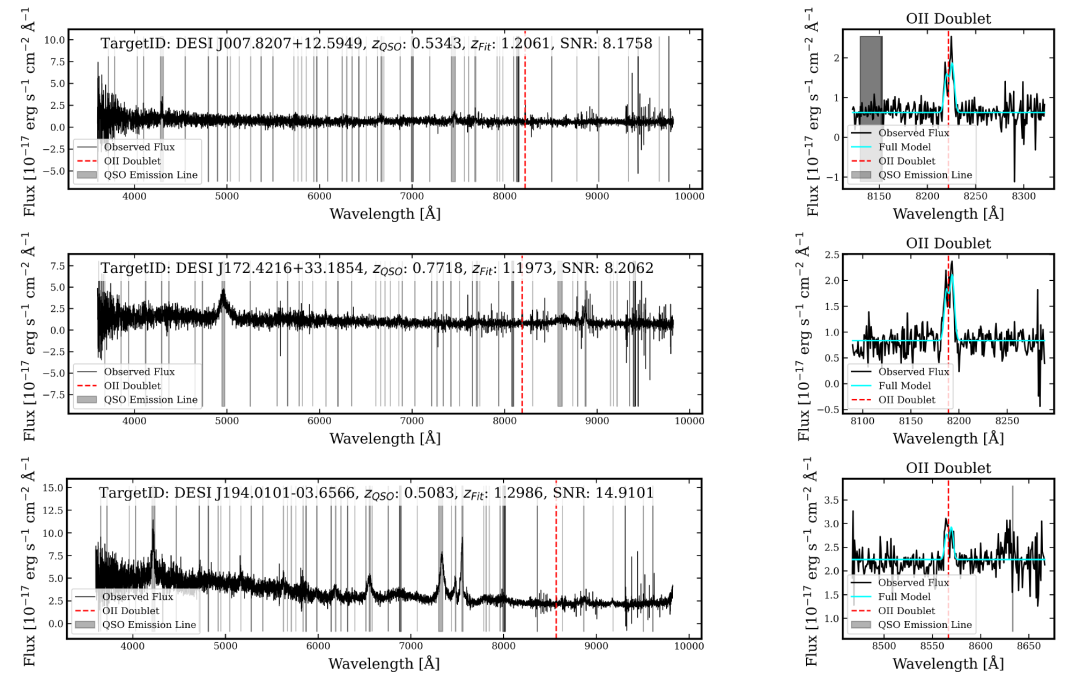

All seven Grade A candidates appear to show strong [OII] doublets at redshifts higher than that of the QSO, as shown in the figure below; four of these candidates also show [OIII] λ4959 Å and Hβ lines at the same redshift.

Deep learning is reshaping the paradigm of astronomical research.

Over the past decade, AI, especially deep learning, has been rapidly reshaping the research paradigm in astronomy. From data acquisition and feature extraction to the scientific discovery process, AI's role in astronomy has evolved from an "auxiliary tool" to a core driving force for cutting-edge breakthroughs. The fundamental reason is that astronomy is entering an era of unprecedented data explosion.

Large-scale sky surveys (such as DESI, LSST, and Euclid) generate petabytes of data annually, far exceeding the processing capabilities of traditional manual analysis and classical algorithms. Deep learning models excel at automatically extracting complex patterns from massive amounts of observational data, making them ideal for processing spectral, image, and time-series data.

One representative example came in November 2025, when a team from more than ten research institutions worldwide, including the University of California, Berkeley, the University of Cambridge, and the University of Oxford, jointly launched AION-1 (Astronomical Omni-modal Network), the first family of large-scale multimodal foundation models for astronomy. AION-1 integrates and models heterogeneous observational information such as images, spectra, and catalog data through a unified early-fusion backbone network. It performs well in zero-shot scenarios, and its linear probing accuracy is comparable to that of models trained specifically for individual tasks. By systematically addressing core challenges such as data heterogeneity, noise, and instrument diversity, AION-1 provides a feasible multimodal modeling paradigm for astronomy and other scientific fields.

Paper title: AION-1: Omnimodal Foundation Model for Astronomical Sciences

Paper address: https://openreview.net/forum?id=6gJ2ZykQ5W

In the field of celestial object classification, deep learning has become a star technology. Whether for galaxy morphology classification, supernova identification, or strong gravitational lens searches, both CNN and Transformer architectures can find key features related to physical processes in high-dimensional, unstructured data, achieving speed and consistency far exceeding those of manual methods.

For example, a team led by Dr. Feng Haicheng from the Yunnan Astronomical Observatory of the Chinese Academy of Sciences, in collaboration with Dr. Li Rui from Zhengzhou University and Professor Nicola R. Napolitano from the University of Naples Federico II, Italy, proposed a multimodal neural network model that integrates morphological features with SED information to achieve high-precision automatic identification of stars, quasars, and galaxies. The method has been applied to a 1,350 square degree sky area in the fifth data release of the European Southern Observatory's KiDS project, classifying over 27 million r-band objects brighter than magnitude 23.

Paper title: Morpho-photometric Classification of KiDS DR5 Sources Based on Neural Networks: A Comprehensive Star–Quasar–Galaxy Catalog

Paper address: https://iopscience.iop.org/article/10.3847/1538-4365/adde5a

Overall, AI is not simply replacing traditional astronomical methods, but is constantly driving the upgrading of scientific research paradigms: freeing astronomers from tedious data processing so they can focus on fundamental physical questions; preventing rare celestial bodies from being overwhelmed by massive amounts of data; and enabling a faster and deeper understanding of the structure and evolution of the universe.
