A Neural Attention Model for Abstractive Sentence Summarization

Alexander M. Rush, Sumit Chopra, Jason Weston

Abstract

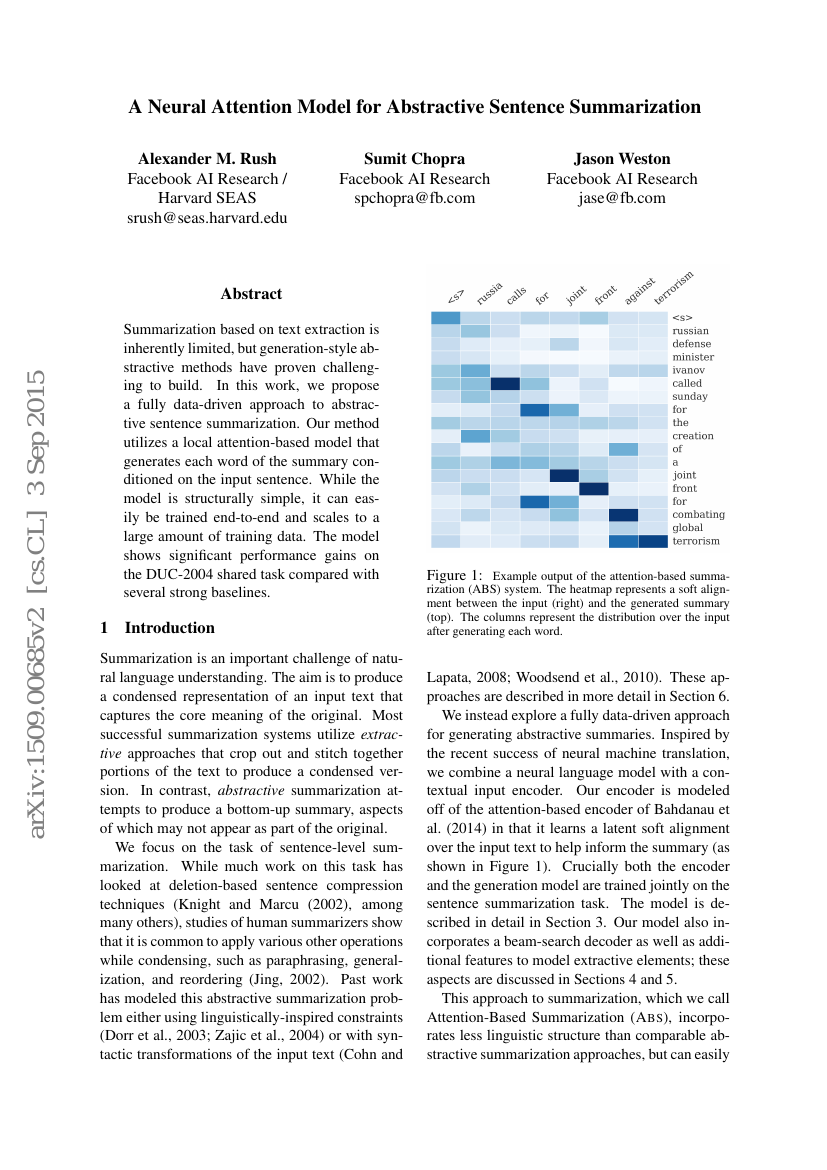

Summarization based on text extraction is inherently limited, but generation-style abstractive methods have proven challenging to build. In this work, we propose a fully data-driven approach to abstractive sentence summarization. Our method utilizes a local attention-based model that generates each word of the summary conditioned on the input sentence. While the model is structurally simple, it can easily be trained end-to-end and scales to a large amount of training data. The model shows significant performance gains on the DUC-2004 shared task compared with several strong baselines.
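The idea of attending over the input sentence while generating each summary word can be illustrated with a minimal sketch. This is not the authors' implementation: the dot-product scoring, the function names `softmax` and `attention_context`, and the toy vectors are all assumptions chosen for illustration. It only shows how a query vector (standing in for the summary context generated so far) might be turned into soft weights over the input words and a weighted context vector.

```python
import math


def softmax(scores):
    """Convert raw scores into weights that sum to 1."""
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention_context(input_vectors, query):
    """Hypothetical helper: soft attention over input word vectors.

    input_vectors: one embedding (list of floats) per input word.
    query: a vector standing in for the summary context so far (assumption).
    Returns the attention weights and the weighted average of the inputs.
    """
    # Score each input word by its dot product with the query.
    scores = [sum(a * b for a, b in zip(vec, query)) for vec in input_vectors]
    weights = softmax(scores)
    dim = len(input_vectors[0])
    # The context is the attention-weighted average of the input vectors.
    context = [
        sum(w * vec[i] for w, vec in zip(weights, input_vectors))
        for i in range(dim)
    ]
    return weights, context


# Toy data: three input "words" with 2-dimensional embeddings.
inputs = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
query = [0.0, 2.0]
weights, context = attention_context(inputs, query)
```

In this toy run, the second and third input words score equally against the query and receive more weight than the first. In a full model, a context vector of this kind would feed a next-word distribution, and all parameters would be learned end-to-end.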

Benchmarks

| Benchmark | Methodology | Metrics |
|---|---|---|
| extractive-text-summarization-on-duc-2004 | Abs | ROUGE-1: 26.55 ROUGE-2: 7.06 ROUGE-L: 22.05 |
| text-summarization-on-duc-2004-task-1 | ABS | ROUGE-L: 22.05 |
| text-summarization-on-duc-2004-task-1 | Abs+ | ROUGE-1: 28.18 ROUGE-2: 8.49 ROUGE-L: 23.81 |
| text-summarization-on-gigaword | Abs+ | ROUGE-1: 31 |
| text-summarization-on-gigaword | Abs | ROUGE-1: 30.88 |
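For readers unfamiliar with the ROUGE-1 metric in the table, the following is a simplified sketch of unigram recall against a single reference. It is not the official ROUGE toolkit: the whitespace tokenization, the lowercasing, and the function name `rouge1_recall` are assumptions, and the official scorer supports options (stemming, multiple references, length limits) that are omitted here.

```python
from collections import Counter


def rouge1_recall(candidate, reference):
    """Simplified ROUGE-1 recall: clipped unigram overlap / reference length."""
    cand_counts = Counter(candidate.lower().split())
    ref_counts = Counter(reference.lower().split())
    if not ref_counts:
        return 0.0
    # Each reference token can be matched at most as often as it appears
    # in the candidate (clipped counts).
    overlap = sum(min(count, cand_counts[tok]) for tok, count in ref_counts.items())
    return overlap / sum(ref_counts.values())


score = rouge1_recall("the cat sat", "the cat sat on the mat")
# score is 0.5: three of the six reference tokens are matched
```

ROUGE-2 follows the same pattern over bigrams, and ROUGE-L is based on the longest common subsequence rather than fixed n-grams.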
