Khalil Mrini Franck Dernoncourt Quan Tran Trung Bui Walter Chang Ndapa Nakashole

Abstract

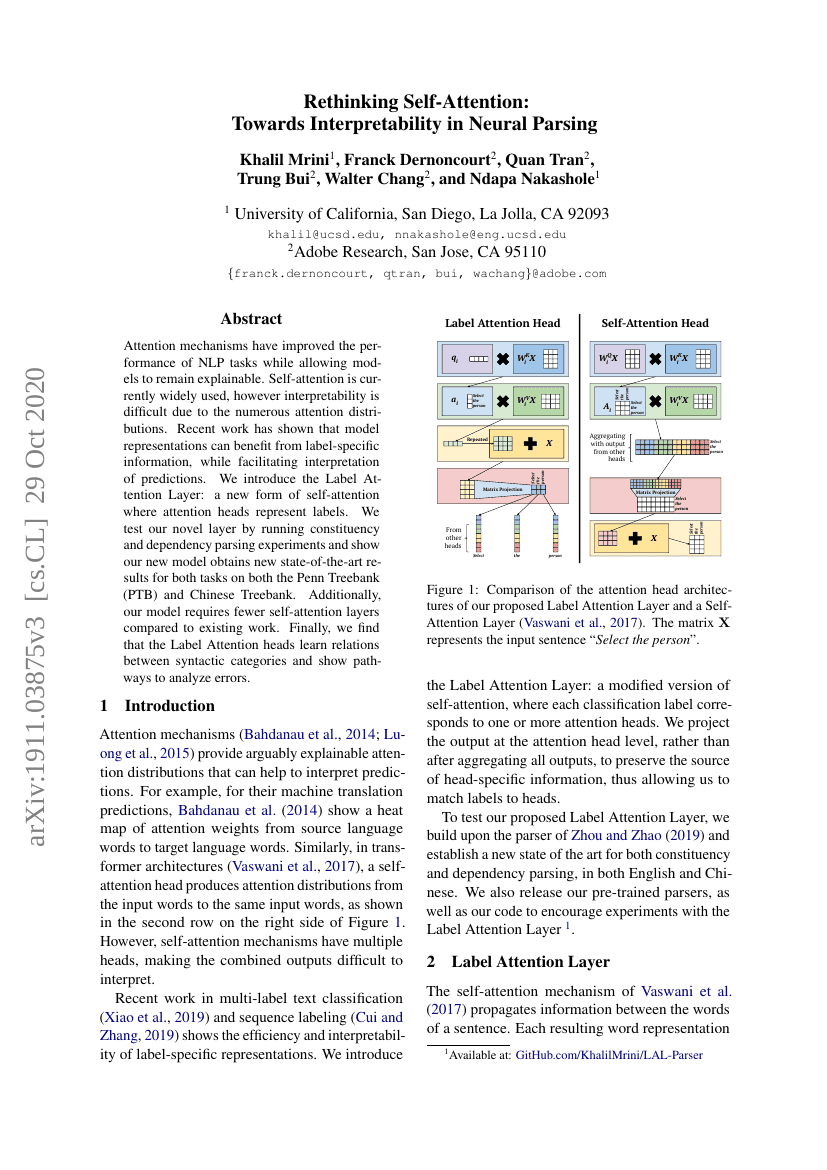

Attention mechanisms have improved the performance of NLP tasks while allowing models to remain explainable. Self-attention is currently widely used, however interpretability is difficult due to the numerous attention distributions. Recent work has shown that model representations can benefit from label-specific information, while facilitating interpretation of predictions. We introduce the Label Attention Layer: a new form of self-attention where attention heads represent labels. We test our novel layer by running constituency and dependency parsing experiments and show our new model obtains new state-of-the-art results for both tasks on both the Penn Treebank (PTB) and Chinese Treebank. Additionally, our model requires fewer self-attention layers compared to existing work. Finally, we find that the Label Attention heads learn relations between syntactic categories and show pathways to analyze errors.

Code Repositories

Benchmarks

| Benchmark | Methodology | Metrics |

|---|---|---|

| constituency-parsing-on-penn-treebank | Label Attention Layer + HPSG + XLNet | F1 score: 96.38 |

| dependency-parsing-on-penn-treebank | Label Attention Layer + HPSG + XLNet | LAS: 96.26 POS: 97.3 UAS: 97.42 |

Build AI with AI

From idea to launch — accelerate your AI development with free AI co-coding, out-of-the-box environment and best price of GPUs.