Zihao Ye, Qipeng Guo, Quan Gan, Xipeng Qiu, Zheng Zhang

Abstract

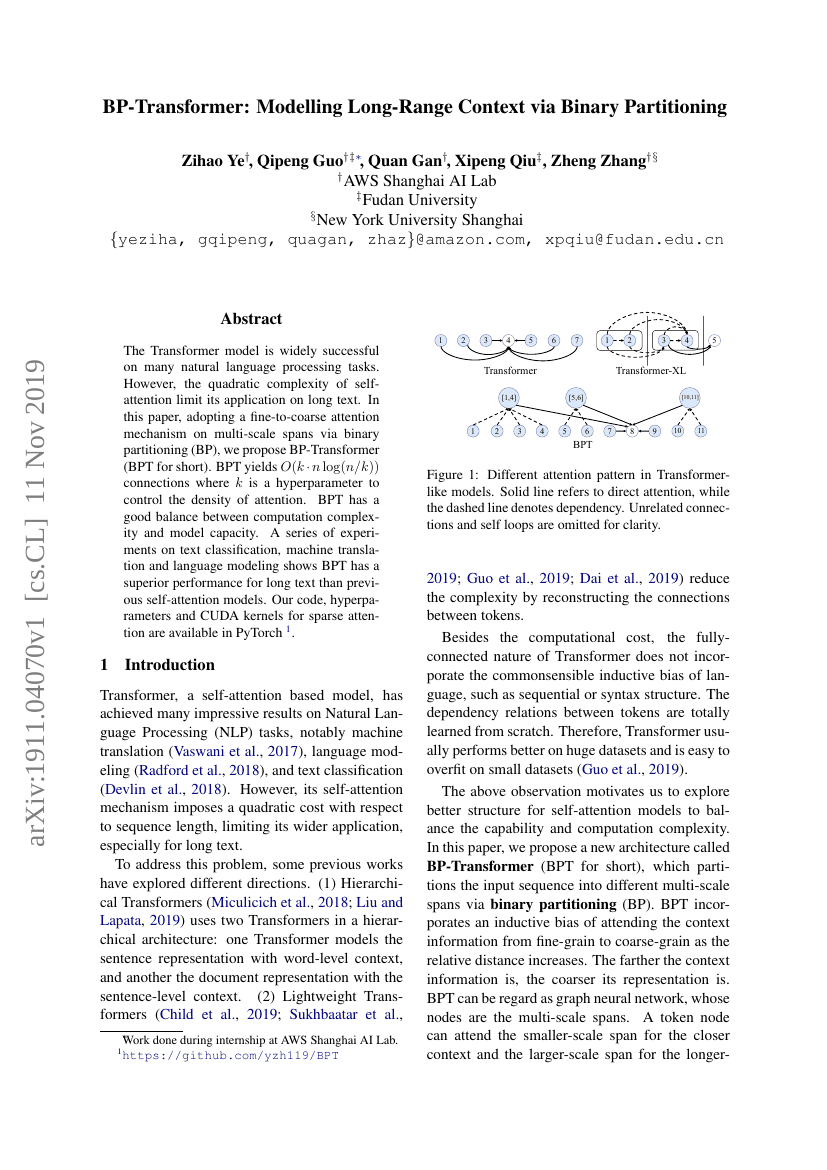

The Transformer model is widely successful on many natural language processing tasks. However, the quadratic complexity of self-attention limits its application to long text. In this paper, we propose BP-Transformer (BPT for short), which adopts a fine-to-coarse attention mechanism on multi-scale spans obtained via binary partitioning (BP). For a sequence of length $n$, BPT yields $O(k \cdot n \log(n/k))$ connections, where $k$ is a hyperparameter that controls the density of attention. BPT strikes a good balance between computational complexity and model capacity. A series of experiments on text classification, machine translation and language modeling shows that BPT outperforms previous self-attention models on long text. Our code, hyperparameters and CUDA kernels for sparse attention are available in PyTorch.
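
To make the fine-to-coarse idea concrete, the sketch below enumerates, for each token, the spans it would attend to under one simplified binary-partitioning rule. The functions `left_context`, `right_context` and `count_connections` are hypothetical names. The selection rule is our own assumption, not the authors' exact formulation: take $k$ aligned spans per tree level, plus at most one extra so that the next, twice-as-wide level starts on an aligned boundary. The sketch only lists token-to-span edges. It ignores how span-node representations are computed and updated, and it does not claim to reproduce the paper's connection counts.

```python
from typing import List, Tuple

# A span is a half-open token range [start, end) that corresponds to one node of
# a binary-partition tree. Hypothetical, simplified selection rule (an assumption,
# not the paper's exact rule): at each tree level take k spans, plus at most one
# extra so the next (twice as wide) level starts on an aligned boundary.
Span = Tuple[int, int]


def left_context(i: int, k: int) -> List[Span]:
    """Spans to the left of token i, ordered fine (near) to coarse (far)."""
    spans: List[Span] = []
    lo = i    # tokens in [lo, i) are already covered
    size = 1  # span width at the current tree level (2 ** level)
    while lo > 0:
        taken = 0
        while lo > 0 and (taken < k or lo % (2 * size) != 0):
            spans.append((lo - size, lo))  # aligned: lo is a multiple of size
            lo -= size
            taken += 1
        size *= 2  # move one level up the tree: spans become twice as wide
    return spans


def right_context(i: int, n: int, k: int) -> List[Span]:
    """Spans to the right of token i in a length-n sequence, fine to coarse."""
    spans: List[Span] = []
    hi = i + 1  # tokens in [i + 1, hi) are already covered
    size = 1
    while hi < n:
        taken = 0
        while hi < n and (taken < k or hi % (2 * size) != 0):
            # Clip the last span when n is not a power of two.
            spans.append((hi, min(hi + size, n)))
            hi += size
            taken += 1
        size *= 2
    return spans


def count_connections(n: int, k: int) -> int:
    """Total token-to-span connections over a length-n sequence."""
    return sum(len(left_context(i, k)) + len(right_context(i, n, k))
               for i in range(n))


if __name__ == "__main__":
    print(left_context(13, 2))
    # -> [(12, 13), (11, 12), (10, 11), (8, 10), (6, 8), (4, 6), (0, 4)]
    # Compare sparse connections against dense self-attention (n * n pairs).
    for n in (256, 1024, 4096):
        print(n, count_connections(n, 4), n * n)
```

Tokens near position `i` are covered by single-token spans, and distant tokens are covered by exponentially wider spans. Under this rule each token touches roughly $k$ spans per level over about $\log_2(n/k)$ levels, which gives the $k \cdot n \log(n/k)$ scaling. Raising `k` adds more fine-grained spans at every level, so attention becomes denser.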


Benchmarks

| Benchmark | Methodology | Metrics |
|---|---|---|
| language-modelling-on-enwiki8 | BP-Transformer (12 layers) | Bits per character (BPC): 1.02; Number of params: 38M |
| language-modelling-on-text8 | BP-Transformer (12 layers) | Bits per character (BPC): 1.11 |
| machine-translation-on-iwslt2015-chinese | BP-Transformer | BLEU: 19.84 |
| sentiment-analysis-on-imdb | BP-Transformer + GloVe | Accuracy (%): 92.12 |
| sentiment-analysis-on-sst-5-fine-grained | BP-Transformer + GloVe | Accuracy (%): 52.71 |
