Zhou Yu Yuhao Cui Jun Yu Meng Wang Dacheng Tao Qi Tian

Abstract

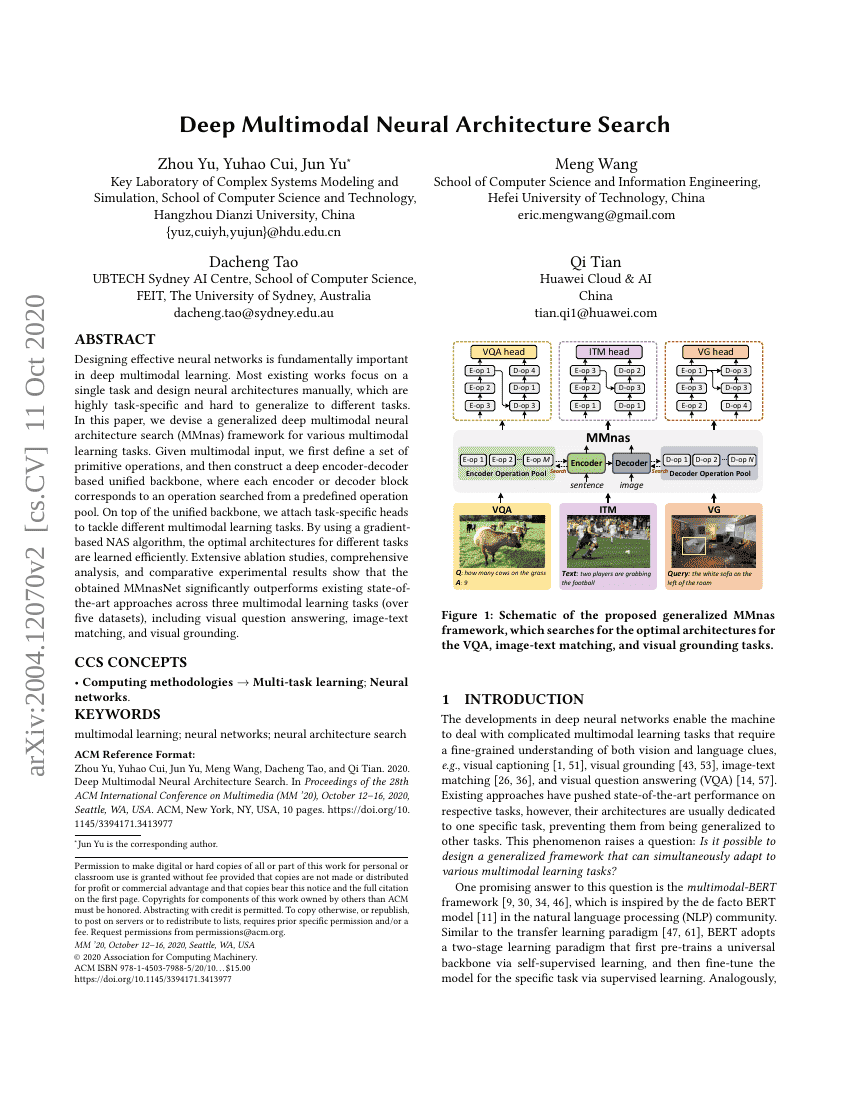

Designing effective neural networks is fundamentally important in deep multimodal learning. Most existing works focus on a single task and design neural architectures manually, which are highly task-specific and hard to generalize to different tasks. In this paper, we devise a generalized deep multimodal neural architecture search (MMnas) framework for various multimodal learning tasks. Given multimodal input, we first define a set of primitive operations, and then construct a deep encoder-decoder based unified backbone, where each encoder or decoder block corresponds to an operation searched from a predefined operation pool. On top of the unified backbone, we attach task-specific heads to tackle different multimodal learning tasks. By using a gradient-based NAS algorithm, the optimal architectures for different tasks are learned efficiently. Extensive ablation studies, comprehensive analysis, and comparative experimental results show that the obtained MMnasNet significantly outperforms existing state-of-the-art approaches across three multimodal learning tasks (over five datasets), including visual question answering, image-text matching, and visual grounding.

Code Repositories

Benchmarks

| Benchmark | Methodology | Metrics |

|---|---|---|

| visual-question-answering-on-vqa-v2-test-std | Single, w/o VLP | number: 58.62 other: 63.78 overall: 73.86 yes/no: 89.46 |

Build AI with AI

From idea to launch — accelerate your AI development with free AI co-coding, out-of-the-box environment and best price of GPUs.