Hanting Chen, Yunhe Wang, Tianyu Guo, Chang Xu, Yiping Deng, Zhenhua Liu, Siwei Ma, Chunjing Xu, Chao Xu, Wen Gao

Abstract

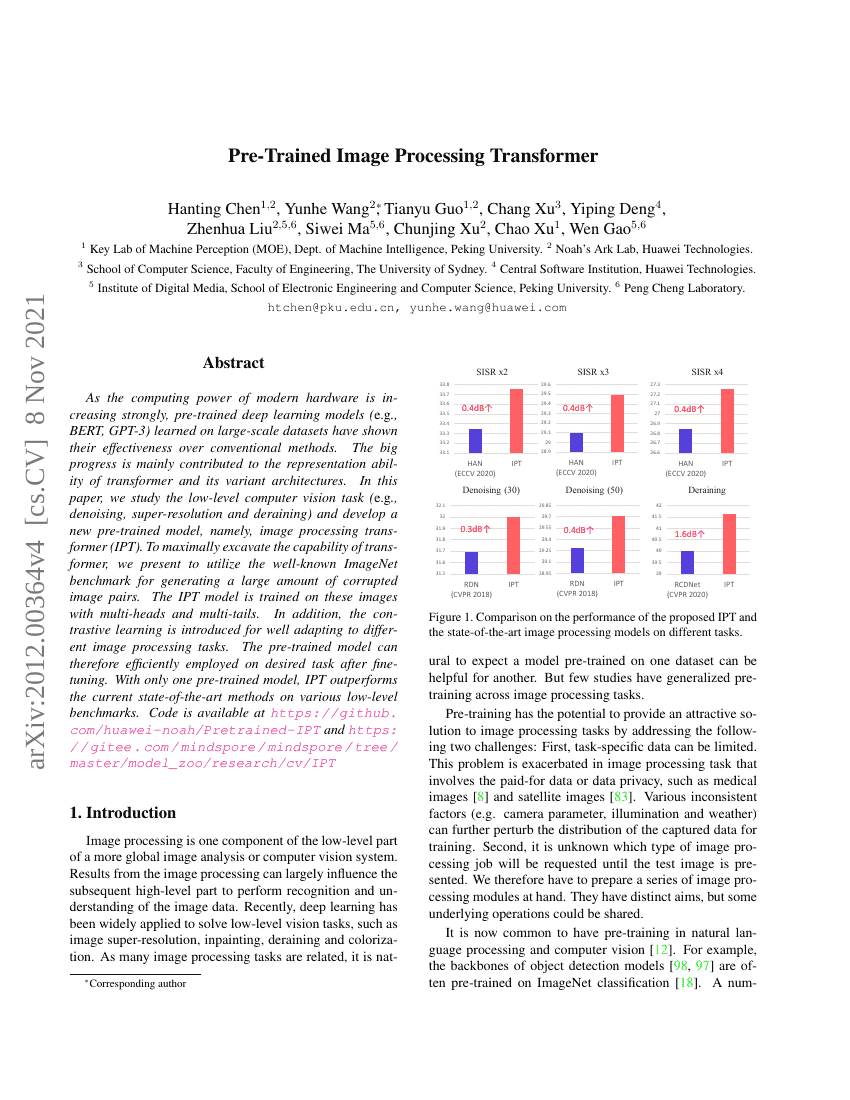

As the computing power of modern hardware grows rapidly, pre-trained deep learning models (e.g., BERT, GPT-3) learned on large-scale datasets have shown their effectiveness over conventional methods. This progress is mainly attributed to the representation ability of the transformer and its variant architectures. In this paper, we study low-level computer vision tasks (e.g., denoising, super-resolution and deraining) and develop a new pre-trained model, namely, the image processing transformer (IPT). To fully exploit the capability of the transformer, we propose using the well-known ImageNet benchmark to generate a large number of corrupted image pairs. The IPT model is trained on these images with multiple heads and multiple tails. In addition, contrastive learning is introduced so that the model adapts well to different image processing tasks. The pre-trained model can therefore be efficiently employed on the desired task after fine-tuning. With only one pre-trained model, IPT outperforms the current state-of-the-art methods on various low-level benchmarks. Code is available at https://github.com/huawei-noah/Pretrained-IPT and https://gitee.com/mindspore/mindspore/tree/master/model_zoo/research/cv/IPT
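The idea of generating corrupted image pairs from clean images can be illustrated with a minimal sketch. It is not the authors' pipeline: the function names, the noise level (sigma = 50 on a 0-255 scale), the scale factor (2), and the use of box-filter averaging in place of a proper resampling kernel are all assumptions made for illustration.

```python
import numpy as np


def add_gaussian_noise(clean, sigma=50.0, rng=None):
    """Return a noisy copy of `clean` (float array with values in [0, 255])."""
    rng = np.random.default_rng() if rng is None else rng
    noisy = clean + rng.normal(0.0, sigma, size=clean.shape)
    return np.clip(noisy, 0.0, 255.0)


def downsample(clean, scale=2):
    """Downsample an H x W x C image by averaging scale x scale blocks.

    Assumption: box-filter averaging is used here only as a simple
    stand-in for whatever resampling kernel a real pipeline would use.
    """
    h, w, c = clean.shape
    h, w = h - h % scale, w - w % scale  # crop so the size divides evenly
    blocks = clean[:h, :w].reshape(h // scale, scale, w // scale, scale, c)
    return blocks.mean(axis=(1, 3))


def make_pairs(clean, rng=None):
    """Build (corrupted input, clean target) pairs for two hypothetical tasks."""
    return {
        "denoise_sigma50": (add_gaussian_noise(clean, 50.0, rng), clean),
        "sr_x2": (downsample(clean, 2), clean),
    }
```

Each task key maps to an input and its clean target, so a single clean image yields one supervised training pair per task. In a multi-head, multi-tail setup, the task key would then select which head and tail process the pair.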


Benchmarks

| Benchmark | Methodology | Metrics |
|---|---|---|
| color-image-denoising-on-cbsd68-sigma50 | IPT | PSNR: 29.39 |
| color-image-denoising-on-urban100-sigma50 | IPT | PSNR: 29.71 |
| image-super-resolution-on-bsd100-2x-upscaling | IPT | PSNR: 32.48 |
| image-super-resolution-on-set14-3x-upscaling | IPT | PSNR: 30.85 |
| image-super-resolution-on-urban100-3x | IPT | PSNR: 29.49 |
| single-image-deraining-on-rain100l | IPT | PSNR: 41.62, SSIM: 0.988 |
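
For readers unfamiliar with the PSNR metric reported above, the following is a minimal sketch of the standard definition, 10 * log10(MAX^2 / MSE), in decibels. The function name and the default peak value of 255 are assumptions. Benchmark protocols differ in details such as colour space and border cropping, so this sketch is not expected to reproduce the reported numbers exactly.

```python
import numpy as np


def psnr(reference, estimate, max_value=255.0):
    """Peak signal-to-noise ratio in dB between two same-shaped images."""
    reference = np.asarray(reference, dtype=np.float64)
    estimate = np.asarray(estimate, dtype=np.float64)
    mse = np.mean((reference - estimate) ** 2)
    if mse == 0:
        return float("inf")  # identical images
    return 10.0 * np.log10(max_value ** 2 / mse)
```

Higher values indicate a restored image closer to the ground truth. Because the scale is logarithmic, a gain of 10 dB corresponds to a tenfold reduction in mean squared error.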
