LoFTR: Detector-Free Local Feature Matching with Transformers

LoFTR: Detector-Free Local Feature Matching with Transformers

Jiaming Sun extsuperscript{1,2} extsuperscript{*} Zehong Shen extsuperscript{1} extsuperscript{*} Yuang Wang extsuperscript{1} extsuperscript{*} Hujun Bao extsuperscript{1} Xiaowei Zhou extsuperscript{1} extsuperscript{†}

Abstract

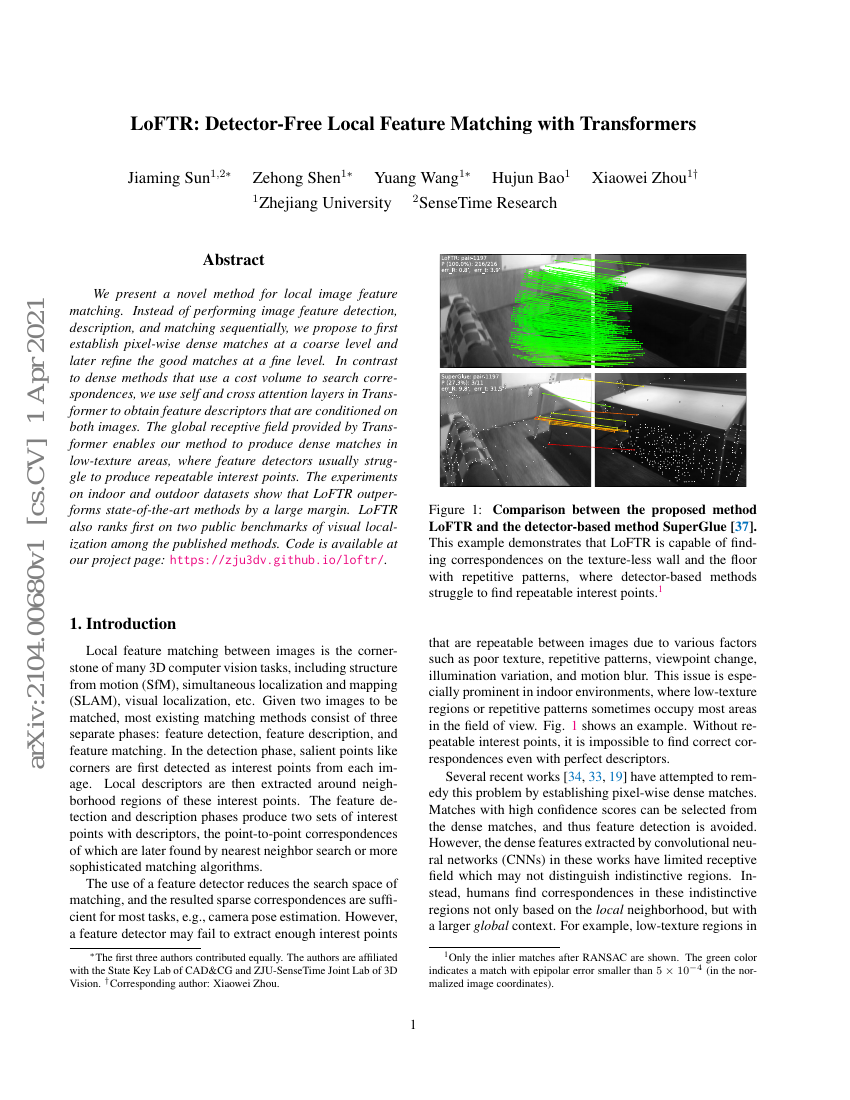

We present a novel method for local image feature matching. Instead ofperforming image feature detection, description, and matching sequentially, wepropose to first establish pixel-wise dense matches at a coarse level and laterrefine the good matches at a fine level. In contrast to dense methods that usea cost volume to search correspondences, we use self and cross attention layersin Transformer to obtain feature descriptors that are conditioned on bothimages. The global receptive field provided by Transformer enables our methodto produce dense matches in low-texture areas, where feature detectors usuallystruggle to produce repeatable interest points. The experiments on indoor andoutdoor datasets show that LoFTR outperforms state-of-the-art methods by alarge margin. LoFTR also ranks first on two public benchmarks of visuallocalization among the published methods.

Code Repositories

Benchmarks

| Benchmark | Methodology | Metrics |

|---|---|---|

| image-matching-on-zeb | LoFTR | Mean AUC@5°: 33.1 |

| pose-estimation-on-inloc | LoFTR | [email protected],10°: 47.5 [email protected],10°: 72.2 [email protected],10°: 84.8 [email protected],10°: 54.2 [email protected],10°: 74.8 [email protected],10°: 82.5 |

| visual-localization-on-aachen-day-night-v1-1 | LoFTR |

Build AI with AI

From idea to launch — accelerate your AI development with free AI co-coding, out-of-the-box environment and best price of GPUs.