Ming Ding†, Zhuoyi Yang†, Wenyi Hong‡, Wendi Zheng†, Chang Zhou†, Da Yin†, Junyang Lin‡, Xu Zou†, Zhou Shao♠, Hongxia Yang‡, Jie Tang†♠

Abstract

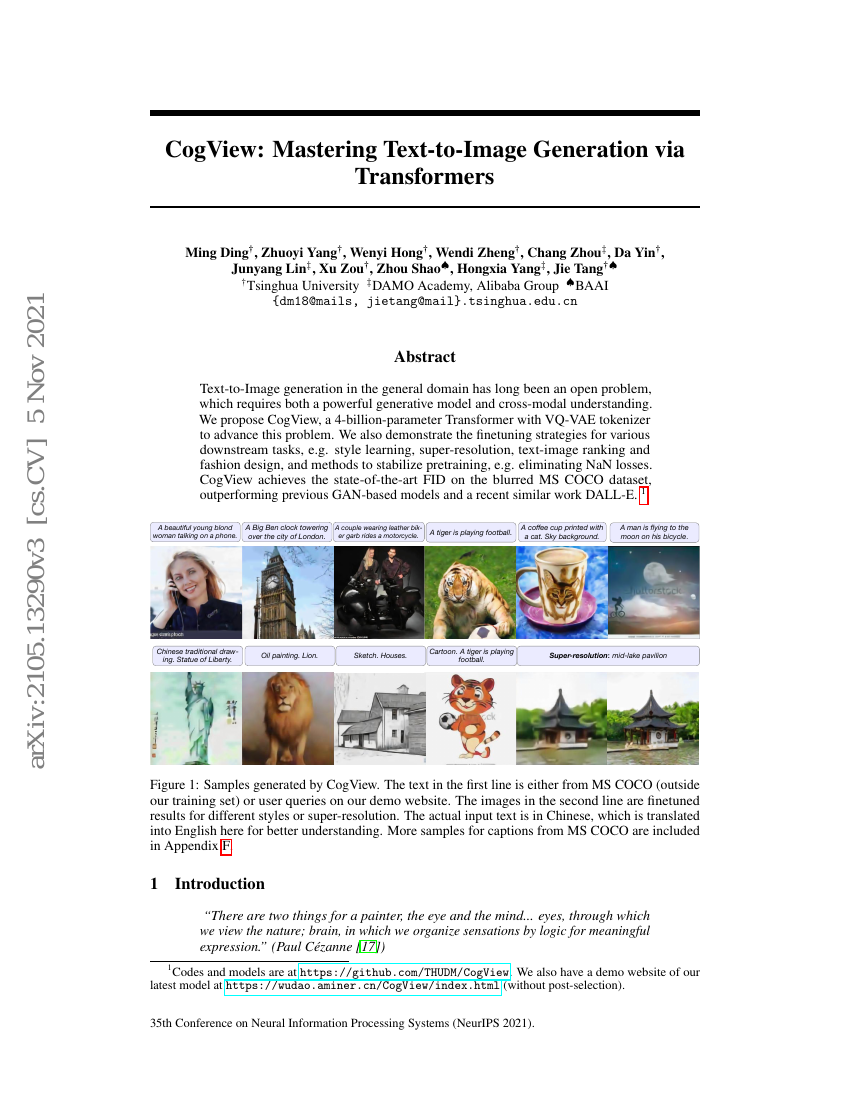

Text-to-Image generation in the general domain has long been an open problem, which requires both a powerful generative model and cross-modal understanding. We propose CogView, a 4-billion-parameter Transformer with VQ-VAE tokenizer to advance this problem. We also demonstrate the finetuning strategies for various downstream tasks, e.g. style learning, super-resolution, text-image ranking and fashion design, and methods to stabilize pretraining, e.g. eliminating NaN losses. CogView achieves the state-of-the-art FID on the blurred MS COCO dataset, outperforming previous GAN-based models and a recent similar work DALL-E.

Code Repositories

Benchmarks

| Benchmark | Methodology | Metrics |

|---|---|---|

| text-to-image-generation-on-coco | CogView | FID: 27.1 FID-1: 19.4 FID-2: 13.9 FID-4: 19.4 FID-8: 23.6 Inception score: 18.2 |

Build AI with AI

From idea to launch — accelerate your AI development with free AI co-coding, out-of-the-box environment and best price of GPUs.