Abstract

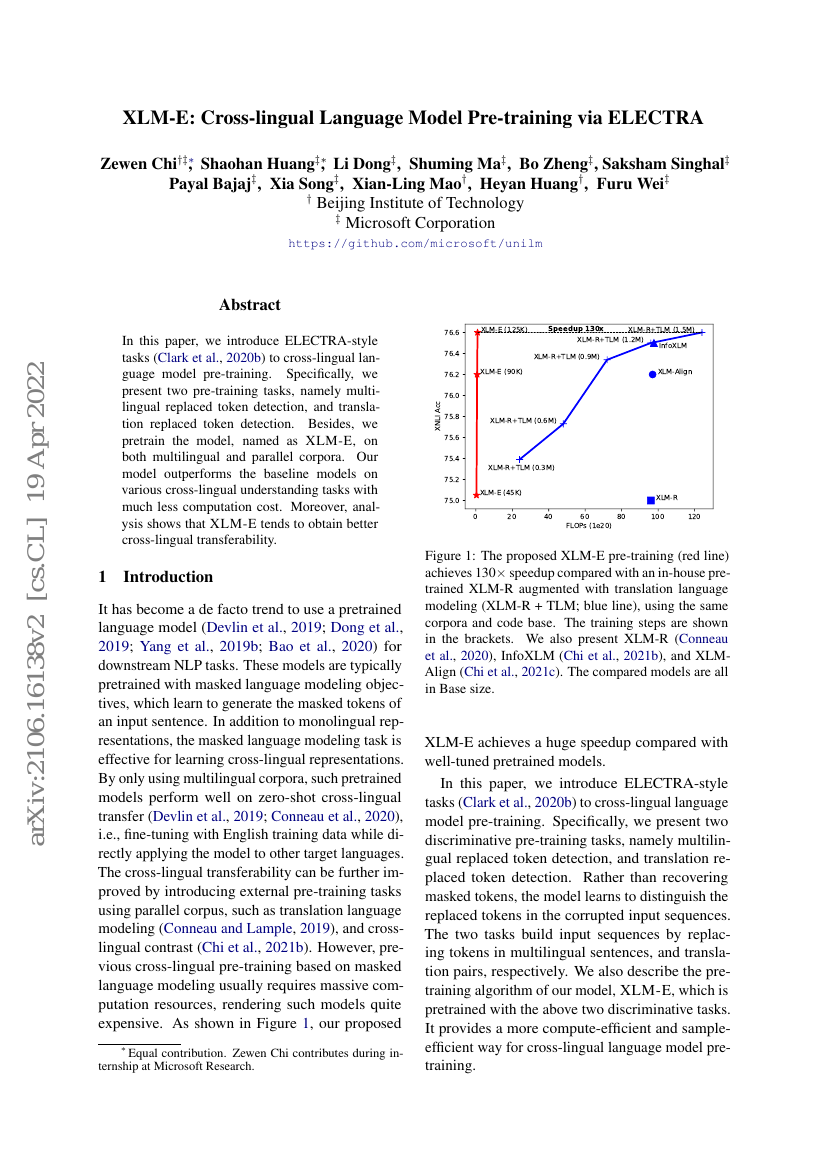

In this paper, we introduce ELECTRA-style tasks to cross-lingual language model pre-training. Specifically, we present two pre-training tasks, namely multilingual replaced token detection, and translation replaced token detection. Besides, we pretrain the model, named as XLM-E, on both multilingual and parallel corpora. Our model outperforms the baseline models on various cross-lingual understanding tasks with much less computation cost. Moreover, analysis shows that XLM-E tends to obtain better cross-lingual transferability.

Code Repositories

Benchmarks

| Benchmark | Methodology | Metrics |

|---|---|---|

| zero-shot-cross-lingual-transfer-on-xtreme | Turing ULR v6 | Avg: 85.5 Question Answering: 77.1 Sentence Retrieval: 94.4 Sentence-pair Classification: 91.0 Structured Prediction: 83.8 |

| zero-shot-cross-lingual-transfer-on-xtreme | Turing ULR v5 | Avg: 84.5 Question Answering: 76.3 Sentence Retrieval: 93.7 Sentence-pair Classification: 90.3 Structured Prediction: 81.7 |

Build AI with AI

From idea to launch — accelerate your AI development with free AI co-coding, out-of-the-box environment and best price of GPUs.