Representation Compensation Networks for Continual Semantic Segmentation


Chang-Bin Zhang, Jia-Wen Xiao, Xialei Liu, Ying-Cong Chen, Ming-Ming Cheng

Abstract

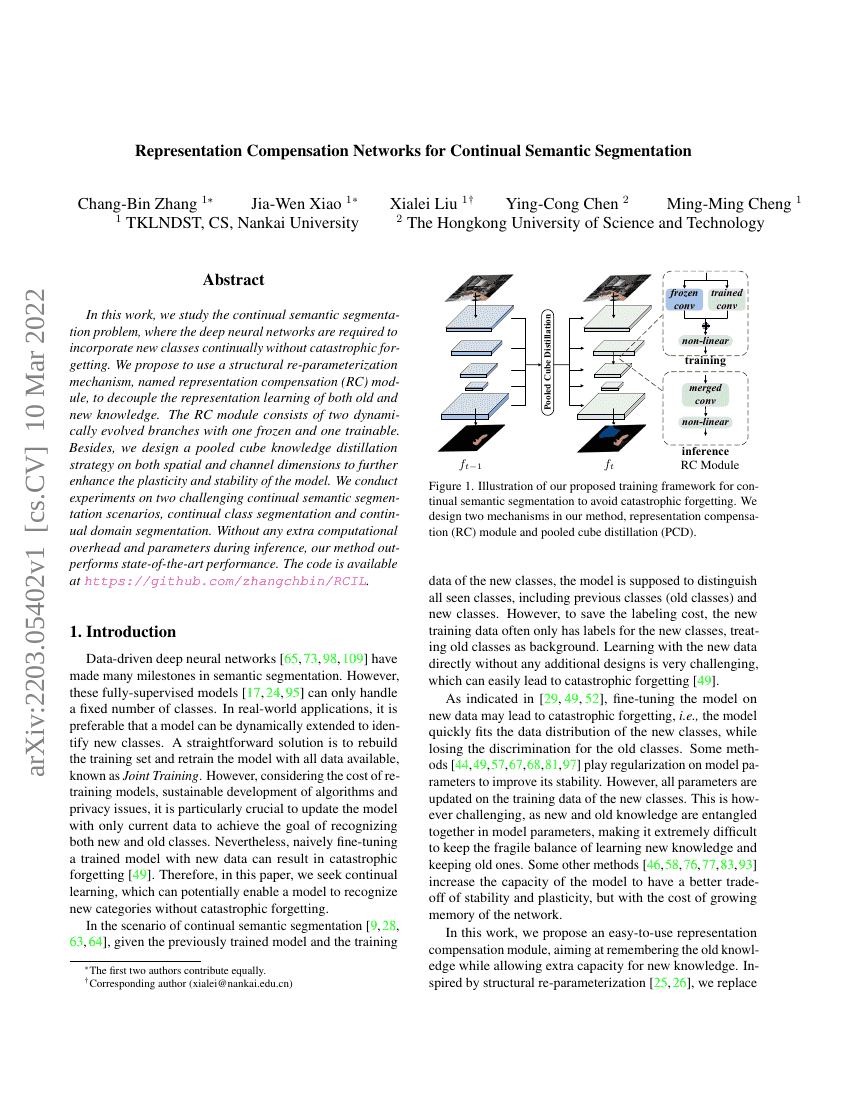

In this work, we study the continual semantic segmentation problem, where deep neural networks are required to incorporate new classes continually without catastrophic forgetting. We propose to use a structural re-parameterization mechanism, named the representation compensation (RC) module, to decouple the representation learning of old and new knowledge. The RC module consists of two dynamically evolved branches, one frozen and one trainable. In addition, we design a pooled cube knowledge distillation strategy on both spatial and channel dimensions to further enhance the plasticity and stability of the model. We conduct experiments on two challenging continual semantic segmentation scenarios: continual class segmentation and continual domain segmentation. Without any extra computational overhead or parameters during inference, our method outperforms state-of-the-art methods. The code is available at https://github.com/zhangchbin/RCIL.
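
The claim of "no extra computational overhead and parameters during inference" rests on structural re-parameterization: two parallel linear branches can be collapsed into a single convolution after training. The sketch below illustrates that identity under stated assumptions; it is not the authors' code. It assumes each branch is a 3x3 convolution followed by inference-mode batch normalization, that the two branch outputs are averaged (for a plain sum, drop the 0.5 factors), and that no non-linearity sits between the branches and the point where they are combined. All function names (`conv2d`, `fold_bn`, `merge_branches`, `random_branch`) are hypothetical.

```python
import numpy as np


def conv2d(x, w, b):
    """Naive zero-padded ('same') 2D cross-correlation with stride 1.

    x: (C_in, H, W) input; w: (C_out, C_in, k, k) kernel with odd k; b: (C_out,) bias.
    """
    c_out, _, k, _ = w.shape
    pad = k // 2
    xp = np.pad(x, ((0, 0), (pad, pad), (pad, pad)))
    _, h, wd = x.shape
    out = np.empty((c_out, h, wd))
    for i in range(h):
        for j in range(wd):
            patch = xp[:, i:i + k, j:j + k]  # (C_in, k, k) window
            out[:, i, j] = np.tensordot(w, patch, axes=([1, 2, 3], [0, 1, 2])) + b
    return out


def batch_norm(y, gamma, beta, mean, var, eps=1e-5):
    """Inference-mode BN applied per channel (axis 0) of a (C, H, W) map."""
    c = (slice(None), None, None)  # broadcast per-channel stats over H and W
    return gamma[c] * (y - mean[c]) / np.sqrt(var[c] + eps) + beta[c]


def fold_bn(w, b, gamma, beta, mean, var, eps=1e-5):
    """Fold an inference-mode BN that follows a conv into the conv's weight and bias."""
    scale = gamma / np.sqrt(var + eps)
    return w * scale[:, None, None, None], (b - mean) * scale + beta


def merge_branches(w_frozen, b_frozen, w_train, b_train):
    """Averaging two parallel linear branches equals one conv with averaged parameters."""
    return 0.5 * (w_frozen + w_train), 0.5 * (b_frozen + b_train)


rng = np.random.default_rng(0)


def random_branch(c_in=3, c_out=4, k=3):
    """Hypothetical conv + BN branch with random parameters (gamma, beta, mean, var)."""
    w, b = rng.standard_normal((c_out, c_in, k, k)), np.zeros(c_out)
    bn = (rng.uniform(0.5, 1.5, c_out), rng.standard_normal(c_out),
          rng.standard_normal(c_out), rng.uniform(0.5, 1.5, c_out))
    return w, b, bn


x = rng.standard_normal((3, 6, 6))
w0, b0, bn0 = random_branch()  # frozen branch (old knowledge)
w1, b1, bn1 = random_branch()  # trainable branch (new knowledge)

# Training-time forward pass: average of the two conv + BN branches.
y_two = 0.5 * (batch_norm(conv2d(x, w0, b0), *bn0) + batch_norm(conv2d(x, w1, b1), *bn1))

# Inference-time: fold BN into each branch, then merge into a single conv.
w_m, b_m = merge_branches(*fold_bn(w0, b0, *bn0), *fold_bn(w1, b1, *bn1))
y_one = conv2d(x, w_m, b_m)
print(np.allclose(y_two, y_one))  # True
```

Because the merged layer has exactly the shape of one convolution, the deployed network costs the same as a single-branch model. One plausible reading of "dynamically evolved" is that the merged weights then serve as the frozen branch for the next incremental step, with a fresh trainable branch attached; the abstract alone does not confirm this detail.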
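
The abstract names a pooled cube knowledge distillation strategy on spatial and channel dimensions but does not give its formula. The following is only a simplified sketch of the general idea: features from the frozen old model and the current model are average-pooled, and their difference is penalized. The separate (rather than joint 3D) spatial and channel pooling, the window sizes `(1, 2, 4)`, the channel group size `4`, and the mean-squared-error penalty are all assumptions, and the function names are hypothetical.

```python
import numpy as np


def avg_pool_spatial(f, s):
    """Non-overlapping s x s average pooling of a (C, H, W) map; remainder is cropped.

    Assumes H and W are at least s.
    """
    c, h, w = f.shape
    h2, w2 = h // s, w // s
    f = f[:, :h2 * s, :w2 * s]
    return f.reshape(c, h2, s, w2, s).mean(axis=(2, 4))


def avg_pool_channel(f, g):
    """Average consecutive groups of g channels of a (C, H, W) map; remainder is cropped."""
    c, h, w = f.shape
    c2 = c // g
    return f[:c2 * g].reshape(c2, g, h, w).mean(axis=1)


def pooled_distill_loss(f_old, f_new, spatial_windows=(1, 2, 4), channel_group=4):
    """Hypothetical pooled distillation loss between old-model features f_old
    and current-model features f_new, both (C, H, W).

    Window sizes, channel group size and the MSE penalty are assumptions.
    """
    loss = 0.0
    for s in spatial_windows:  # multi-scale pooling along the spatial dimensions
        diff = avg_pool_spatial(f_old, s) - avg_pool_spatial(f_new, s)
        loss += np.mean(diff ** 2)
    # pooling along the channel dimension
    diff = avg_pool_channel(f_old, channel_group) - avg_pool_channel(f_new, channel_group)
    loss += np.mean(diff ** 2)
    return loss


rng = np.random.default_rng(0)
f_old = rng.standard_normal((8, 16, 16))
print(pooled_distill_loss(f_old, f_old))             # 0.0
print(pooled_distill_loss(f_old, f_old + 0.1) > 0)   # True
```

Pooling before comparing is what relaxes the constraint: the current model must match the old model's averaged responses rather than every individual activation, which leaves room to learn new classes (plasticity) while still anchoring the representation (stability).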


Benchmarks

| Benchmark | Method | Metric | Score (%) |
|---|---|---|---|
| disjoint-10-1-on-pascal-voc-2012 | RCNet-101 | mIoU | 18.2 |
| disjoint-15-1-on-pascal-voc-2012 | RCNet-101 | mIoU | 54.7 |
| disjoint-15-5-on-pascal-voc-2012 | RCNet-101 | mIoU | 67.3 |
| overlapped-10-1-on-pascal-voc-2012 | RCNet-101 | mIoU | 34.3 |
| overlapped-100-10-on-ade20k | RCNet-101 | mIoU (test) | 32.1 |
| overlapped-100-5-on-ade20k | RCNet-101 | mIoU | 29.6 |
| overlapped-100-50-on-ade20k | RCNet-101 | mIoU | 34.5 |
| overlapped-15-1-on-pascal-voc-2012 | RCNet-101 | mIoU | 59.4 |
| overlapped-15-5-on-pascal-voc-2012 | RCNet-101 | mIoU (val) | 72.4 |
| overlapped-50-50-on-ade20k | RCNet-101 | mIoU | 32.5 |
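
Benchmark names such as `15-1` follow the convention commonly used in continual semantic segmentation: the model first learns `base` classes, then `increment` new classes at each following step. PASCAL VOC 2012 has 20 foreground classes and ADE20K has 150. In the usual protocol, "disjoint" means images at a step contain only previously seen and current classes, while "overlapped" means they may also contain future classes, labeled as background. The sketch below only derives the class schedule; the helper name and the assumption that classes are numbered 1..N with 0 as background are hypothetical.

```python
def class_schedule(setting, num_classes):
    """Split foreground classes 1..num_classes into incremental steps.

    setting: "<base>-<increment>", e.g. "15-1": learn `base` classes first,
    then `increment` new classes per step. Class 0 is assumed to be background.
    """
    base, inc = (int(v) for v in setting.split("-"))
    if not 0 < base <= num_classes or inc <= 0 or (num_classes - base) % inc:
        raise ValueError(f"setting {setting!r} does not evenly split {num_classes} classes")
    steps = [list(range(1, base + 1))]  # initial step with the base classes
    for start in range(base + 1, num_classes + 1, inc):
        steps.append(list(range(start, start + inc)))  # one incremental step
    return steps


# Settings from the table: (name, number of foreground classes in the dataset).
settings = [("15-1", 20), ("10-1", 20), ("15-5", 20), ("100-50", 150),
            ("100-10", 150), ("100-5", 150), ("50-50", 150)]
for name, n in settings:
    print(name, len(class_schedule(name, n)), "steps")
```

Settings with many small steps (for example `10-1`, eleven steps in total) accumulate the most forgetting, which is consistent with those rows having the lowest scores in the table.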
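
All scores above are mean intersection-over-union (mIoU) values in percent. A minimal sketch of the standard computation follows: accumulate a confusion matrix, compute TP / (TP + FP + FN) per class, and average. The ignore label `255` and averaging only over classes with a non-empty union are assumptions; the benchmarks' exact evaluation protocol may differ.

```python
import numpy as np


def confusion_matrix(pred, gt, num_classes, ignore_index=255):
    """Rows index ground-truth classes, columns index predicted classes."""
    keep = gt != ignore_index  # drop unlabeled pixels
    idx = num_classes * gt[keep].astype(np.int64) + pred[keep].astype(np.int64)
    return np.bincount(idx, minlength=num_classes ** 2).reshape(num_classes, num_classes)


def mean_iou(conf):
    """Mean of per-class TP / (TP + FP + FN) over classes with a non-empty union."""
    tp = np.diag(conf).astype(np.float64)
    union = conf.sum(axis=0) + conf.sum(axis=1) - tp  # (TP + FP) + (TP + FN) - TP
    valid = union > 0
    return float((tp[valid] / union[valid]).mean())


gt = np.array([[0, 0, 1], [1, 2, 2]])
pred = np.array([[0, 1, 1], [1, 2, 0]])
conf = confusion_matrix(pred, gt, num_classes=3)
print(mean_iou(conf))  # 0.5
```

Here the per-class IoUs are 1/3, 2/3 and 1/2, so the mean is 0.5; multiplying by 100 gives the percentage form used in the table.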
