Arij Bouazizi Adrian Holzbock Ulrich Kressel Klaus Dietmayer Vasileios Belagiannis

Abstract

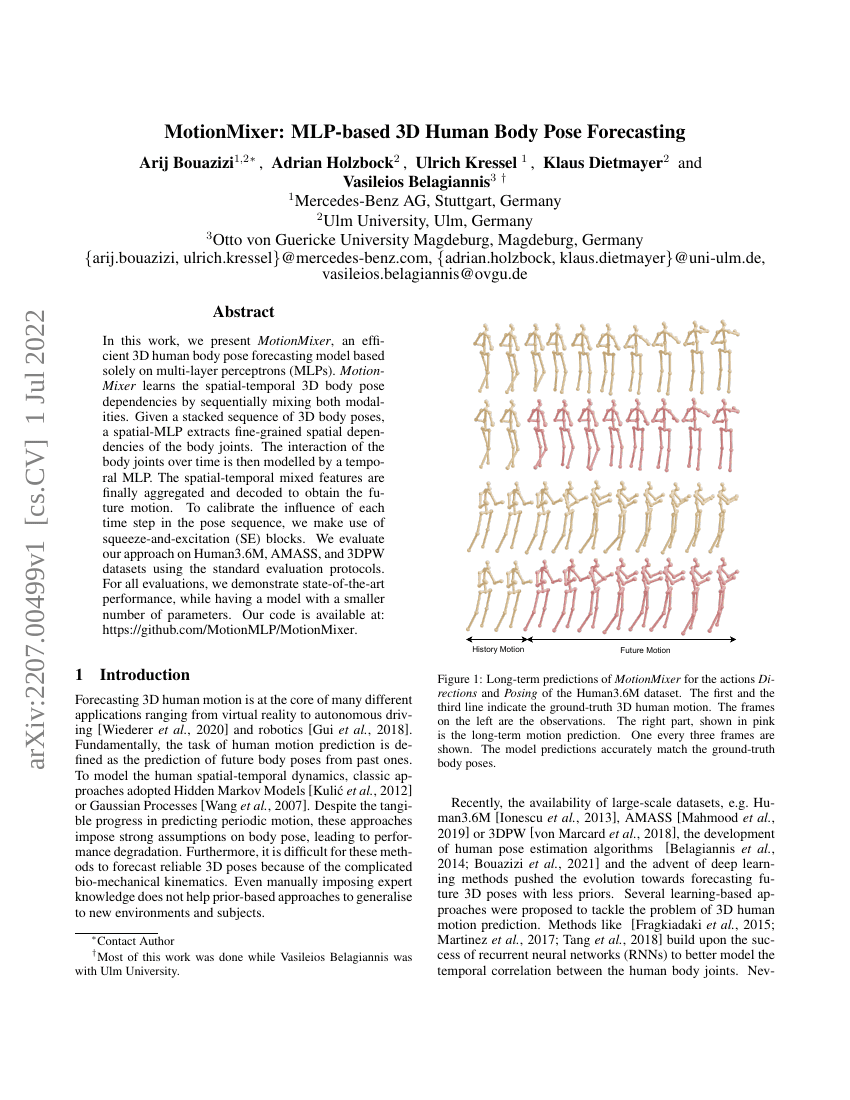

In this work, we present MotionMixer, an efficient 3D human body pose forecasting model based solely on multi-layer perceptrons (MLPs). MotionMixer learns the spatial-temporal dependencies of 3D body poses by sequentially mixing the spatial and temporal dimensions. Given a stacked sequence of 3D body poses, a spatial-MLP extracts fine-grained spatial dependencies among the body joints. The interaction of the body joints over time is then modelled by a temporal-MLP. The spatial-temporal mixed features are finally aggregated and decoded to obtain the future motion. To calibrate the influence of each time step in the pose sequence, we use squeeze-and-excitation (SE) blocks. We evaluate our approach on the Human3.6M, AMASS, and 3DPW datasets using the standard evaluation protocols. In all evaluations, we achieve state-of-the-art performance while using fewer parameters than competing models. Our code is available at: https://github.com/MotionMLP/MotionMixer
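The mixing order described in the abstract (spatial-MLP, then temporal-MLP, SE reweighting of time steps, then decoding) can be sketched in pure Python. This is a minimal, non-authoritative sketch under several assumptions not stated in the abstract: the input is a T × 3J matrix with one pose per row, each MLP has two dense layers with GELU, residual connections are used as in MLP-Mixer, the SE block squeezes each time step over its features, and the decoder is a single linear layer along the time axis. Layer normalisation and training are omitted, and all class names, sizes (`T_IN`, `T_OUT`, `J`, hidden width) and random weights are hypothetical. The authors' actual implementation is in the linked repository.

```python
import math
import random

Matrix = list[list[float]]  # row-major: rows x cols


def transpose(m: Matrix) -> Matrix:
    return [list(col) for col in zip(*m)]


def add(a: Matrix, b: Matrix) -> Matrix:
    # Element-wise sum, used for the assumed residual connections.
    return [[x + y for x, y in zip(ra, rb)] for ra, rb in zip(a, b)]


def gelu(x: float) -> float:
    # Exact GELU: x * Phi(x), where Phi is the standard normal CDF.
    return 0.5 * x * (1.0 + math.erf(x / math.sqrt(2.0)))


def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


class Linear:
    """Dense layer applied independently to every row of a matrix."""

    def __init__(self, d_in: int, d_out: int, rng: random.Random):
        bound = 1.0 / math.sqrt(d_in)
        self.w = [[rng.uniform(-bound, bound) for _ in range(d_out)] for _ in range(d_in)]
        self.b = [0.0] * d_out

    def __call__(self, x: Matrix) -> Matrix:
        cols = list(zip(*self.w))  # d_out weight columns, each of length d_in
        return [[sum(a * w for a, w in zip(row, col)) + b
                 for col, b in zip(cols, self.b)] for row in x]


class MLP:
    """Two dense layers with GELU in between, mixing the entries of each row."""

    def __init__(self, dim: int, hidden: int, rng: random.Random):
        self.fc1, self.fc2 = Linear(dim, hidden, rng), Linear(hidden, dim, rng)

    def __call__(self, x: Matrix) -> Matrix:
        return self.fc2([[gelu(v) for v in row] for row in self.fc1(x)])


class SqueezeExcite:
    """Rescales each of the T rows (time steps) of a T x D matrix by a learned weight."""

    def __init__(self, t: int, reduction: int, rng: random.Random):
        r = max(1, t // reduction)
        self.fc1, self.fc2 = Linear(t, r, rng), Linear(r, t, rng)

    def __call__(self, x: Matrix) -> Matrix:
        s = [[sum(row) / len(row) for row in x]]  # squeeze: 1 x T time-step summary
        z = [[max(0.0, v) for v in self.fc1(s)[0]]]  # bottleneck + ReLU
        w = [sigmoid(v) for v in self.fc2(z)[0]]  # excite: one weight in (0, 1) per step
        return [[v * w[t] for v in row] for t, row in enumerate(x)]


class MixerBlock:
    def __init__(self, t: int, d: int, hidden: int, rng: random.Random):
        self.spatial, self.se_s = MLP(d, hidden, rng), SqueezeExcite(t, 2, rng)
        self.temporal, self.se_t = MLP(t, hidden, rng), SqueezeExcite(t, 2, rng)

    def __call__(self, x: Matrix) -> Matrix:
        # Spatial mixing: each row is one pose (D = 3J coordinates).
        x = add(x, self.se_s(self.spatial(x)))
        # Temporal mixing: transpose so each row is one coordinate across T steps.
        return add(x, self.se_t(transpose(self.temporal(transpose(x)))))


def forecast(history: Matrix, blocks: list, head: Linear) -> Matrix:
    x = history
    for block in blocks:
        x = block(x)
    # Decode: a linear map along the time axis turns T_IN steps into T_OUT steps.
    return transpose(head(transpose(x)))


rng = random.Random(0)
T_IN, T_OUT, J = 10, 25, 22  # hypothetical sizes
D = 3 * J
blocks = [MixerBlock(T_IN, D, 32, rng) for _ in range(2)]
head = Linear(T_IN, T_OUT, rng)
history = [[rng.gauss(0.0, 1.0) for _ in range(D)] for _ in range(T_IN)]
future = forecast(history, blocks, head)
print(len(future), len(future[0]))  # 25 66
```

In this sketch the spatial-MLP only mixes entries within a row (the joints of one pose) and the temporal-MLP only mixes entries within a column (one coordinate over time), so each weight matrix is sized by D or T rather than by T × D, which is one way an all-MLP design can stay small.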


Benchmarks

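| Benchmark | Methodology | Metric | Value |
|---|---|---|---|
| human-pose-forecasting-on-human36m | MotionMixer | Average MPJPE (mm) @ 1000 ms | 111.0 |
| human-pose-forecasting-on-human36m | MotionMixer | Average MPJPE (mm) @ 400 ms | 59.3 |
| human-pose-forecasting-on-human36m | MotionMixer | MAR, walking, 1000 ms | 0.73 |
| human-pose-forecasting-on-human36m | MotionMixer | MAR, walking, 400 ms | 0.58 |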
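MPJPE (mean per joint position error), the main metric in the table, is the average Euclidean distance in millimetres between predicted and ground-truth 3D joint positions. The sketch below computes it for a single frame and averages it over sequences at one prediction horizon. The function names are hypothetical, and the mapping of 400 ms and 1000 ms to predicted frame indices 9 and 24 assumes 25 fps sequences; the exact averaging over actions and samples follows the paper's evaluation protocol, which this sketch does not reproduce.

```python
import math

Joint = tuple[float, float, float]  # (x, y, z) position in millimetres
Pose = list[Joint]  # one frame: all J joints


def mpjpe(pred: Pose, target: Pose) -> float:
    """Mean Euclidean distance between corresponding joints of one frame."""
    if not pred or len(pred) != len(target):
        raise ValueError("poses must be non-empty and have the same number of joints")
    return sum(math.dist(p, g) for p, g in zip(pred, target)) / len(pred)


def mpjpe_at_horizon(preds: list[list[Pose]], targets: list[list[Pose]], frame: int) -> float:
    """Average MPJPE at one predicted frame index over a set of sequences."""
    errors = [mpjpe(p[frame], g[frame]) for p, g in zip(preds, targets)]
    return sum(errors) / len(errors)


# Assumed 25 fps: 400 ms and 1000 ms are the 10th and 25th predicted frames.
FRAME_400MS, FRAME_1000MS = 9, 24

pred = [(0.0, 0.0, 0.0), (10.0, 0.0, 0.0)]
target = [(3.0, 4.0, 0.0), (10.0, 0.0, 0.0)]
print(mpjpe(pred, target))  # 2.5
```

Here the first joint is off by a 3-4-5 triangle (5 mm) and the second is exact, so the per-frame mean is 2.5 mm.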
