Hongwei Yi Hualin Liang Yifei Liu Qiong Cao Yandong Wen Timo Bolkart Dacheng Tao Michael J. Black

Abstract

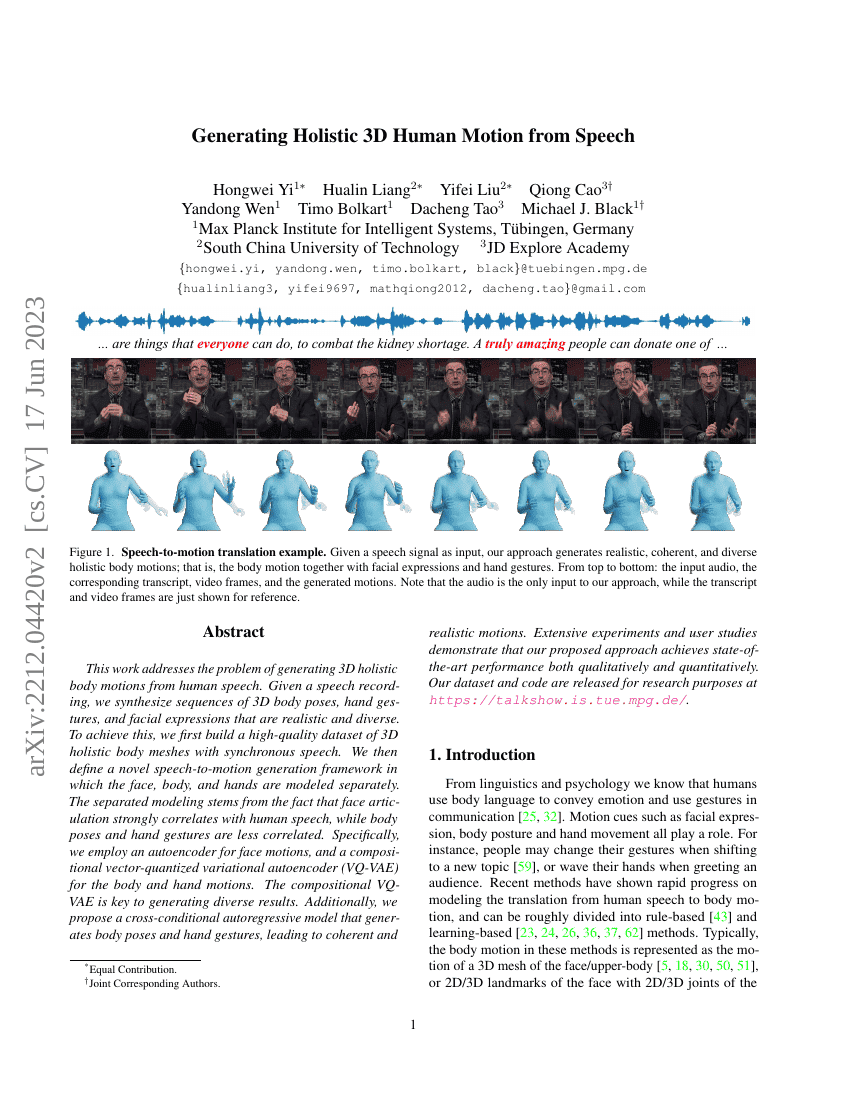

This work addresses the problem of generating 3D holistic body motions fromhuman speech. Given a speech recording, we synthesize sequences of 3D bodyposes, hand gestures, and facial expressions that are realistic and diverse. Toachieve this, we first build a high-quality dataset of 3D holistic body mesheswith synchronous speech. We then define a novel speech-to-motion generationframework in which the face, body, and hands are modeled separately. Theseparated modeling stems from the fact that face articulation stronglycorrelates with human speech, while body poses and hand gestures are lesscorrelated. Specifically, we employ an autoencoder for face motions, and acompositional vector-quantized variational autoencoder (VQ-VAE) for the bodyand hand motions. The compositional VQ-VAE is key to generating diverseresults. Additionally, we propose a cross-conditional autoregressive model thatgenerates body poses and hand gestures, leading to coherent and realisticmotions. Extensive experiments and user studies demonstrate that our proposedapproach achieves state-of-the-art performance both qualitatively andquantitatively. Our novel dataset and code will be released for researchpurposes at https://talkshow.is.tue.mpg.de.

Code Repositories

Benchmarks

| Benchmark | Methodology | Metrics |

|---|---|---|

| 3d-face-animation-on-beat2 | TalkShow | MSE: 7.791 |

| gesture-generation-on-beat2 | TalkShow | FGD: 0.6209 |

Build AI with AI

From idea to launch — accelerate your AI development with free AI co-coding, out-of-the-box environment and best price of GPUs.