Lanqing Guo, Siyu Huang, Ding Liu, Hao Cheng, Bihan Wen

Abstract

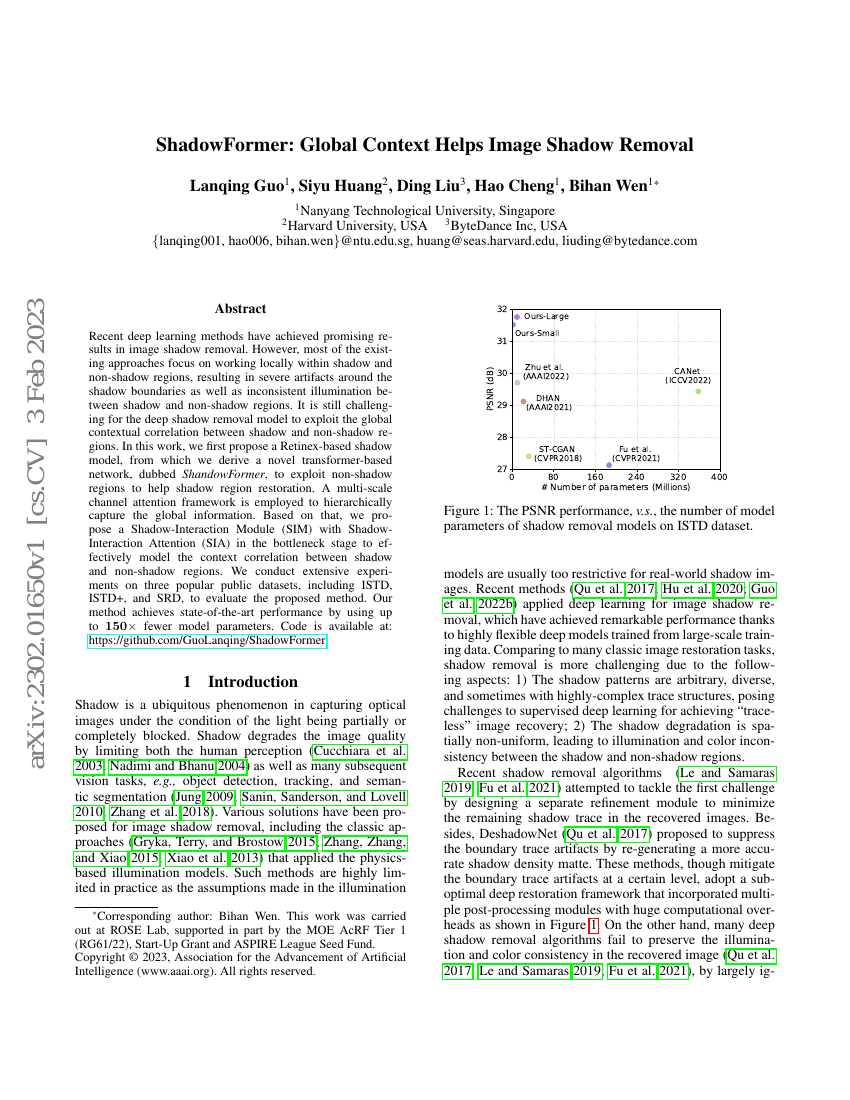

Recent deep learning methods have achieved promising results in image shadow removal. However, most existing approaches work locally within shadow and non-shadow regions. This leads to severe artifacts around shadow boundaries and to inconsistent illumination between shadow and non-shadow regions. It remains challenging for deep shadow removal models to exploit the global contextual correlation between shadow and non-shadow regions. In this work, we first propose a Retinex-based shadow model, from which we derive a novel transformer-based network, dubbed ShadowFormer, that exploits non-shadow regions to help restore shadow regions. A multi-scale channel attention framework is employed to hierarchically capture global information. Building on it, we propose a Shadow-Interaction Module (SIM) with Shadow-Interaction Attention (SIA) in the bottleneck stage to effectively model the contextual correlation between shadow and non-shadow regions. We conduct extensive experiments on three popular public datasets, ISTD, ISTD+, and SRD, to evaluate the proposed method. Our method achieves state-of-the-art performance while using up to 150× fewer model parameters.
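The abstract describes SIA only at a high level and does not give its formula. As a rough illustration of letting shadow tokens draw on non-shadow context, the sketch below reweights an ordinary scaled dot-product attention map with a shadow mask: cross-region pairs (shadow to non-shadow) keep full weight, and same-region pairs are scaled by a factor `sigma`. The function name `mask_guided_attention`, the `sigma` parameter, the soft-mask interpolation, and the row renormalization are all assumptions made for this example. It is a single-head, pure-Python toy, not ShadowFormer's implementation.

```python
import math


def softmax(row):
    """Numerically stable softmax over a list of floats."""
    m = max(row)
    exps = [math.exp(x - m) for x in row]
    s = sum(exps)
    return [e / s for e in exps]


def mask_guided_attention(q, k, v, mask, sigma=0.5):
    """Hypothetical mask-reweighted attention (illustration only, not the paper's SIA).

    q, k, v: lists of N token vectors (q and k of length d, v of length c).
    mask:    list of N floats in [0, 1]; 1.0 = shadow token, 0.0 = non-shadow token.
    sigma:   assumed weight for same-region pairs; cross-region pairs get weight 1.
    """
    d = len(q[0])
    out = []
    for i in range(len(q)):
        # Standard scaled dot-product scores for query token i.
        scores = [sum(a * b for a, b in zip(q[i], k[j])) / math.sqrt(d)
                  for j in range(len(k))]
        attn = softmax(scores)
        weights = []
        for j, a in enumerate(attn):
            # "Cross-region" degree: 1 when exactly one of the two tokens is shadow.
            cross = mask[i] * (1.0 - mask[j]) + (1.0 - mask[i]) * mask[j]
            weights.append(a * (sigma + (1.0 - sigma) * cross))
        # Renormalize so each row of weights sums to 1 (a choice made for this sketch).
        total = sum(weights)
        weights = [w / total for w in weights]
        out.append([sum(w * v[j][ch] for j, w in enumerate(weights))
                    for ch in range(len(v[0]))])
    return out


# Tiny usage example: token 0 is shadow, tokens 1 and 2 are non-shadow.
q = k = [[0.0], [0.0], [0.0]]
v = [[1.0], [2.0], [3.0]]
out = mask_guided_attention(q, k, v, [1.0, 0.0, 0.0], sigma=0.5)
```

With `sigma=1.0` the mask has no effect and the function reduces to plain softmax attention. Smaller values make each token draw more of its output from the other region, which is the intuition the abstract gives for using non-shadow regions to restore shadow regions.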

Benchmarks

| Benchmark | Methodology | MAE | RMSE | PSNR (dB) | SSIM | LPIPS |
|---|---|---|---|---|---|---|
| shadow-removal-on-istd | ShadowFormer | 4.79 | | | | |
| shadow-removal-on-istd-1 | ShadowFormer (AAAI 2023) (512x512) | | 3.06 | 28.07 | 0.847 | 0.204 |
| shadow-removal-on-istd-1 | ShadowFormer (AAAI 2023) (256x256) | | 3.45 | 26.55 | 0.728 | 0.35 |
| shadow-removal-on-srd | ShadowFormer (AAAI 2023) (512x512) | | 3.9 | 25.6 | 0.819 | 0.228 |
| shadow-removal-on-srd | ShadowFormer (AAAI 2023) (256x256) | | 4.44 | 24.28 | 0.715 | 0.348 |
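For reference, lower MAE, RMSE, and LPIPS are better, and higher PSNR and SSIM are better. The sketch below shows the textbook per-pixel definitions of MAE, RMSE, and PSNR on flattened pixel lists with an assumed 8-bit range (`max_val=255`). The exact evaluation protocol behind the table (color space, masking, resizing to 256x256 or 512x512) is not stated here and may differ. SSIM and LPIPS are omitted because they need windowed statistics and a learned network, respectively.

```python
import math


def _check(pred, target):
    """Basic input validation for equal-length, non-empty pixel lists."""
    if len(pred) != len(target) or not pred:
        raise ValueError("pred and target must be non-empty and the same length")


def mae(pred, target):
    """Mean absolute error over flattened pixel values."""
    _check(pred, target)
    return sum(abs(p - t) for p, t in zip(pred, target)) / len(pred)


def rmse(pred, target):
    """Root mean squared error over flattened pixel values."""
    _check(pred, target)
    return math.sqrt(sum((p - t) ** 2 for p, t in zip(pred, target)) / len(pred))


def psnr(pred, target, max_val=255.0):
    """Peak signal-to-noise ratio in dB; max_val is the assumed dynamic range."""
    _check(pred, target)
    mse = sum((p - t) ** 2 for p, t in zip(pred, target)) / len(pred)
    if mse == 0:
        return float("inf")  # identical images
    return 10.0 * math.log10(max_val ** 2 / mse)


pred = [0.0, 10.0, 20.0, 30.0]
target = [0.0, 0.0, 20.0, 40.0]
```

On this toy input the absolute errors are 0, 10, 0, 10, so MAE is 5 and the mean squared error is 50.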