Stimulating Diffusion Model for Image Denoising via Adaptive Embedding and Ensembling

Tong Li; Hansen Feng; Lizhi Wang, Member, IEEE; Lin Zhu, Member, IEEE; Zhiwei Xiong, Member, IEEE; Hua Huang, Senior Member, IEEE

Abstract

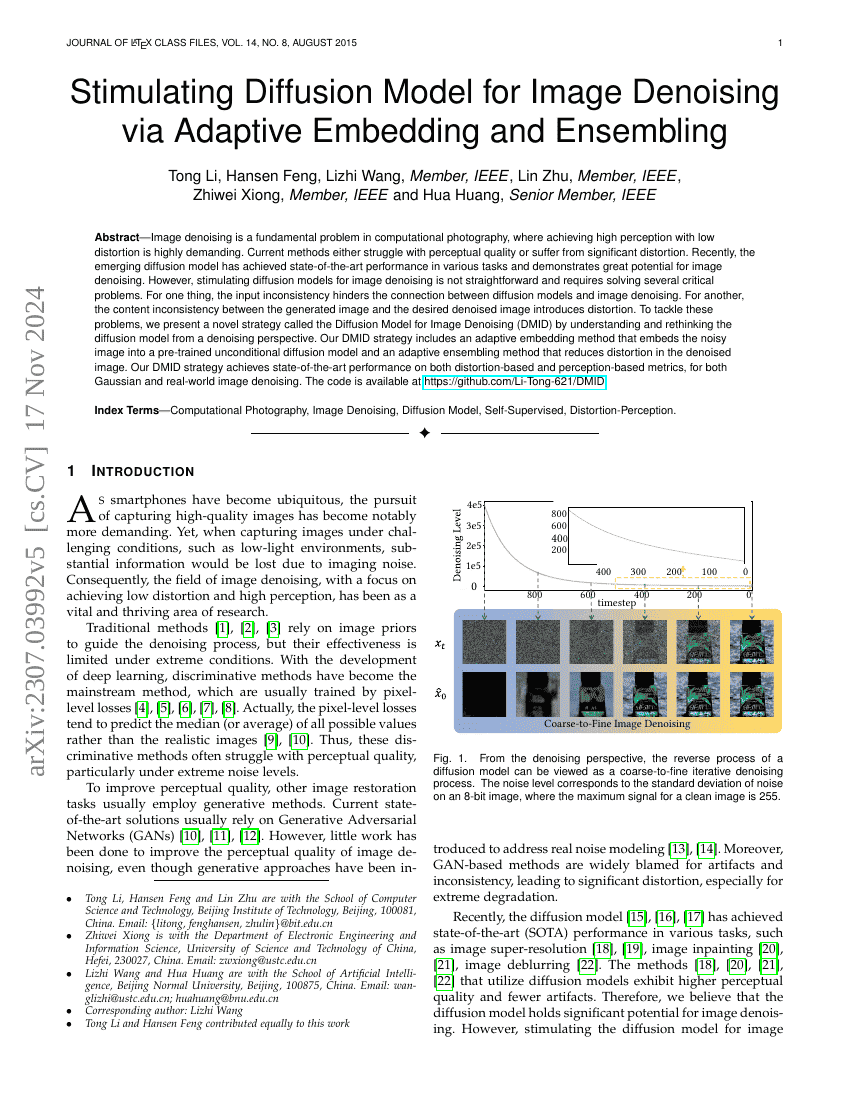

Image denoising is a fundamental problem in computational photography, where achieving high perceptual quality with low distortion is highly demanding. Current methods either struggle with perceptual quality or suffer from significant distortion. Recently, the emerging diffusion model has achieved state-of-the-art performance in various tasks and shows great potential for image denoising. However, stimulating diffusion models for image denoising is not straightforward and requires solving several critical problems. For one thing, the input inconsistency hinders the connection between diffusion models and image denoising. For another, the content inconsistency between the generated image and the desired denoised image introduces distortion. To tackle these problems, we present a novel strategy called the Diffusion Model for Image Denoising (DMID), developed by understanding and rethinking the diffusion model from a denoising perspective. Our DMID strategy includes an adaptive embedding method that embeds the noisy image into a pre-trained unconditional diffusion model and an adaptive ensembling method that reduces distortion in the denoised image. Our DMID strategy achieves state-of-the-art performance on both distortion-based and perception-based metrics, for both Gaussian and real-world image denoising. The code is available at https://github.com/Li-Tong-621/DMID.
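
The abstract does not spell out the embedding rule, so the following is only a minimal sketch of one common way to place a Gaussian-noisy image y = x + σn onto a DDPM-style trajectory: choose the timestep t whose noise-to-signal ratio sqrt((1 - ᾱ_t) / ᾱ_t) is closest to σ, then scale y by sqrt(ᾱ_t) so it has the form sqrt(ᾱ_t)·x + sqrt(1 - ᾱ_t)·n. It assumes a linear β schedule (1e-4 to 0.02 over 1000 steps) and σ expressed on the model's [-1, 1] pixel scale, ignores real-world noise, and uses plain pixel-wise averaging as a stand-in for the ensembling step. All function names are hypothetical; this is not the DMID implementation, which lives in the linked repository.

```python
import numpy as np


def linear_alpha_bar(num_steps=1000, beta_start=1e-4, beta_end=0.02):
    """alpha_bar_t = prod_{s<=t} (1 - beta_s) for an assumed linear DDPM schedule."""
    betas = np.linspace(beta_start, beta_end, num_steps)
    return np.cumprod(1.0 - betas)


def match_timestep(sigma, alpha_bar):
    """Return the step t whose ratio sqrt((1 - alpha_bar_t) / alpha_bar_t) is closest to sigma."""
    ratio = np.sqrt((1.0 - alpha_bar) / alpha_bar)
    return int(np.argmin(np.abs(ratio - sigma)))


def embed_noisy_image(y, sigma, alpha_bar):
    """Map y = x + sigma * n onto the diffusion trajectory (hypothetical helper).

    Scaling by sqrt(alpha_bar_t) gives sqrt(alpha_bar_t) * x + sqrt(alpha_bar_t) * sigma * n,
    which approximates x_t = sqrt(alpha_bar_t) * x + sqrt(1 - alpha_bar_t) * n at the matched t.
    """
    t = match_timestep(sigma, alpha_bar)
    return t, np.sqrt(alpha_bar[t]) * y


def ensemble_mean(samples):
    """Pixel-wise mean of several denoised samples (plain averaging, not an adaptive rule)."""
    return np.mean(np.stack(samples, axis=0), axis=0)


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    alpha_bar = linear_alpha_bar()
    x = rng.uniform(-1.0, 1.0, size=(64, 64))  # stand-in "clean" image in [-1, 1]
    sigma = 2 * 25 / 255                        # noise level 25/255 rescaled to the [-1, 1] range
    y = x + sigma * rng.standard_normal(x.shape)
    t, x_t = embed_noisy_image(y, sigma, alpha_bar)
    print("matched timestep:", t)
    # A pre-trained model would now run its reverse process from step t on x_t.
    # Here, fake "samples" are the clean image plus independent residual errors.
    samples = [x + 0.05 * rng.standard_normal(x.shape) for _ in range(8)]
    print("single-sample MSE:", np.mean((samples[0] - x) ** 2))
    print("ensemble MSE:", np.mean((ensemble_mean(samples) - x) ** 2))
```

Starting the reverse process at the matched t, rather than at the final step, is what lets an unconditional model act as a denoiser in this sketch; averaging independent samples lowers the variance of their errors, which is why an ensemble can trade a little perceptual sharpness for lower distortion.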
