Mathieu Seraphim, Alexis Lechervy, Florian Yger, Luc Brun, Olivier Etard

Abstract

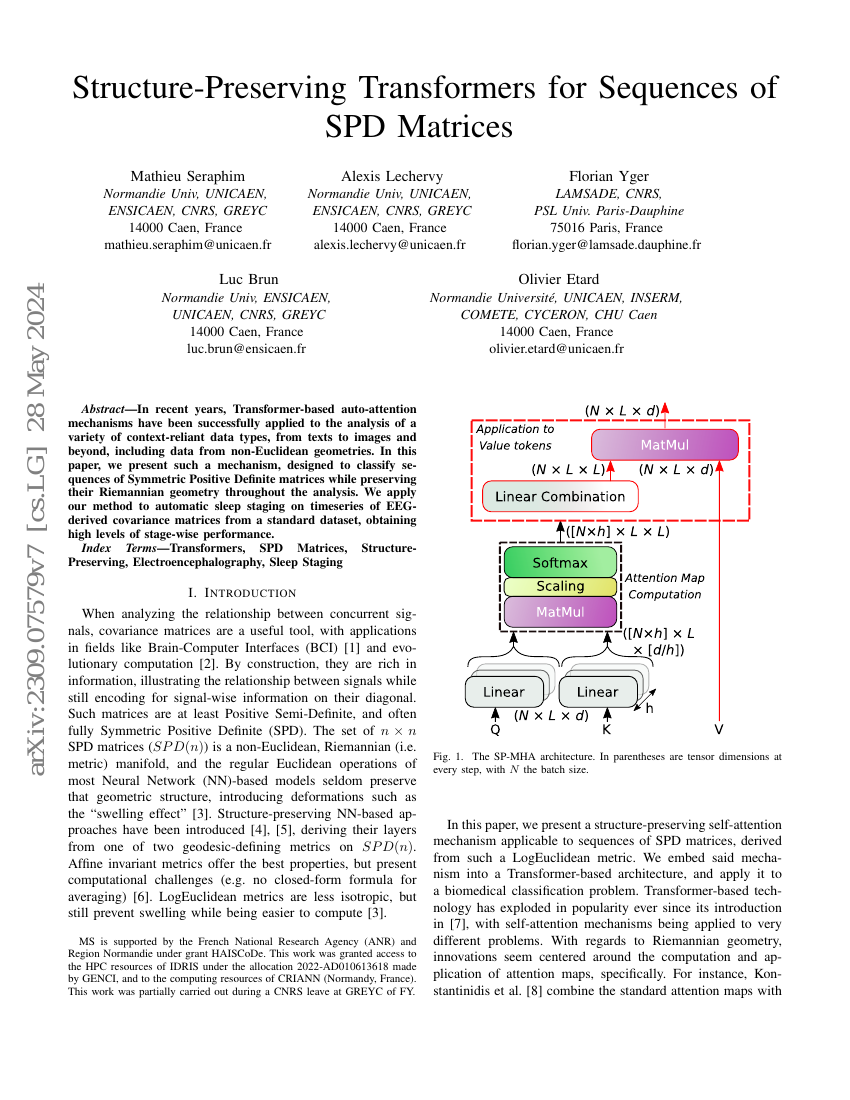

In recent years, Transformer-based self-attention mechanisms have been successfully applied to a variety of context-reliant data types, from text to images and beyond, including data from non-Euclidean geometries. In this paper, we present such a mechanism, designed to classify sequences of Symmetric Positive Definite (SPD) matrices while preserving their Riemannian geometry throughout the analysis. We apply our method to automatic sleep staging on time series of EEG-derived covariance matrices from a standard dataset, achieving high per-stage classification performance.
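The abstract does not detail how EEG signals become a sequence of SPD matrices. A common pipeline estimates one covariance matrix per time window and then represents each SPD matrix in a Euclidean tangent space before passing it to a Transformer as a token. The sketch below illustrates that idea under stated assumptions: it uses non-overlapping windows, a small diagonal regularizer `eps`, and the log-Euclidean mapping (matrix logarithm plus upper-triangle vectorization). This is a generic structure-preserving choice and is not necessarily the mapping used in SPDTransNet. All function names, the channel count, the sampling rate, and the window length are hypothetical.

```python
import numpy as np

def window_covariances(eeg, win_len, eps=1e-6):
    """Hypothetical helper: split a (channels, samples) EEG array into
    non-overlapping windows and return one covariance matrix per window."""
    n_ch, n_samples = eeg.shape
    n_win = n_samples // win_len
    covs = []
    for i in range(n_win):
        x = eeg[:, i * win_len:(i + 1) * win_len]
        x = x - x.mean(axis=1, keepdims=True)  # center each channel
        c = x @ x.T / (win_len - 1)            # sample covariance
        c += eps * np.eye(n_ch)                # assumed regularizer so c is strictly SPD
        covs.append(c)
    return np.stack(covs)                      # shape (n_win, n_ch, n_ch)

def spd_log(c):
    """Matrix logarithm of an SPD matrix via its eigendecomposition:
    V diag(log w) V^T."""
    w, v = np.linalg.eigh(c)
    return (v * np.log(w)) @ v.T

def tangent_tokens(covs):
    """Log-Euclidean tangent-space vectors: take the upper triangle of each
    log-matrix, scaling off-diagonal entries by sqrt(2) so that the vector's
    Euclidean norm equals the log-matrix's Frobenius norm."""
    n = covs.shape[-1]
    iu = np.triu_indices(n)
    scale = np.where(iu[0] == iu[1], 1.0, np.sqrt(2.0))
    return np.stack([spd_log(c)[iu] * scale for c in covs])

# Usage on synthetic data (hypothetical: 8 channels, 30 s at 256 Hz, 1 s windows).
rng = np.random.default_rng(0)
eeg = rng.standard_normal((8, 30 * 256))
covs = window_covariances(eeg, win_len=256)
tokens = tangent_tokens(covs)
print(covs.shape, tokens.shape)  # (30, 8, 8) (30, 36)
```

Each row of `tokens` is a Euclidean vector that an attention layer could consume. Flattening the raw covariance entries would ignore the SPD geometry, while the log map turns distances between matrices into ordinary Euclidean distances.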

Benchmarks

| Benchmark | Method | Macro-F1 | Macro-averaged accuracy |
|---|---|---|---|
| sleep-stage-detection-on-mass-ss3 | SPDTransNet | 0.8124 | 84.40% |