Haotian Liu Chunyuan Li Yuheng Li Yong Jae Lee

Abstract

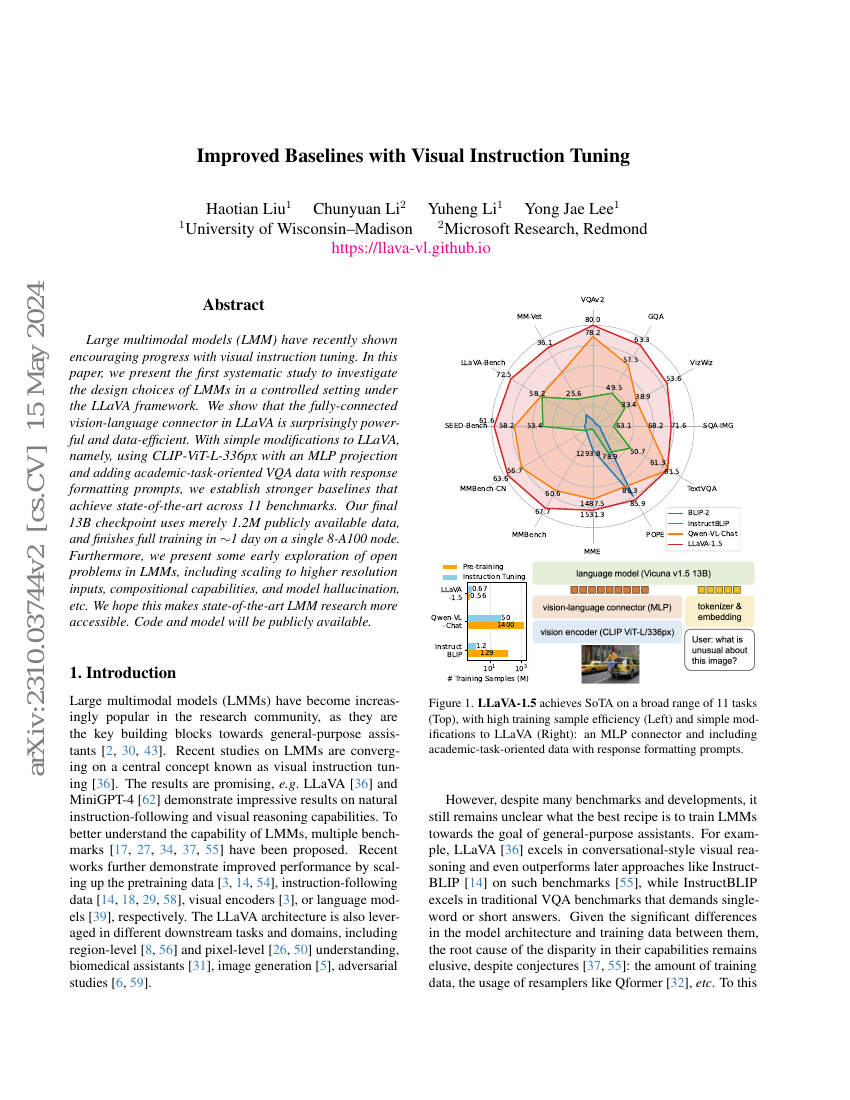

Large multimodal models (LMMs) have recently shown encouraging progress with visual instruction tuning. In this note, we show that the fully-connected vision-language cross-modal connector in LLaVA is surprisingly powerful and data-efficient. With simple modifications to LLaVA, namely using CLIP-ViT-L-336px with an MLP projection and adding academic-task-oriented VQA data with simple response-formatting prompts, we establish stronger baselines that achieve state-of-the-art results across 11 benchmarks. Our final 13B checkpoint uses only 1.2M publicly available data samples and finishes full training in about 1 day on a single 8-A100 node. We hope this makes state-of-the-art LMM research more accessible. Code and models will be made publicly available.
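To make the "MLP projection" connector concrete, here is a minimal NumPy sketch of how patch features from a CLIP vision encoder could be mapped into a language model's embedding space. It assumes a two-layer Linear, GELU, Linear projector (as in the public LLaVA-1.5 release; the abstract itself only says "MLP"), a 1024-dimensional CLIP ViT-L/14 feature size, and 14-pixel patches. The names `num_patch_tokens`, `init_projector`, and `project` are hypothetical, weights are random rather than trained, and GELU uses the common tanh approximation instead of the exact erf form.

```python
import numpy as np

# Assumed figures for CLIP ViT-L/14 at 336px; illustrative, not read from this page.
CLIP_VIT_L_HIDDEN = 1024
IMAGE_SIZE = 336
PATCH_SIZE = 14


def num_patch_tokens(image_size: int = IMAGE_SIZE, patch_size: int = PATCH_SIZE) -> int:
    """Number of patch tokens a ViT produces for a square image (CLS token excluded)."""
    side = image_size // patch_size  # 336 // 14 = 24 patches per side
    return side * side               # 24 * 24 = 576 visual tokens


def gelu(x):
    """Tanh approximation of GELU (the exact form uses the error function)."""
    return 0.5 * x * (1.0 + np.tanh(np.sqrt(2.0 / np.pi) * (x + 0.044715 * x**3)))


def init_projector(vision_dim: int, llm_dim: int, seed: int = 0) -> dict:
    """Randomly initialise a hypothetical Linear -> GELU -> Linear connector."""
    rng = np.random.default_rng(seed)
    return {
        "w1": rng.standard_normal((vision_dim, llm_dim)) / np.sqrt(vision_dim),
        "b1": np.zeros(llm_dim),
        "w2": rng.standard_normal((llm_dim, llm_dim)) / np.sqrt(llm_dim),
        "b2": np.zeros(llm_dim),
    }


def project(features: np.ndarray, params: dict) -> np.ndarray:
    """Map (num_tokens, vision_dim) patch features to (num_tokens, llm_dim) embeddings."""
    hidden = gelu(features @ params["w1"] + params["b1"])
    return hidden @ params["w2"] + params["b2"]
```

With real sizes, `init_projector(CLIP_VIT_L_HIDDEN, 5120)` would target a 13B LLaMA-2-based backbone (4096 for 7B), turning each 336px image into 576 embeddings that are fed to the language model alongside the text tokens. Compared with a single linear layer, the extra nonlinearity is the only structural change, which is why the connector stays cheap to train.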
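The "simple response formatting prompts" amount to appending a short instruction that tells the model which answer style a task expects, so that short-answer VQA data does not push the model toward terse replies on open-ended requests. The sketch below assumes the suffix wording reported in the LLaVA-1.5 paper for short-answer and multiple-choice questions; the function `format_prompt`, its task labels, and the "A. option" layout are hypothetical and should be checked against the released code before use.

```python
# Suffix strings assumed to match the wording reported for LLaVA-1.5.
SHORT_ANSWER_SUFFIX = "Answer the question using a single word or phrase."
MULTI_CHOICE_SUFFIX = "Answer with the option's letter from the given choices directly."
LETTERS = "ABCDEFGHIJKLMNOPQRSTUVWXYZ"


def format_prompt(question: str, task: str = "short", options=None) -> str:
    """Append a response-format instruction chosen by (hypothetical) task type."""
    if task == "short":
        # Short-answer VQA: ask for a single word or phrase.
        return f"{question}\n{SHORT_ANSWER_SUFFIX}"
    if task == "choice":
        # Multiple choice: list lettered options, then ask for the letter only.
        if not options:
            raise ValueError("multiple-choice prompts need at least one option")
        lines = [f"{LETTERS[i]}. {opt}" for i, opt in enumerate(options)]
        return "\n".join([question, *lines, MULTI_CHOICE_SUFFIX])
    if task == "open":
        # Open-ended conversation data keeps the question unchanged.
        return question
    raise ValueError(f"unknown task type: {task!r}")


print(format_prompt("What color is the bus?"))
```

Keeping open-ended prompts untouched while tagging only the short-answer data lets one model follow both styles, depending on what the prompt asks for.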


Benchmarks

| Benchmark | Model | Metrics |
|---|---|---|
| image-classification-on-coloninst-v1-seen | LLaVA-v1.5 (w/ LoRA, w/o extra data) | Accuracy: 92.97 |
| image-classification-on-coloninst-v1-seen | LLaVA-v1.5 (w/ LoRA, w/ extra data) | Accuracy: 93.33 |
| image-classification-on-coloninst-v1-unseen | LLaVA-v1.5 (w/ LoRA, w/o extra data) | Accuracy: 79.10 |
| image-classification-on-coloninst-v1-unseen | LLaVA-v1.5 (w/ LoRA, w/ extra data) | Accuracy: 80.89 |
| referring-expression-generation-on-coloninst | LLaVA-v1.5 (w/ LoRA, w/ extra data) | Accuracy: 99.32 |
| referring-expression-generation-on-coloninst | LLaVA-v1.5 (w/ LoRA, w/o extra data) | Accuracy: 98.58 |
| referring-expression-generation-on-coloninst-1 | LLaVA-v1.5 (w/ LoRA, w/o extra data) | Accuracy: 70.38 |
| referring-expression-generation-on-coloninst-1 | LLaVA-v1.5 (w/ LoRA, w/ extra data) | Accuracy: 72.88 |
| spatial-reasoning-on-6-dof-spatialbench | LLaVA-1.5 | Orientation-abs: 25.8; Orientation-rel: 28.3; Position-abs: 24.5; Position-rel: 30.9; Total: 27.2 |
| visual-instruction-following-on-llava-bench | LLaVA-v1.5-13B | Avg. score: 70.7 |
| visual-instruction-following-on-llava-bench | LLaVA-v1.5-7B | Avg. score: 63.4 |
| visual-question-answering-on-benchlmm | LLaVA-1.5-13B | GPT-3.5 score: 55.53 |
| visual-question-answering-on-mm-vet | LLaVA-1.5-7B | GPT-4 score: 31.1±0.2; Params: 7B |
| visual-question-answering-on-mm-vet | LLaVA-1.5-13B | GPT-4 score: 36.3±0.2; Params: 13B |
| visual-question-answering-on-mm-vet-v2 | LLaVA-v1.5-13B | GPT-4 score: 33.2±0.1; Params: 13B |
| visual-question-answering-on-mm-vet-v2 | LLaVA-v1.5-7B | GPT-4 score: 28.3±0.2; Params: 7B |
| visual-question-answering-on-vip-bench | LLaVA-1.5-13B (Visual Prompt) | GPT-4 score (bbox): 41.8; GPT-4 score (human): 42.9 |
| visual-question-answering-on-vip-bench | LLaVA-1.5-13B (Coordinates) | GPT-4 score (bbox): 47.1 |
| visual-question-answering-vqa-on-5 | LLaVA-1.5 | Overall accuracy: 44.5 |
| visual-question-answering-vqa-on-core-mm | LLaVA-1.5 | Abductive: 47.91; Analogical: 24.31; Deductive: 30.94; Overall score: 32.62; Params: 13B |
