General Object Foundation Model for Images and Videos at Scale

Junfeng Wu Yi Jiang Qihao Liu Zehuan Yuan Xiang Bai Song Bai

Abstract

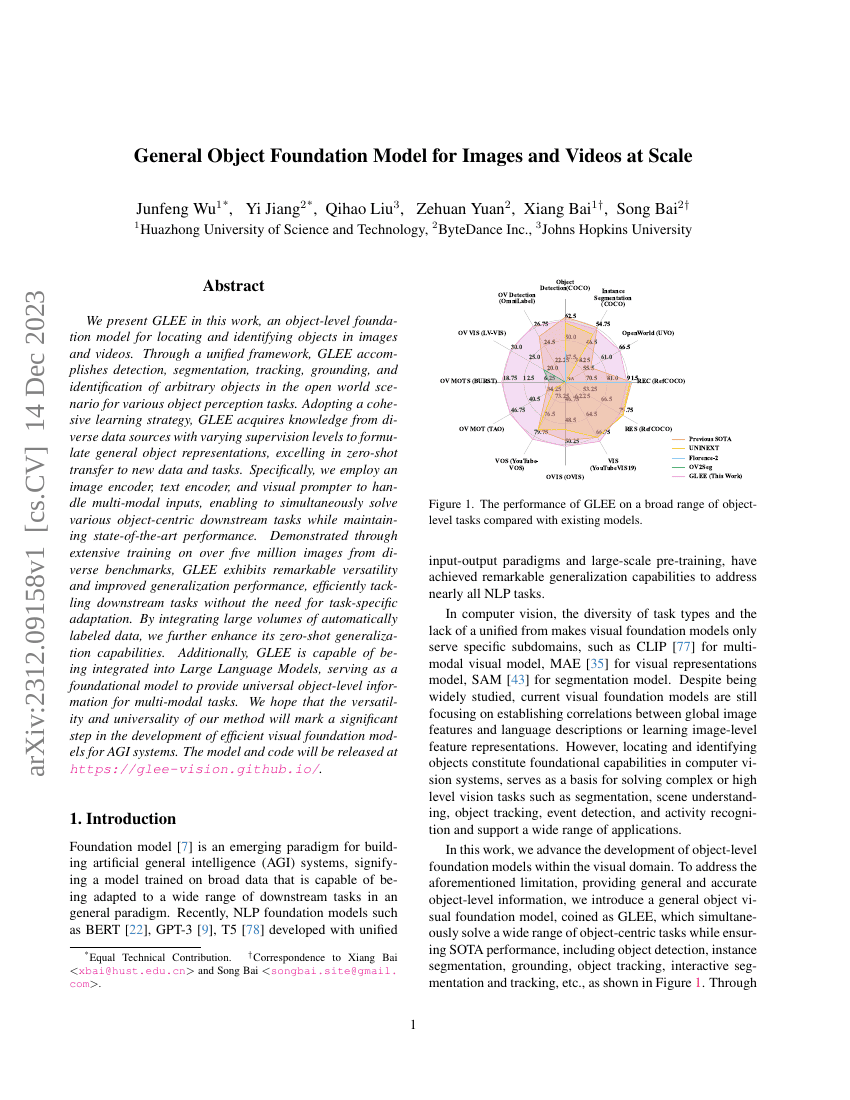

We present GLEE in this work, an object-level foundation model for locating and identifying objects in images and videos. Through a unified framework, GLEE accomplishes detection, segmentation, tracking, grounding, and identification of arbitrary objects in open-world scenarios for various object perception tasks. Adopting a cohesive learning strategy, GLEE acquires knowledge from diverse data sources with varying supervision levels to formulate general object representations, excelling in zero-shot transfer to new data and tasks. Specifically, we employ an image encoder, text encoder, and visual prompter to handle multi-modal inputs, enabling it to simultaneously solve various object-centric downstream tasks while maintaining state-of-the-art performance. Demonstrated through extensive training on over five million images from diverse benchmarks, GLEE exhibits remarkable versatility and improved generalization performance, efficiently tackling downstream tasks without the need for task-specific adaptation. By integrating large volumes of automatically labeled data, we further enhance its zero-shot generalization capabilities. Additionally, GLEE is capable of being integrated into Large Language Models, serving as a foundational model to provide universal object-level information for multi-modal tasks. We hope that the versatility and universality of our method will mark a significant step in the development of efficient visual foundation models for AGI systems. The model and code will be released at https://glee-vision.github.io.

Benchmarks

| Benchmark | Model | Metrics |
|---|---|---|
| instance-segmentation-on-coco | GLEE-Lite | mask AP: 48.3 |
| instance-segmentation-on-coco | GLEE-Plus | mask AP: 53.3 |
| instance-segmentation-on-coco | GLEE-Pro | mask AP: 54.5 |
| instance-segmentation-on-coco-minival | GLEE-Pro | mask AP: 54.2 |
| instance-segmentation-on-coco-minival | GLEE-Plus | mask AP: 53.0 |
| instance-segmentation-on-coco-minival | GLEE-Lite | mask AP: 48.4 |
| instance-segmentation-on-lvis-v1-0-val | GLEE-Pro | mask AP: 49.9 |
| long-tail-video-object-segmentation-on-burst | GLEE-Lite | HOTA (all): 22.6, HOTA (com): 36.4, HOTA (unc): 19.1, mAP (all): 12.6, mAP (com): 18.9, mAP (unc): 11.0 |
| long-tail-video-object-segmentation-on-burst-1 | GLEE-Lite | HOTA (all): 22.6, HOTA (com): 36.4, HOTA (unc): 19.1, mAP (all): 12.6, mAP (com): 18.9, mAP (unc): 11.0 |
| long-tail-video-object-segmentation-on-burst-1 | GLEE-Pro | HOTA (all): 31.2, HOTA (com): 48.7, HOTA (unc): 26.9, mAP (all): 19.2, mAP (com): 24.8, mAP (unc): 17.7 |
| long-tail-video-object-segmentation-on-burst-1 | GLEE-Plus | HOTA (all): 26.9, HOTA (com): 38.8, HOTA (unc): 23.9, mAP (all): 17.2, mAP (com): 23.7, mAP (unc): 15.5 |
| multi-object-tracking-on-tao | GLEE-Lite | AssocA: 39.9, ClsA: 24.1, LocA: 56.3, TETA: 40.1 |
| multi-object-tracking-on-tao | GLEE-Plus | AssocA: 40.9, ClsA: 30.8, LocA: 52.9, TETA: 41.5 |
| multi-object-tracking-on-tao | GLEE-Pro | AssocA: 46.2, ClsA: 29.1, LocA: 66.2, TETA: 47.2 |
| object-detection-on-coco | GLEE-Lite | box mAP: 54.7 |
| object-detection-on-coco | GLEE-Pro | box mAP: 62.3 |
| object-detection-on-coco | GLEE-Plus | box mAP: 60.6 |
| object-detection-on-coco-minival | GLEE-Pro | box AP: 62.0 |
| object-detection-on-coco-minival | GLEE-Lite | box AP: 55.0 |
| object-detection-on-coco-minival | GLEE-Plus | box AP: 60.4 |
| object-detection-on-lvis-v1-0-val | GLEE-Pro | box AP: 55.7 |
| open-world-instance-segmentation-on-uvo | GLEE-Pro | ARmask: 72.6 |
| referring-expression-segmentation-on-refcoco | GLEE-Pro | Overall IoU: 80.0 |
| referring-expression-segmentation-on-refcoco-3 | GLEE-Pro | Overall IoU: 69.6 |
| referring-expression-segmentation-on-refcoco-6 | GLEE-Pro | IoU: 80.0 |
| referring-expression-segmentation-on-refcocog | GLEE-Pro | Overall IoU: 72.9 |
| referring-expression-segmentation-on-refer-1 | GLEE-Pro | F: 72.9, J: 68.2, J&F: 70.6 |
| referring-video-object-segmentation-on-refer | GLEE-Plus | F: 69.7, J: 65.6, J&F: 67.7 |
| referring-video-object-segmentation-on-refer | GLEE-Pro | F: 72.9, J: 68.2, J&F: 70.6 |
| video-instance-segmentation-on-ovis-1 | GLEE-Pro | AP75: 55.5, mask AP: 50.4 |
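
Two of the metric groups in the table above are aggregates of their neighbors. The combined J and F score used in referring video object segmentation is the arithmetic mean of region similarity J and contour accuracy F, and TETA is the arithmetic mean of LocA, AssocA, and ClsA. The following is a minimal sketch that checks the reported rows against those definitions. The helper names and the tolerance are assumptions made for illustration, not part of the GLEE code base; the tolerance allows for the inputs having been rounded to one decimal place.

```python
# Values copied from the benchmark table; helper names are hypothetical.
REFER_ROWS = {
    "GLEE-Plus": {"J": 65.6, "F": 69.7, "J&F": 67.7},
    "GLEE-Pro": {"J": 68.2, "F": 72.9, "J&F": 70.6},
}
TAO_ROWS = {
    "GLEE-Lite": {"LocA": 56.3, "AssocA": 39.9, "ClsA": 24.1, "TETA": 40.1},
    "GLEE-Plus": {"LocA": 52.9, "AssocA": 40.9, "ClsA": 30.8, "TETA": 41.5},
    "GLEE-Pro": {"LocA": 66.2, "AssocA": 46.2, "ClsA": 29.1, "TETA": 47.2},
}

# Assumed slack: every input is rounded to 0.1, so the recomputed mean
# can differ from the reported aggregate by about 0.05.
TOLERANCE = 0.06


def mean(values):
    """Arithmetic mean of a non-empty sequence of numbers."""
    return sum(values) / len(values)


def is_consistent(parts, reported, tol=TOLERANCE):
    """True if the mean of `parts` matches `reported` within `tol`."""
    return abs(mean(parts) - reported) <= tol


def check_tables():
    """Return {(benchmark, model): bool} for each aggregate metric row."""
    results = {}
    for model, row in REFER_ROWS.items():
        results[("refer", model)] = is_consistent(
            [row["J"], row["F"]], row["J&F"]
        )
    for model, row in TAO_ROWS.items():
        results[("tao", model)] = is_consistent(
            [row["LocA"], row["AssocA"], row["ClsA"]], row["TETA"]
        )
    return results


if __name__ == "__main__":
    for key, ok in check_tables().items():
        print(key, "consistent" if ok else "MISMATCH")
```

A check like this is useful when transcribing leaderboard rows by hand, since a swapped or mistyped component shows up as a mismatch against its aggregate.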
