An Open and Comprehensive Pipeline for Unified Object Grounding and

Detection

An Open and Comprehensive Pipeline for Unified Object Grounding and Detection

Xiangyu Zhao Yicheng Chen Shilin Xu Xiangtai Li Xinjiang Wang Yining Li Haian Huang

Abstract

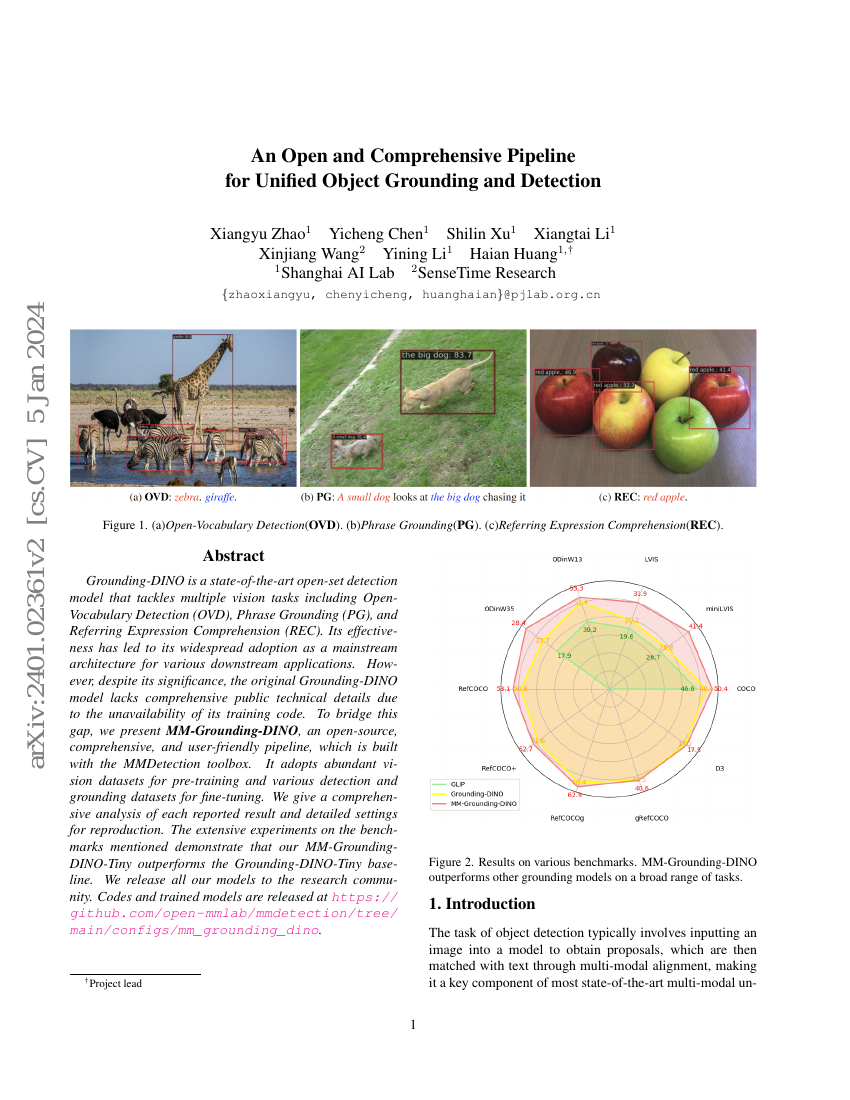

Grounding-DINO is a state-of-the-art open-set detection model that tacklesmultiple vision tasks including Open-Vocabulary Detection (OVD), PhraseGrounding (PG), and Referring Expression Comprehension (REC). Its effectivenesshas led to its widespread adoption as a mainstream architecture for variousdownstream applications. However, despite its significance, the originalGrounding-DINO model lacks comprehensive public technical details due to theunavailability of its training code. To bridge this gap, we presentMM-Grounding-DINO, an open-source, comprehensive, and user-friendly baseline,which is built with the MMDetection toolbox. It adopts abundant vision datasetsfor pre-training and various detection and grounding datasets for fine-tuning.We give a comprehensive analysis of each reported result and detailed settingsfor reproduction. The extensive experiments on the benchmarks mentioneddemonstrate that our MM-Grounding-DINO-Tiny outperforms the Grounding-DINO-Tinybaseline. We release all our models to the research community. Codes andtrained models are released athttps://github.com/open-mmlab/mmdetection/tree/main/configs/mm_grounding_dino.

Code Repositories

Benchmarks

| Benchmark | Methodology | Metrics |

|---|---|---|

| described-object-detection-on-description | MM-Grounding-DINO | Intra-scenario ABS mAP: 26.0 Intra-scenario FULL mAP: 22.9 Intra-scenario PRES mAP: 21.9 |

Build AI with AI

From idea to launch — accelerate your AI development with free AI co-coding, out-of-the-box environment and best price of GPUs.