Pruning the Unsurprising: Efficient Code Reasoning via First-Token

Surprisal

Pruning the Unsurprising: Efficient Code Reasoning via First-Token Surprisal

Wenhao Zeng Yaoning Wang Chao Hu Yuling Shi Chengcheng Wan Hongyu Zhang Xiaodong Gu

Abstract

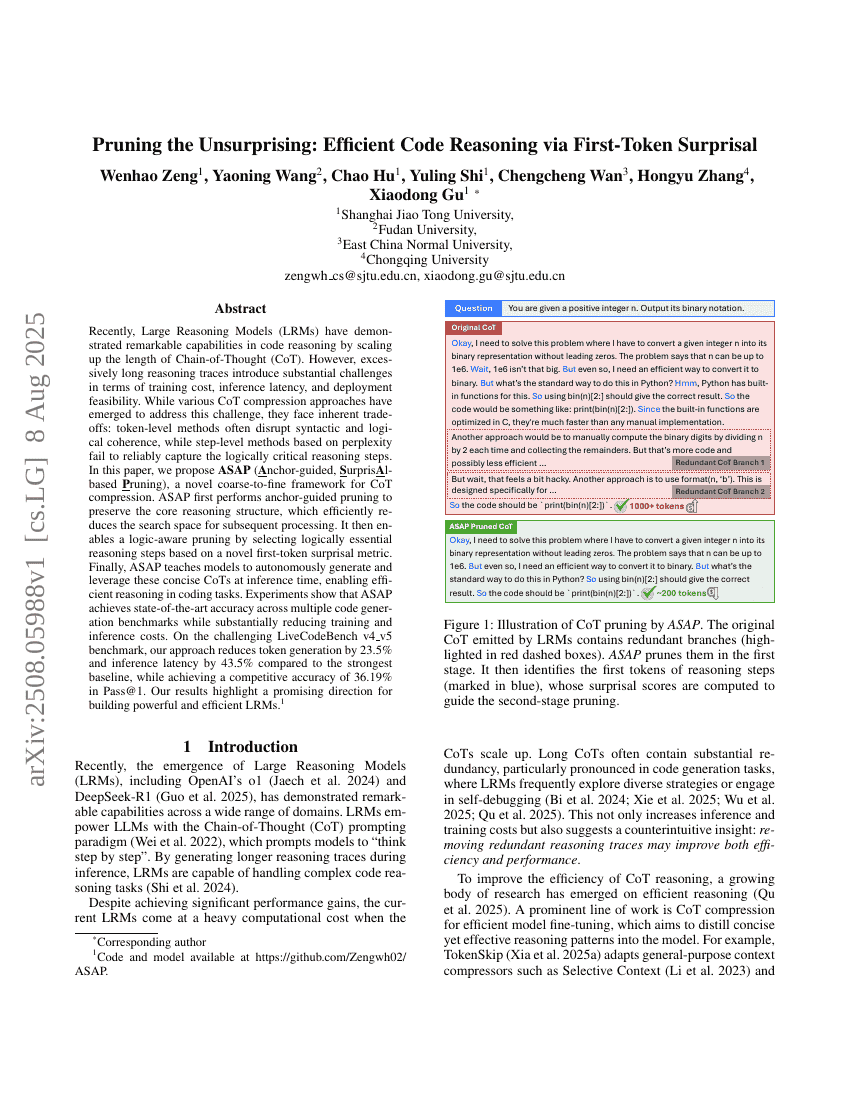

Recently, Large Reasoning Models (LRMs) have demonstrated remarkablecapabilities in code reasoning by scaling up the length of Chain-of-Thought(CoT). However, excessively long reasoning traces introduce substantialchallenges in terms of training cost, inference latency, and deploymentfeasibility. While various CoT compression approaches have emerged to addressthis challenge, they face inherent trade-offs: token-level methods oftendisrupt syntactic and logical coherence, while step-level methods based onperplexity fail to reliably capture the logically critical reasoning steps. Inthis paper, we propose ASAP (Anchor-guided, Surprisal-based Pruning), a novelcoarse-to-fine framework for CoT compression. ASAP first performs anchor-guidedpruning to preserve the core reasoning structure, which efficiently reduces thesearch space for subsequent processing. It then enables a logic-aware pruningby selecting logically essential reasoning steps based on a novel first-tokensurprisal metric. Finally, ASAP teaches models to autonomously generate andleverage these concise CoTs at inference time, enabling efficient reasoning incoding tasks. Experiments show that ASAP achieves state-of-the-art accuracyacross multiple code generation benchmarks while substantially reducingtraining and inference costs. On the challenging LiveCodeBench v4_v5 benchmark,our approach reduces token generation by 23.5% and inference latency by 43.5%compared to the strongest baseline, while achieving a competitive accuracy of36.19% in Pass@1. Our results highlight a promising direction for buildingpowerful and efficient LRMs.

Code Repositories

Build AI with AI

From idea to launch — accelerate your AI development with free AI co-coding, out-of-the-box environment and best price of GPUs.