Context-Dependent Sentiment Analysis in User-Generated Videos

Louis-Philippe Morency, Amir Zadeh, Soujanya Poria, Navonil Majumder, Erik Cambria, Devamanyu Hazarika

Abstract

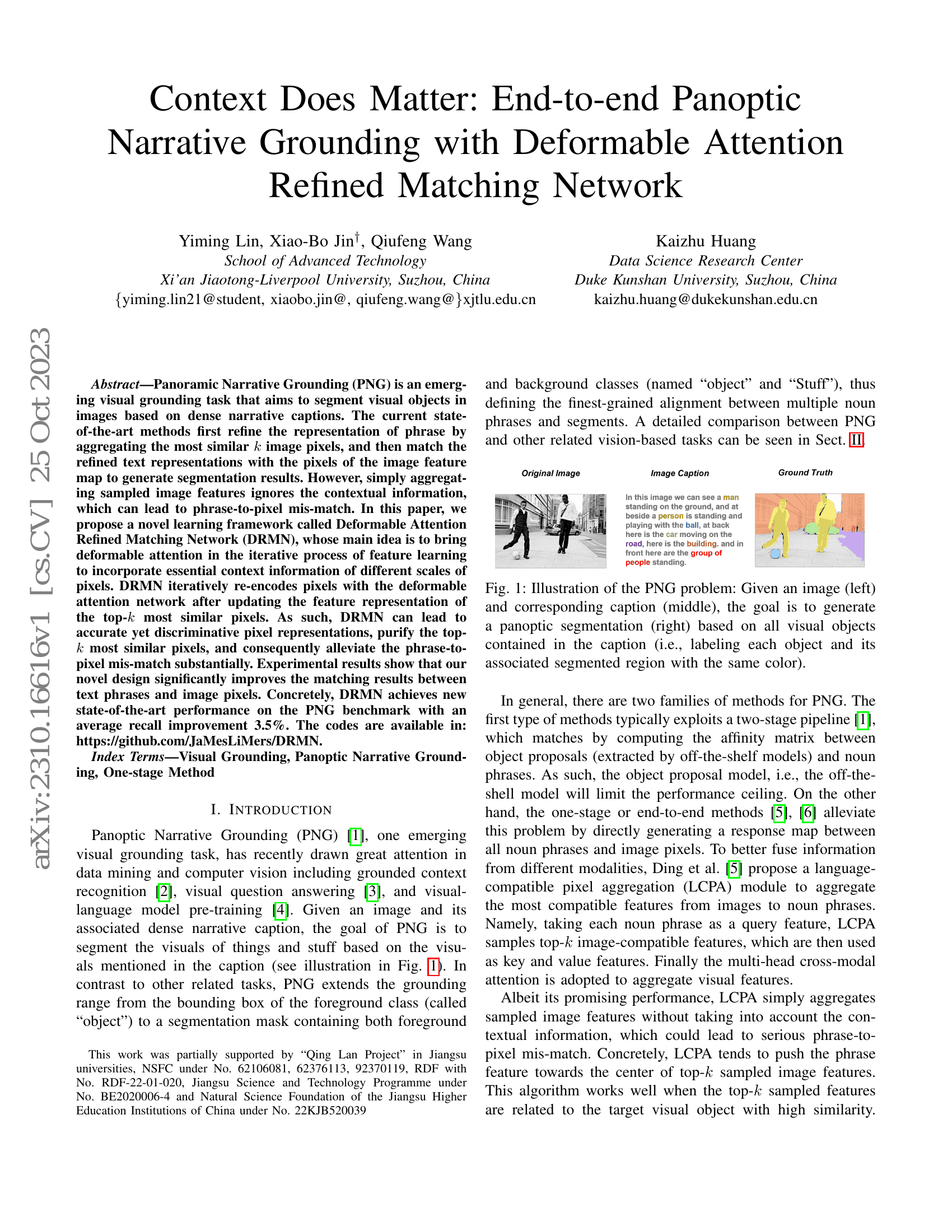

Multimodal sentiment analysis is a developing area of research that involves identifying sentiments in videos. Current research treats utterances as independent entities, ignoring the interdependencies and relations among the utterances of a video. In this paper, we propose an LSTM-based model that enables utterances to capture contextual information from their surroundings in the same video, thus aiding the classification process. Our method shows a 5-10% performance improvement over the state of the art and high robustness in generalization.
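The core idea, classifying each utterance using the other utterances of the same video as context, can be sketched as a recurrent pass over the utterance sequence. The sketch below is a minimal NumPy illustration, not the authors' implementation. It assumes, as the bc-LSTM label in the benchmark table suggests, a bidirectional LSTM over precomputed utterance-level feature vectors followed by a per-utterance softmax classifier. The function names, parameter layout, feature size, hidden size, and random untrained weights are all hypothetical.

```python
import numpy as np


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


def softmax(z):
    # Numerically stable softmax over the last axis
    z = z - z.max(axis=-1, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=-1, keepdims=True)


def init_lstm(rng, in_dim, hid_dim, scale=0.2):
    # One stacked matrix for the input, forget, candidate and output gates,
    # applied to the concatenation [x_t, h_{t-1}] (hypothetical random init)
    W = rng.normal(0.0, scale, size=(4 * hid_dim, in_dim + hid_dim))
    b = np.zeros(4 * hid_dim)
    return W, b


def run_lstm(x_seq, W, b):
    """Run a unidirectional LSTM over x_seq of shape (T, in_dim); return (T, hid_dim)."""
    hid_dim = W.shape[0] // 4
    h = np.zeros(hid_dim)
    c = np.zeros(hid_dim)
    states = []
    for x in x_seq:
        z = W @ np.concatenate([x, h]) + b
        i, f, g, o = np.split(z, 4)
        c = sigmoid(f) * c + sigmoid(i) * np.tanh(g)
        h = sigmoid(o) * np.tanh(c)
        states.append(h)
    return np.stack(states)


def init_bc_lstm(rng, feat_dim, hid_dim, n_classes):
    # Hypothetical layout: forward LSTM, backward LSTM, linear classifier
    return {
        "fwd": init_lstm(rng, feat_dim, hid_dim),
        "bwd": init_lstm(rng, feat_dim, hid_dim),
        "W_out": rng.normal(0.0, 0.2, size=(n_classes, 2 * hid_dim)),
        "b_out": np.zeros(n_classes),
    }


def bc_lstm_forward(utterances, params):
    """utterances: (T, feat_dim) features of one video's utterances, in temporal order.

    Returns (T, n_classes) class probabilities, one row per utterance.
    """
    h_fwd = run_lstm(utterances, *params["fwd"])              # context from earlier utterances
    h_bwd = run_lstm(utterances[::-1], *params["bwd"])[::-1]  # context from later utterances
    h = np.concatenate([h_fwd, h_bwd], axis=1)                # (T, 2 * hid_dim)
    return softmax(h @ params["W_out"].T + params["b_out"])


rng = np.random.default_rng(0)
params = init_bc_lstm(rng, feat_dim=8, hid_dim=16, n_classes=2)
video = rng.normal(size=(5, 8))  # 5 utterances, 8-dim random stand-in features
probs = bc_lstm_forward(video, params)
print(probs.shape)  # (5, 2)
```

Because the backward LSTM reads the video from its last utterance to its first, changing the features of utterance 4 also changes the prediction for utterance 0. A model that classified each utterance independently would not show this dependence. Training, dropout, and the multimodal feature extraction are deliberately left out of this sketch.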

Benchmarks

| Benchmark | Methodology | Metrics |
|---|---|---|
| emotion-recognition-in-conversation-on | bc-LSTM+Att | Accuracy: 59.09; Macro-F1: 56.52; Weighted-F1: 58.54 |
| emotion-recognition-in-conversation-on-cped | bcLSTM | Accuracy of Sentiment: 49.65; Macro-F1 of Sentiment: 45.40 |
| emotion-recognition-in-conversation-on-meld | bc-LSTM+Att | Accuracy: 57.50; Weighted-F1: 56.44 |
| multimodal-sentiment-analysis-on-mosi | bc-LSTM | Accuracy: 80.3% |
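The table mixes Accuracy, Macro-F1, and Weighted-F1. Under the standard definitions, Macro-F1 is the unweighted mean of the per-class F1 scores. Weighted-F1 instead weights each class's F1 by its support, which is the number of true examples of that class. As a result, a model that neglects a rare class is penalized more heavily by Macro-F1. The sketch below is a plain-Python illustration of these definitions on made-up labels. It takes the class set from the ground-truth labels only. scikit-learn's `f1_score` also counts classes that appear only in predictions, and the leaderboards' own evaluation scripts may differ.

```python
from collections import Counter


def per_class_f1(y_true, y_pred, label):
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == label and p == label)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t != label and p == label)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == label and p != label)
    if tp == 0:
        return 0.0  # also avoids division by zero when the class is never predicted
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    return 2 * precision * recall / (precision + recall)


def scores(y_true, y_pred):
    labels = sorted(set(y_true))  # classes taken from the ground truth only
    support = Counter(y_true)
    f1 = {label: per_class_f1(y_true, y_pred, label) for label in labels}
    accuracy = sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)
    macro = sum(f1.values()) / len(labels)  # every class counts equally
    weighted = sum(f1[l] * support[l] for l in labels) / len(y_true)  # weighted by support
    return accuracy, macro, weighted


y_true = ["neu", "neu", "neu", "neu", "pos", "neg"]
y_pred = ["neu", "neu", "neu", "pos", "pos", "neu"]
acc, macro, weighted = scores(y_true, y_pred)
print(f"{acc:.3f} {macro:.3f} {weighted:.3f}")  # 0.667 0.472 0.611
```

Here the rare `neg` class is never predicted correctly. Its F1 of 0 pulls Macro-F1 well below Weighted-F1.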