MultiPL-MoE Architecture

MultiPL-MoE was proposed by the China Mobile Research Institute in August 2025. The results were published in the paper "MultiPL-MoE: Multi-Programming-Lingual Extension of Large Language Models through Hybrid Mixture-of-Experts", which has been accepted by EMNLP 2025.

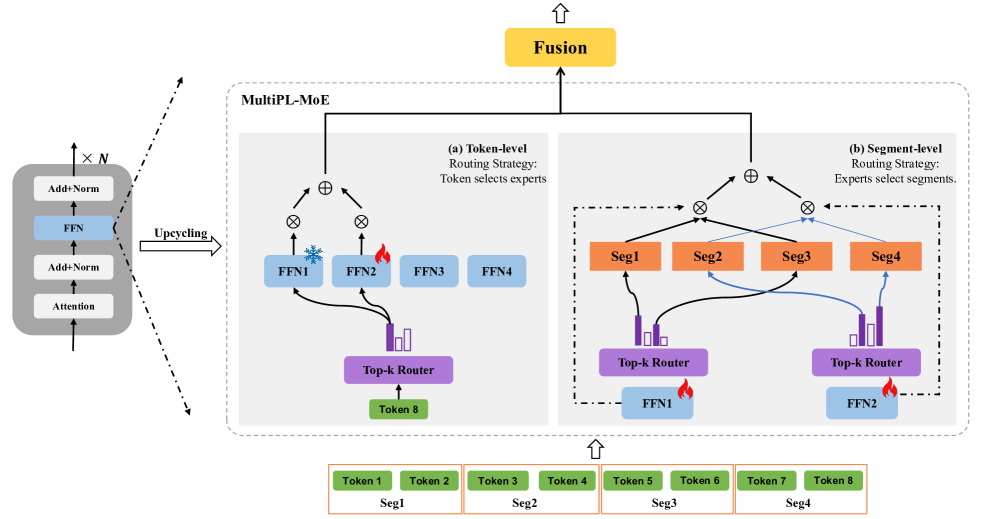

MultiPL-MoE is a multi-programming-language (MultiPL) extension of LLMs implemented through a hybrid mixture of experts (MoE). Previous MoE methods for multi-natural-language extension focus on intra-language consistency by predicting subsequent tokens, but they neglect the internal syntactic structure of programming languages. MultiPL-MoE instead employs a novel hybrid MoE architecture that learns token-level semantic features and segment-level syntactic features at a fine granularity, enabling the model to infer code structure by recognizing and classifying different syntactic elements.
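To make the two routing granularities concrete, here is a minimal toy sketch in plain Python. It is not the paper's implementation: the top-1 routing rule, the mean pooling, the fixed segment length `seg_len`, the gating vectors, and the function names `token_level_route` and `segment_level_route` are all assumptions chosen for illustration. It only shows the difference between choosing an expert per token and choosing an expert per contiguous segment.

```python
import math

def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def token_level_route(tokens, gate):
    """Assumed top-1 routing: pick one expert index for every token."""
    choices = []
    for tok in tokens:
        probs = softmax([dot(tok, g) for g in gate])
        choices.append(max(range(len(probs)), key=probs.__getitem__))
    return choices

def segment_level_route(tokens, gate, seg_len):
    """Assumed segment routing: cut the sequence into fixed-length
    segments, mean-pool each one, and pick one expert per segment."""
    choices = []
    for start in range(0, len(tokens), seg_len):
        seg = tokens[start:start + seg_len]
        pooled = [sum(col) / len(seg) for col in zip(*seg)]
        probs = softmax([dot(pooled, g) for g in gate])
        choices.append(max(range(len(probs)), key=probs.__getitem__))
    return choices

# Toy input (hypothetical): 4 tokens with 2-dimensional embeddings.
tokens = [[1.0, 0.0], [0.9, 0.1], [0.0, 1.0], [0.1, 0.9]]
# One gating vector per expert (hypothetical values, 2 experts).
gate = [[1.0, 0.0], [0.0, 1.0]]

token_choices = token_level_route(tokens, gate)
segment_choices = segment_level_route(tokens, gate, seg_len=2)
print(token_choices)    # [0, 0, 1, 1]
print(segment_choices)  # [0, 1]
```

In this toy setup the token-level branch makes four routing decisions while the segment-level branch makes two, one per pair of adjacent tokens. A real hybrid model would also have to combine the outputs of the two branches, which this sketch leaves out.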
