
Abstract

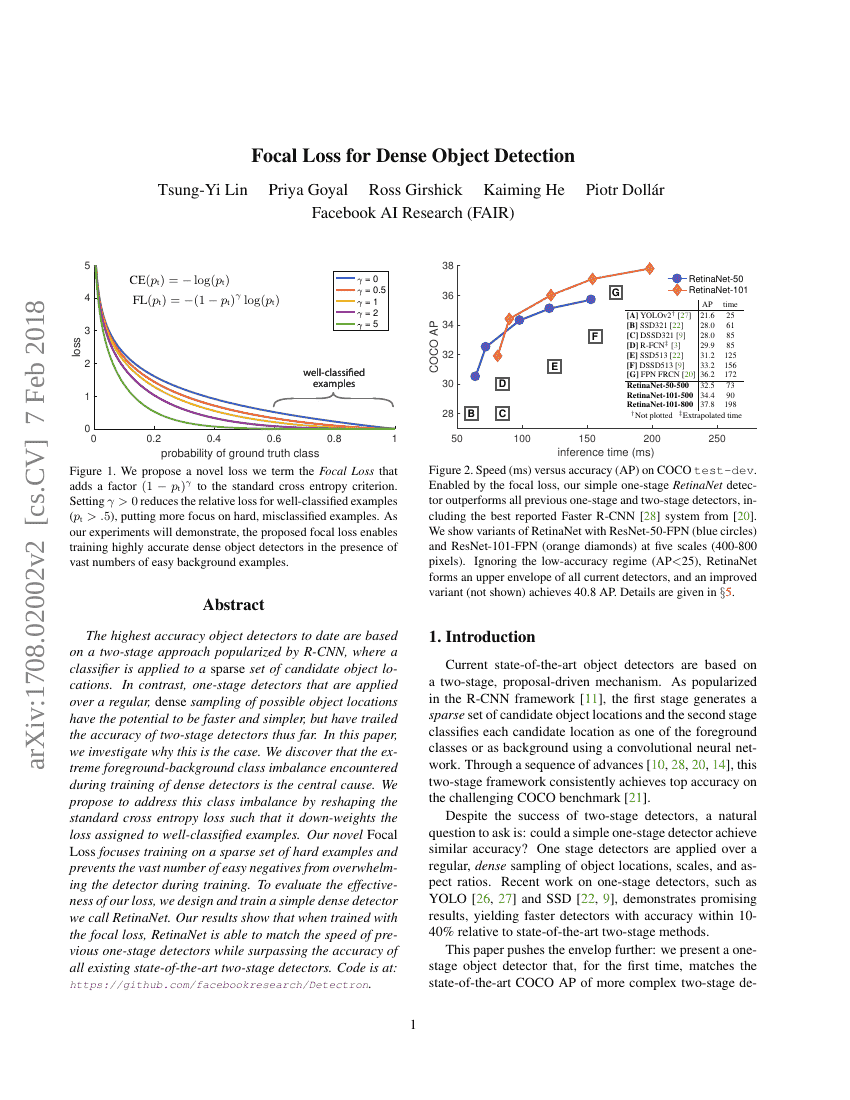

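The highest-accuracy object detectors to date are based on a two-stage approach popularized by R-CNN, in which a classifier is applied to a sparse set of candidate object locations. In contrast, one-stage detectors, which are applied over a regular, dense sampling of possible object locations, have the potential to be faster and simpler, but have so far trailed the accuracy of two-stage detectors. In this paper, we investigate why this is the case. We discover that the extreme foreground-background class imbalance encountered while training dense detectors is the central cause. We propose to address this class imbalance by reshaping the standard cross-entropy loss so that it down-weights the loss assigned to well-classified examples. Our novel Focal Loss focuses training on a sparse set of hard examples and prevents the vast number of easy negatives from overwhelming the detector during training. To evaluate the effectiveness of our loss, we design and train a simple dense detector we call RetinaNet. Our results show that when trained with the focal loss, RetinaNet matches the speed of previous one-stage detectors while surpassing the accuracy of all existing state-of-the-art two-stage detectors. Code is at: https://github.com/facebookresearch/Detectron.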
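The abstract describes the loss only in words. As a minimal sketch, assuming the binary (sigmoid) form with the α-balancing term and the γ = 2, α = 0.25 setting reported in the full paper, the focal loss is `FL(p_t) = -α_t (1 - p_t)^γ log(p_t)`, where `p_t` is the probability the model assigns to the ground-truth class; plain cross-entropy is `-log(p_t)`. The plain-Python code below illustrates that formula and is not the Detectron implementation; the helper names (`cross_entropy`, `focal_loss`, `total`) and the anchor counts in the demo are hypothetical.

```python
import math


def _clamp(p_t: float, eps: float = 1e-12) -> float:
    # Keep p_t inside (0, 1] so math.log never receives 0.
    return min(max(p_t, eps), 1.0)


def cross_entropy(p: float, y: int) -> float:
    """Standard binary cross-entropy, written as -log(p_t)."""
    p_t = p if y == 1 else 1.0 - p
    return -math.log(_clamp(p_t))


def focal_loss(p: float, y: int, gamma: float = 2.0, alpha: float = 0.25) -> float:
    """Alpha-balanced binary focal loss: -alpha_t * (1 - p_t)**gamma * log(p_t).

    p: predicted foreground probability (e.g. a sigmoid output) for one anchor.
    y: 1 for a foreground anchor, 0 for a background anchor.
    """
    # p_t is the probability assigned to the ground-truth class.
    p_t = _clamp(p if y == 1 else 1.0 - p)
    # alpha_t weights positives by alpha and negatives by 1 - alpha.
    alpha_t = alpha if y == 1 else 1.0 - alpha
    # The modulating factor (1 - p_t)**gamma shrinks toward 0 as p_t -> 1,
    # down-weighting well-classified (easy) examples.
    return -alpha_t * (1.0 - p_t) ** gamma * math.log(p_t)


def total(loss_fn, examples):
    """Sum a per-example loss over (probability, label) pairs."""
    return sum(loss_fn(p, y) for p, y in examples)


if __name__ == "__main__":
    # Hypothetical anchor set: many easy negatives, a few hard positives.
    easy_negatives = [(0.01, 0)] * 100_000  # background the model already rejects
    hard_positives = [(0.10, 1)] * 10       # foreground the model still misses

    for name, fn in [("cross-entropy", cross_entropy), ("focal loss", focal_loss)]:
        easy = total(fn, easy_negatives)
        hard = total(fn, hard_positives)
        share = easy / (easy + hard)
        print(f"{name}: easy negatives contribute {share:.1%} of the total loss")
```

In this toy setup, the 100,000 easy negatives account for roughly 98% of the summed cross-entropy, but for under 2% of the summed focal loss. The few hard positives therefore dominate the training signal, which is the effect the abstract describes. As a sanity check, setting `gamma=0.0` and `alpha=1.0` reduces `focal_loss` to plain cross-entropy on positive examples.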

Code Repositories

Benchmarks

| Benchmark | Method | Metrics |
|---|---|---|
| 2d-object-detection-on-sardet-100k | RetinaNet | box mAP: 47.4 |
| dense-object-detection-on-sku-110k | RetinaNet | AP: 45.5; AP75: 0.389 |
| face-identification-on-trillion-pairs-dataset | F-Softmax | Accuracy: 39.80 |
| face-verification-on-trillion-pairs-dataset | F-Softmax | Accuracy: 37.14 |
| long-tail-learning-on-coco-mlt | Focal Loss (ResNet-50) | Average mAP: 49.46 |
| long-tail-learning-on-egtea | Focal Loss (3D-ResNeXt101) | Average Precision: 59.09; Average Recall: 59.17 |
| long-tail-learning-on-voc-mlt | Focal Loss (ResNet-50) | Average mAP: 73.88 |
| object-counting-on-carpk | RetinaNet (2018) | MAE: 24.58 |
| object-detection-on-coco | RetinaNet (ResNet-101-FPN) | AP50: 59.1; AP75: 42.3; APL: 50.2; APM: 42.7; APS: 21.8; Hardware Burden: 4G; Operations per network pass: n/a; box mAP: 39.1 |
| object-detection-on-coco | RetinaNet (ResNeXt-101-FPN) | AP50: 61.1; AP75: 44.1; APL: 51.2; APM: 44.2; APS: 24.1; Hardware Burden: 4G; Operations per network pass: n/a; box mAP: 40.8 |
| object-detection-on-coco-o | RetinaNet (ResNet-50) | Average mAP: 16.6; Effective Robustness: 0.18 |
| pedestrian-detection-on-tju-ped-campus | RetinaNet | ALL (miss rate): 44.34; HO (miss rate): 71.31; R (miss rate): 34.73; R+HO (miss rate): 42.26; RS (miss rate): 82.99 |
| pedestrian-detection-on-tju-ped-traffic | RetinaNet | ALL (miss rate): 41.40; HO (miss rate): 61.60; R (miss rate): 23.89; R+HO (miss rate): 28.45; RS (miss rate): 37.92 |