
Victor SANH, Lysandre DEBUT, Julien CHAUMOND, Thomas WOLF

Abstract

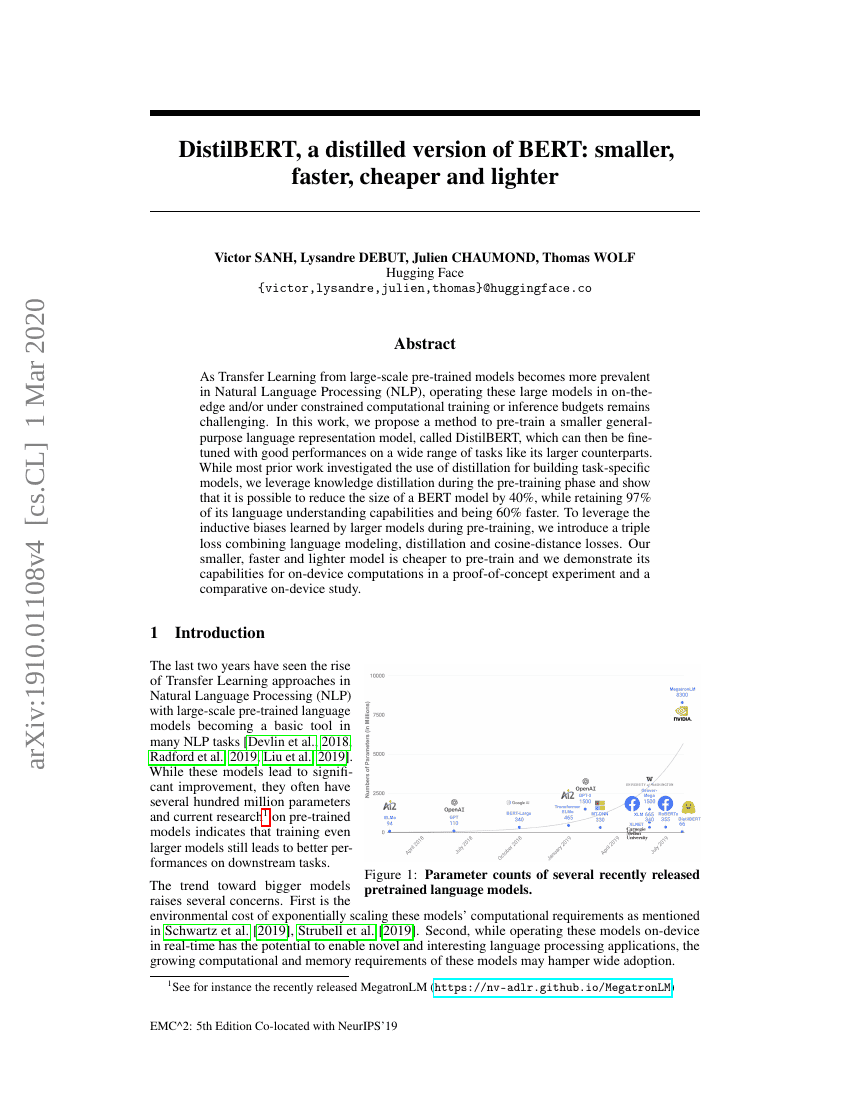

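As large-scale pre-trained models become increasingly widespread in natural language processing (NLP), running these large models on edge devices, or training and running inference with them under constrained computational budgets, remains challenging. In this work, we propose a method to pre-train a smaller general-purpose language representation model, called DistilBERT, which can then be fine-tuned to perform well on a wide range of tasks, comparably to its larger counterparts. While most prior work used distillation to build task-specific models, we apply knowledge distillation during the pre-training phase and show that it is possible to reduce the size of a BERT model by 40% while retaining 97% of its language understanding capabilities and being 60% faster. To leverage the inductive biases learned by larger models during pre-training, we introduce a triple loss combining language modeling, distillation, and cosine-distance losses. Our smaller, faster, and lighter model is cheaper to pre-train, and we demonstrate its capabilities for on-device computation in a proof-of-concept experiment and a comparative on-device study.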
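The triple loss named in the abstract can be sketched for a single masked token position as below. This is a minimal pure-Python illustration under stated assumptions, not the authors' training code: the function names, the equal `alpha_*` weights, and the temperature of 2.0 are hypothetical choices, and we assume the cosine term compares student and teacher hidden-state vectors. The distillation term is the cross-entropy between the teacher's and the student's temperature-softened output distributions; the language-modeling term is the usual masked-LM cross-entropy against the gold token.

```python
import math
from typing import List, Sequence


def softmax(logits: Sequence[float], temperature: float = 1.0) -> List[float]:
    """Temperature-scaled softmax, shifted by the max for numerical stability."""
    scaled = [x / temperature for x in logits]
    m = max(scaled)
    exps = [math.exp(x - m) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(student_logits: Sequence[float],
                      teacher_logits: Sequence[float],
                      temperature: float = 2.0) -> float:
    """Cross-entropy of the student's softened distribution against the
    teacher's soft targets: -sum_i t_i * log(s_i)."""
    t = softmax(teacher_logits, temperature)
    s = softmax(student_logits, temperature)
    return -sum(ti * math.log(si) for ti, si in zip(t, s))


def mlm_loss(student_logits: Sequence[float], gold_index: int) -> float:
    """Masked-language-modeling cross-entropy on the gold token (temperature 1)."""
    p = softmax(student_logits)
    return -math.log(p[gold_index])


def cosine_loss(student_hidden: Sequence[float],
                teacher_hidden: Sequence[float]) -> float:
    """1 - cos(h_s, h_t): zero when the two vectors point the same way.
    Assumption: applied to student/teacher hidden-state vectors."""
    dot = sum(a * b for a, b in zip(student_hidden, teacher_hidden))
    ns = math.sqrt(sum(a * a for a in student_hidden))
    nt = math.sqrt(sum(b * b for b in teacher_hidden))
    if ns == 0 or nt == 0:
        raise ValueError("cosine distance is undefined for zero vectors")
    return 1.0 - dot / (ns * nt)


def triple_loss(student_logits: Sequence[float],
                teacher_logits: Sequence[float],
                gold_index: int,
                student_hidden: Sequence[float],
                teacher_hidden: Sequence[float],
                alpha_ce: float = 1.0,   # hypothetical weight
                alpha_mlm: float = 1.0,  # hypothetical weight
                alpha_cos: float = 1.0,  # hypothetical weight
                temperature: float = 2.0) -> float:
    """Weighted sum of distillation, MLM, and cosine terms for ONE masked position."""
    return (alpha_ce * distillation_loss(student_logits, teacher_logits, temperature)
            + alpha_mlm * mlm_loss(student_logits, gold_index)
            + alpha_cos * cosine_loss(student_hidden, teacher_hidden))
```

In a real setup these per-position losses would be averaged over all masked positions in a batch and computed with a tensor library. Using KL divergence instead of cross-entropy for the distillation term changes the loss only by the teacher's entropy, which is constant with respect to the student, so the gradients are identical.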


Benchmarks

| Benchmark | Method | Metrics |
|---|---|---|
| linguistic-acceptability-on-cola | DistilBERT 66M | Accuracy: 49.1% |
| natural-language-inference-on-qnli | DistilBERT 66M | Accuracy: 90.2% |
| natural-language-inference-on-rte | DistilBERT 66M | Accuracy: 62.9% |
| natural-language-inference-on-wnli | DistilBERT 66M | Accuracy: 44.4% |
| question-answering-on-multitq | DistilBERT | Hits@1: 8.3; Hits@10: 48.4 |
| question-answering-on-quora-question-pairs | DistilBERT 66M | Accuracy: 89.2% |
| question-answering-on-squad11-dev | DistilBERT 66M | F1: 85.8 |
| question-answering-on-squad11-dev | DistilBERT | EM: 77.7 |
| semantic-textual-similarity-on-mrpc | DistilBERT 66M | Accuracy: 90.2% |
| semantic-textual-similarity-on-sts-benchmark | DistilBERT 66M | Pearson Correlation: 0.907 |
| sentiment-analysis-on-imdb | DistilBERT 66M | Accuracy: 92.82% |
| sentiment-analysis-on-sst-2-binary | DistilBERT 66M | Accuracy: 91.3% |
| task-1-grouping-on-ocw | DistilBERT (BASE) | # Correct Groups: 49 ± 4; # Solved Walls: 0 ± 0; Adjusted Mutual Information (AMI): 14.0 ± 0.3; Adjusted Rand Index (ARI): 11.3 ± 0.3; Fowlkes Mallows Score (FMS): 29.1 ± 0.2; Wasserstein Distance (WD): 86.7 ± 0.6 |
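As a quick consistency check, the 66M parameter count attached to DistilBERT in the table above can be set against BERT-base's roughly 110M parameters (a figure from the original BERT paper, not stated on this page) to recover the 40% size reduction stated in the abstract:

$$
1 - \frac{66\,\text{M}}{110\,\text{M}} = 1 - 0.6 = 0.4 = 40\%
$$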