Command Palette

Search for a command to run...

Ze Liu Yutong Lin Yue Cao Han Hu Yixuan Wei Zheng Zhang Stephen Lin Baining Guo

摘要

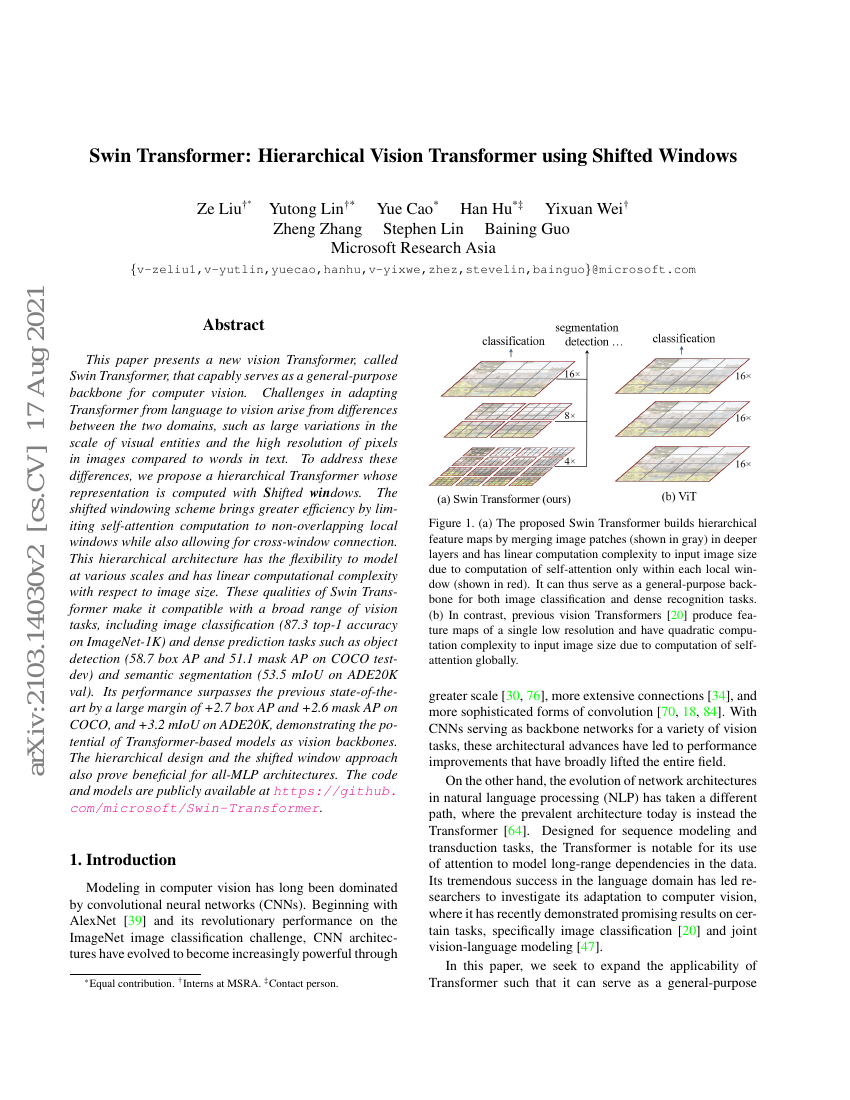

本文提出了一种新型视觉Transformer——Swin Transformer,能够作为计算机视觉领域的通用主干网络(backbone)。将Transformer从自然语言处理领域迁移到视觉任务面临诸多挑战,主要源于两个领域的本质差异:视觉实体的尺度变化极大,且图像像素的分辨率远高于文本中单词的表示粒度。为应对这些差异,我们设计了一种分层的Transformer架构,其特征表示通过移位窗口(Shifted Windows)机制进行计算。该移位窗口机制在保持自注意力计算局限于非重叠局部窗口以提升效率的同时,仍能实现跨窗口的信息交互。这种分层结构具备在多尺度上建模的灵活性,并且其计算复杂度与图像尺寸呈线性关系。上述特性使得Swin Transformer能够广泛适用于各类视觉任务,包括图像分类(在ImageNet-1K上达到87.3%的Top-1准确率)、密集预测任务如目标检测(在COCO test-dev上实现58.7 box AP和51.1 mask AP)以及语义分割(在ADE20K验证集上达到53.5 mIoU)。其性能显著超越此前的最先进方法,在COCO数据集上分别提升了+2.7 box AP和+2.6 mask AP,在ADE20K上提升了+3.2 mIoU,充分展示了基于Transformer的模型作为视觉主干网络的巨大潜力。此外,该分层设计与移位窗口策略对纯MLP架构也具有显著的提升作用。相关代码与预训练模型已公开发布于:https://github.com/microsoft/Swin-Transformer。

代码仓库

基准测试

| 基准 | 方法 | 指标 |

|---|---|---|

| image-classification-on-imagenet | Swin-B | GFLOPs: 47 Number of params: 88M Top 1 Accuracy: 86.4% |

| image-classification-on-imagenet | Swin-L | GFLOPs: 103.9 Number of params: 197M Top 1 Accuracy: 87.3% |

| image-classification-on-imagenet | Swin-T | GFLOPs: 4.5 Number of params: 29M Top 1 Accuracy: 81.3% |

| image-classification-on-omnibenchmark | SwinTransformer | Average Top-1 Accuracy: 46.4 |

| instance-segmentation-on-coco | Swin-L (HTC++, multi scale) | mask AP: 51.1 |

| instance-segmentation-on-coco | Swin-L (HTC++, single scale) | mask AP: 50.2 |

| instance-segmentation-on-coco-minival | Swin-L (HTC++, multi scale) | mask AP: 50.4 |

| instance-segmentation-on-coco-minival | Swin-L (HTC++, single scale) | mask AP: 49.5 |

| instance-segmentation-on-occluded-coco | Swin-S + Mask R-CNN | Mean Recall: 61.14 |

| instance-segmentation-on-occluded-coco | Swin-T + Mask R-CNN | Mean Recall: 58.81 |

| instance-segmentation-on-occluded-coco | Swin-B + Cascade Mask R-CNN | Mean Recall: 62.90 |

| instance-segmentation-on-separated-coco | Swin-B + Cascade Mask R-CNN | Mean Recall: 36.31 |

| instance-segmentation-on-separated-coco | Swin-S + Mask R-CNN | Mean Recall: 33.67 |

| instance-segmentation-on-separated-coco | Swin-T + Mask R-CNN | Mean Recall: 31.94 |

| object-detection-on-coco | Swin-L (HTC++, single scale) | box mAP: 57.7 |

| object-detection-on-coco | Swin-L (HTC++, multi scale) | box mAP: 58.7 |

| object-detection-on-coco-minival | Swin-L (HTC++, single scale) | box AP: 57.1 |

| object-detection-on-coco-minival | Swin-L (HTC++, multi scale) | box AP: 58 |

| semantic-segmentation-on-ade20k | Swin-B (UperNet, ImageNet-1k pretrain) | Validation mIoU: 49.7 |

| semantic-segmentation-on-ade20k | Swin-L (UperNet, ImageNet-22k pretrain) | Test Score: 62.8 Validation mIoU: 53.50 |

| semantic-segmentation-on-ade20k-val | Swin-L (UperNet, ImageNet-22k pretrain) | mIoU: 53.5 |

| semantic-segmentation-on-ade20k-val | Swin-B (UperNet, ImageNet-1k pretrain) | mIoU: 49.7 |

| semantic-segmentation-on-foodseg103 | Swin-Transformer (Swin-Small) | mIoU: 41.6 |

| thermal-image-segmentation-on-mfn-dataset | SwinT | mIOU: 49.0 |