
Mingxing Tan; Quoc V. Le

Abstract

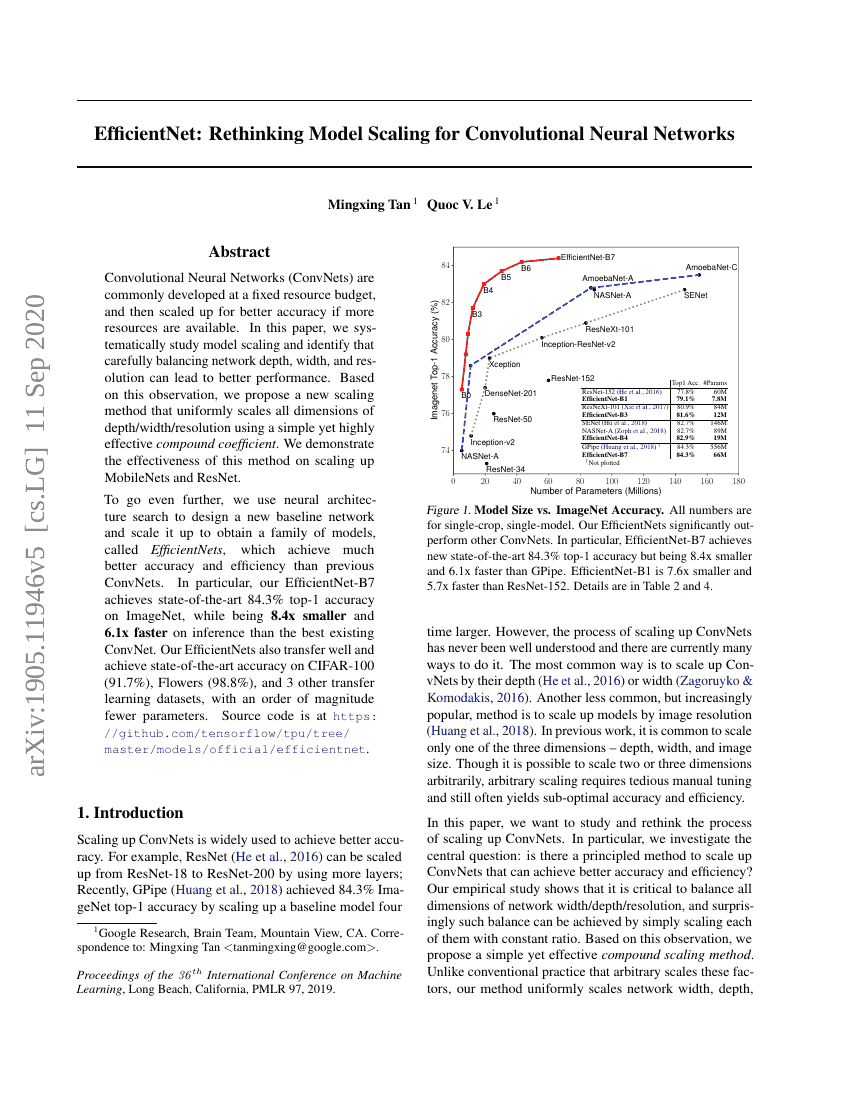

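Convolutional Neural Networks (ConvNets) are commonly developed at a fixed resource budget and then scaled up for better accuracy when more resources become available. In this paper, we systematically study model scaling and find that carefully balancing network depth, width, and resolution leads to better performance. Based on this observation, we propose a new scaling method that uniformly scales all three dimensions (depth, width, and resolution) using a simple yet highly effective compound coefficient. We demonstrate the effectiveness of this method on scaling up MobileNets and ResNet. To go further, we use neural architecture search to design a new baseline network and scale it up to obtain a family of models, called EfficientNets, which achieve much better accuracy and efficiency than previous ConvNets. In particular, our EfficientNet-B7 achieves a state-of-the-art 84.3% top-1 accuracy on ImageNet while being 8.4x smaller and 6.1x faster at inference than the best existing ConvNet. Our EfficientNets also transfer well, achieving state-of-the-art accuracy on CIFAR-100 (91.7%), Flowers (98.8%), and three other transfer learning datasets with an order of magnitude fewer parameters. Source code is available at https://github.com/tensorflow/tpu/tree/master/models/official/efficientnet.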
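The compound scaling rule summarized above can be sketched in a few lines of Python. The abstract itself does not state the formula; the constants here come from the body of the EfficientNet paper, which scales depth by α^φ, width by β^φ, and resolution by γ^φ, with α = 1.2, β = 1.1, γ = 1.15 chosen so that α·β²·γ² ≈ 2 (so FLOPs grow by roughly 2^φ). The function name `compound_scale` is hypothetical, and this is only an illustration of the rule; the exact depth/width/resolution settings of the released B1 to B7 checkpoints may differ.

```python
# Base multipliers as reported in the EfficientNet paper (grid-searched on B0).
ALPHA = 1.2   # depth multiplier base
BETA = 1.1    # width (channels) multiplier base
GAMMA = 1.15  # input resolution multiplier base


def compound_scale(phi: float) -> dict:
    """Hypothetical helper: multipliers for a given compound coefficient phi."""
    depth = ALPHA ** phi
    width = BETA ** phi
    resolution = GAMMA ** phi
    # Conv FLOPs grow roughly linearly with depth and quadratically
    # with width and resolution.
    flops = depth * width ** 2 * resolution ** 2
    return {"depth": depth, "width": width, "resolution": resolution, "flops": flops}


# The paper's constraint: alpha * beta^2 * gamma^2 should be close to 2.
constraint = ALPHA * BETA ** 2 * GAMMA ** 2
print(f"alpha*beta^2*gamma^2 = {constraint:.3f}")

for phi in range(4):
    m = compound_scale(phi)
    print(
        f"phi={phi}: depth x{m['depth']:.2f}, width x{m['width']:.2f}, "
        f"resolution x{m['resolution']:.2f}, FLOPs x{m['flops']:.2f}"
    )
```

With these constants the constraint evaluates to about 1.92, so each unit increase of φ roughly doubles the FLOP budget while spreading the extra capacity across all three dimensions instead of only one.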

Code repositories

Benchmarks

| Benchmark | Method | Metrics |
|---|---|---|
| domain-generalization-on-vizwiz | EfficientNet-B0 | Accuracy - All Images: 34.2; Accuracy - Clean Images: 38.4; Accuracy - Corrupted Images: 27.4 |
| domain-generalization-on-vizwiz | EfficientNet-B1 | Accuracy - All Images: 36.7; Accuracy - Clean Images: 41.5; Accuracy - Corrupted Images: 30.9 |
| domain-generalization-on-vizwiz | EfficientNet-B2 | Accuracy - All Images: 38.1; Accuracy - Clean Images: 42.8; Accuracy - Corrupted Images: 31.4 |
| domain-generalization-on-vizwiz | EfficientNet-B3 | Accuracy - All Images: 40.7; Accuracy - Clean Images: 45.3; Accuracy - Corrupted Images: 34.2 |
| domain-generalization-on-vizwiz | EfficientNet-B4 | Accuracy - All Images: 41.7; Accuracy - Clean Images: 46.4; Accuracy - Corrupted Images: 35.6 |
| domain-generalization-on-vizwiz | EfficientNet-B5 | Accuracy - All Images: 42.8; Accuracy - Clean Images: 47.3; Accuracy - Corrupted Images: 37 |
| fine-grained-image-classification-on-birdsnap | EfficientNet-B7 | Accuracy: 84.3% |
| fine-grained-image-classification-on-fgvc | EfficientNet-B7 | Accuracy: 92.9 |
| fine-grained-image-classification-on-food-101 | EfficientNet-B7 | Accuracy: 93.0 |
| fine-grained-image-classification-on-oxford-1 | EfficientNet-B7 | Accuracy: 95.4% |
| fine-grained-image-classification-on-stanford | EfficientNet-B7 | Accuracy: 94.7% |
| image-classification-on-cifar-10 | EfficientNet-B7 | Percentage correct: 98.9 |
| image-classification-on-cifar-100 | EfficientNet-B7 | PARAMS: 64M; Percentage correct: 91.7 |
| image-classification-on-flowers-102 | EfficientNet-B7 | Accuracy: 98.8% |
| image-classification-on-gashissdb | EfficientNet-B0 | Accuracy: 98.11; F1-Score: 99.01; Precision: 99.94 |
| image-classification-on-imagenet | EfficientNet-B0 | GFLOPs: 0.39; Number of params: 5.3M; Top 1 Accuracy: 76.3% |
| image-classification-on-imagenet | EfficientNet-B1 | GFLOPs: 0.7; Number of params: 7.8M; Top 1 Accuracy: 78.8% |
| image-classification-on-imagenet | EfficientNet-B2 | GFLOPs: 1; Number of params: 9.2M; Top 1 Accuracy: 79.8% |
| image-classification-on-imagenet | EfficientNet-B3 | Number of params: 12M; Top 1 Accuracy: 81.1% |
| image-classification-on-imagenet | EfficientNet-B4 | GFLOPs: 4.2; Number of params: 19M; Top 1 Accuracy: 82.6% |
| image-classification-on-imagenet | EfficientNet-B5 | GFLOPs: 9.9; Number of params: 30M; Top 1 Accuracy: 83.3% |
| image-classification-on-imagenet | EfficientNet-B6 | GFLOPs: 19; Number of params: 43M; Top 1 Accuracy: 84% |
| image-classification-on-imagenet | EfficientNet-B7 | GFLOPs: 37; Number of params: 66M; Top 1 Accuracy: 84.4% |
| image-classification-on-omnibenchmark | EfficientNet-B4 | Average Top-1 Accuracy: 35.8 |
| medical-image-classification-on-nct-crc-he | EfficientNet-B0 | Accuracy (%): 95.59; F1-Score: 97.48; Precision: 99.89; Specificity: 99.45 |
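As a small worked calculation on the ImageNet rows of the benchmark table, the sketch below computes how many top-1 accuracy points each model gains per additional million parameters over the previous model in the family. The parameter counts and accuracies are copied from the table; the "points per extra 1M params" measure and the helper name `marginal_gains` are illustrative assumptions, not metrics reported on this page.

```python
# (model, parameters in millions, ImageNet top-1 %) copied from the benchmark table.
IMAGENET = [
    ("EfficientNet-B0", 5.3, 76.3),
    ("EfficientNet-B1", 7.8, 78.8),
    ("EfficientNet-B2", 9.2, 79.8),
    ("EfficientNet-B3", 12.0, 81.1),
    ("EfficientNet-B4", 19.0, 82.6),
    ("EfficientNet-B5", 30.0, 83.3),
    ("EfficientNet-B6", 43.0, 84.0),
    ("EfficientNet-B7", 66.0, 84.4),
]


def marginal_gains(rows):
    """Hypothetical helper: top-1 points gained per extra 1M params, model to model."""
    gains = []
    for (prev_name, prev_params, prev_acc), (name, params, acc) in zip(rows, rows[1:]):
        gains.append((prev_name, name, (acc - prev_acc) / (params - prev_params)))
    return gains


for prev_name, name, gain in marginal_gains(IMAGENET):
    print(f"{prev_name} -> {name}: {gain:.3f} top-1 points per extra 1M params")
```

The gain falls from about 1.0 point per million parameters (B0 to B1) to under 0.02 (B6 to B7): each step up the family still improves accuracy, but with clearly diminishing returns per added parameter.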